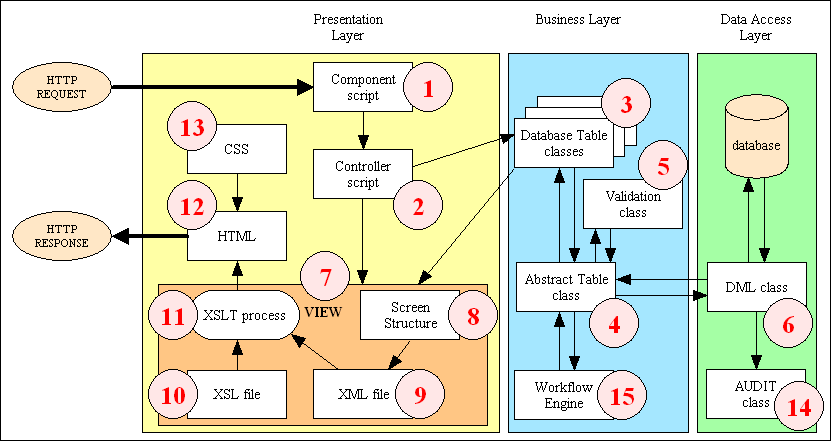

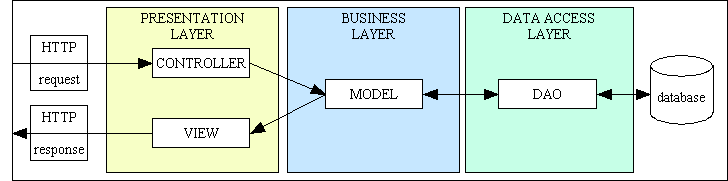

Figure 1 - Radicore Infrastructure Overview

Every once in a while I am told by a developer who looks at my code that he considers it to be nothing but crap. Although the actual wording may be different - your code is unstructured, unreadable, unmaintainable, you don't understand design patterns, yadda yadda yadda - depending on whether the message is posted to a public forum or by private email, the sentiment is the same. The latest criticisms were posted as comments to a Sitepoint article called The PHP 7 Revolution: Return Types and Removed Artifacts, specifically post #152 which lists the following "amateur mistakes":

SQL injection vulnerabilities. Mail header injection vulnerabilities. Global variables up the wazoo. Control logic mixed with DB logic mixed with validation logic mixed with... pretty much every other kind of logic.

Further criticisms (more like attacks and personal insults, actually) have been provided in the following recent Sitepoint discussions:

After reading the numerous criticisms you might also think that my code is crap, but if you looked closer you would actually see that each accusation is totally false. My critics are not making statements of fact, they are merely being echo chambers for outdated ideas. Let me step through each of them and explain why.

If you look at securephpwiki.com you will see an example of code which exposes this exploit. It suggests two possible solutions: either magic_quotes_gpc (which was deprecated in 5.3 and removed in 5.4) or addslashes(). If you look at that sample of my code you will see that I actually use addslashes(), so where is the vulnerability? Not only that, but where a database extension provides its own method of escaping special characters, such as MySQL's real_escape_string, I actually use that within my data access object.
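The escaping described above can be sketched as follows. This is a minimal illustration and not the framework's actual code: the function name `escape_for_sql()` and its optional callback parameter are assumptions made for the example. It uses the database extension's own escaping routine when one is supplied and falls back to addslashes() otherwise.

```php
<?php
// Hypothetical helper (not taken from the framework): escape a user-supplied
// value before it is placed inside a quoted SQL string literal.
function escape_for_sql(string $value, ?callable $driver_escape = null): string
{
    // Prefer the database extension's own routine when one is supplied,
    // for example [$mysqli, 'real_escape_string'].
    if ($driver_escape !== null) {
        return $driver_escape($value);
    }
    // Otherwise fall back to addslashes(), as the text describes.
    return addslashes($value);
}

$name  = "O'Brien";
$where = "last_name='" . escape_for_sql($name) . "'";
echo $where, PHP_EOL; // last_name='O\'Brien'
```

With a live connection the call would look something like `escape_for_sql($name, [$mysqli, 'real_escape_string'])`, where `$mysqli` is an open mysqli connection.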

If you look at securephpwiki.com you will see an example of code which exposes this exploit, with a selection of possible solutions.

In the first place there is nowhere in that code where I am sending an email, so where exactly is the vulnerability?

In the second place where I actually do send out an email using details supplied by the user I have already incorporated the solution which uses the regular expression (although I actually use preg_match() as eregi() has been deprecated).
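A check of this kind might look like the sketch below. The function name `has_header_injection()` and the exact pattern are assumptions made for illustration, not the expression used in the framework. The idea is simply to refuse any value destined for a mail header if it contains a line break, whether raw or URL-encoded.

```php
<?php
// Hypothetical check: a line break inside a header value is how extra
// headers (Bcc:, Cc:, and so on) get smuggled into an outgoing email.
function has_header_injection(string $value): bool
{
    // Matches a raw CR or LF, or their URL-encoded forms, case-insensitively.
    return preg_match('/[\r\n]|%0a|%0d/i', $value) === 1;
}

$from = "visitor@example.com\r\nBcc: victim@example.com";
if (has_header_injection($from)) {
    $from = ''; // refuse the value rather than pass it on to mail()
}
```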

For a definition of global variables please refer to this wikipedia entry.

Whenever somebody says "X is bad", where X in the software world can be anything from global variables to inheritance to dependency injection to design patterns to <name your poison here>, it is usually somebody mis-quoting a statement made by somebody else who said "X can be bad when ...." and thereby changing the statement from conditional to unconditional. Just because it is possible for a bad programmer to misuse "X" and create bad code, that is no reason to prevent a good programmer from using "X" when appropriate to produce good code. How many times has a doctor made the statement "Too much X is bad for you" only to have someone drop the "too much" qualification and report it as "X is bad for you", thus implicitly changing "too much" to "any quantity"? Consuming too much water can be bad for you, but does that mean that we should all stop drinking water?

Whenever I come across such a blanket unconditional statement I am now old enough and wise enough to assume that somebody, usually out of ignorance and not malice, has accidentally dropped the condition that went with the original statement and has therefore changed its true meaning. Whenever I see a blanket rule like this I now ask the question "Under what circumstances is X bad?". If the person quoting that rule cannot justify its existence then it has no right to exist, so I ignore it. If the conditions which make it bad do not exist in my code then it does not apply to my code, so I ignore it.

When you consider the fact that every programming language ever written has support for global variables, I am sure that if they were really bad that support would have been dropped, just like some modern languages no longer support the GOTO statement. When I search for the exact conditions under which global variables are bad I come across arguments such as the following:

This would be a prime example of very bad programming as every good OO programmer should know that an object's state should always be kept inside the object.

Each global variable should be used for one thing and one thing only. Having the same variable used for different things in different parts of the program is a sign of sloppy workmanship on the part of the programmer and is therefore NOT the fault of global variables in general. In my early COBOL days I encountered quite a few programs where the programmer always created a block of global variables called flags, indicators or switches with the names SW1, SW2, SW3 and so on. Each switch could hold a collection of possible values, sometimes just ON or OFF or sometimes a range of values. The definition of each switch could never describe the range of possible values and their meanings, so you had to hunt through the code to find where values were assigned and hope that the code contained a comment as to why that particular value was being assigned at that particular point. That is why when I composed my own programming standards I insisted on the use of condition names which enabled all these issues to be eliminated in one fell swoop.

This depends on the capabilities of the programming language which you are using. For example, in my COBOL days a user could start the online program at 9am, at which point the necessary memory space was assigned, and this space would be used continuously by whatever subprograms were run during the working day. This memory space would not be deallocated until the user terminated his copy of the online program. PHP, on the other hand, is entirely different. Computer memory is not assigned at the start of the user's working day and released at the end. As each web page is run it always starts off without any shared memory whatsoever, and when it finishes any memory which has been allocated is automatically released. It should therefore be obvious that any global variable which is created can ONLY be created during the execution of the current script, and any programmer who is incapable of locating where a particular variable is assigned is, in my humble opinion, a pretty poor programmer indeed.

Agreed, but that is only a problem if a poorly trained programmer makes it a problem.

Then you do not understand what Coupling actually means, so that criticism is bogus.

You can only pollute something if you over-use, mis-use or ab-use that something, so the simple answer is to use it properly, sparingly and intelligently.

I have been writing and testing programs which use global variables for 40 years and I do not recall any problems resulting from their use. Except, that is, in one early version of a particular language which used numbered, not named, global variables to pass arguments from one component to another. The problem arose when the calling component loaded valueX into variable $29 and valueY into variable $30 while the receiving component used variable $30 for valueX and variable $29 for valueY.

If you look at securephpwiki.com you will see a description of a vulnerability which is caused by the use of register_globals. This was deprecated in 5.3 and removed in 5.4, but I stopped using it much earlier, as soon as I heard that it could cause problems. So where exactly is the vulnerability in my code?

Globals in PHP are not really globals in the same sense as they are in other languages. This is because the $GLOBALS variable is bound to the request and is not kept between requests; it is being kept between requests that would make it difficult to identify where a global variable was modified and where it was referenced. This makes the $GLOBALS variable no different from any other variable inside a request - it always starts off as being empty at the start of the request, and is always discarded at the end of the request. This means that anything within the $GLOBALS array was created within, and may only be referenced within, the current request. How can that be such a bad thing? It is surely no more of a problem than the $_GET, $_POST, $_SERVER and $_SESSION arrays.
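The request-bound nature of $GLOBALS can be seen in a small sketch. The function names and the key `mode` are assumptions made purely for illustration. Whatever is read from $GLOBALS must have been written earlier in the same script execution, because nothing is carried over from a previous request.

```php
<?php
// Hypothetical helper functions; the key name 'mode' is illustrative only.
function set_mode(string $mode): void
{
    $GLOBALS['mode'] = $mode;             // created during the current request
}

function get_mode(): string
{
    return $GLOBALS['mode'] ?? 'unknown'; // absent until this request sets it
}

$before = get_mode();   // 'unknown': no earlier request can have left a value
set_mode('update');
$after  = get_mode();   // 'update': assigned a few lines above, in this script
```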

Some people advocate the use of variables inside a global object, but as far as I am concerned this does not offer any benefits over the $GLOBALS superglobal, and as it requires extra effort it seems like wasted effort to me, so I'd rather not bother.

Global variables exist in some form or other in every programming language. They are just a tool, just like every other feature of the language, and just like any tool they can be used in both appropriate and inappropriate circumstances. If someone uses a tool and screws it up then it is a bad workman who blames his tools instead of his own poor workmanship.

Firstly, the way I split my application logic into different components is explained in You have not achieved the correct separation of concerns. This summarises my implementation of the 3-Tier Architecture and the Model-View-Controller design pattern.

Secondly, it is very important to understand the difference between logic and information, which is why I wrote Information is not Logic just as Data is not Code. For example, take the following code snippet:

    $this->sql_select = '.....';
    $this->sql_from   = '.....';
    $where            = '.....';
    $fieldarray = $this->getData($where);

Anyone who describes that as "data access logic" is clearly seeing what isn't there. There are some lines of code which load strings into variables, and a call to a getData method, but this does not touch the database. If you look at this UML diagram you will see that the getData method in the Model will call the _dml_getData method which passes control to a separate DML (Data Manipulation Language) object, which is also known as the DAO (Data Access Object). This is the object responsible for constructing SQL queries and sending them to the relevant database using code similar to that shown in the following code snippet:

    $query = "SELECT SQL_CALC_FOUND_ROWS $select_str FROM $from_str $where_str $group_str $having_str $sort_str $limit_str";
    $result = mysqli_query($this->dbconnect, $query);

There is nothing in snippet #1 which touches the database. You cannot even tell which database extension is being used to access which database, so it cannot be described as "data access logic". The opposite is true with snippet #2.
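The division of labour between the two snippets can be sketched with a pair of reduced classes. The class names `Sketch_Model` and `Sketch_DAO`, the static `$queries` log and the simplified query are all assumptions made for illustration; only the method names getData and _dml_getData come from the text. The Model only loads strings and delegates, while the DAO is the only place where SQL text is assembled.

```php
<?php
// Hypothetical Data Access Object: the only class which assembles SQL text.
class Sketch_DAO
{
    /** @var string[] queries built so far, kept only so the sketch can be inspected */
    public static array $queries = [];

    public function getData(string $select, string $from, string $where): array
    {
        $query = "SELECT $select FROM $from" . ($where !== '' ? " WHERE $where" : '');
        self::$queries[] = $query;
        // A real DAO would now send $query through its database extension,
        // e.g. mysqli_query(); this sketch simply returns no rows.
        return [];
    }
}

// Hypothetical Model: it holds strings and delegates, nothing more.
class Sketch_Model
{
    public string $sql_select = '*';
    public string $sql_from   = '';

    public function getData(string $where): array
    {
        return $this->_dml_getData($where);
    }

    protected function _dml_getData(string $where): array
    {
        $dao = new Sketch_DAO();   // instantiated only when it is needed
        return $dao->getData($this->sql_select, $this->sql_from, $where);
    }
}

$model = new Sketch_Model();
$model->sql_select = 'person_id, last_name';
$model->sql_from   = 'person';
$rows = $model->getData("person_id='42'");
```

Nothing in `Sketch_Model` reveals which database extension is in use, so swapping the DAO for one that targets a different DBMS would leave the Model untouched.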

I use this mechanism in order to provide me with the ability to construct SQL queries which are more complex than the simple SELECT * FROM <tablename>. While other developers devise more obfuscated ways to achieve the same thing, such as using a type of pseudo-SQL which is then translated into proper SQL in an Object Relational Mapper, I prefer the direct approach.

The accusation that each Model class contains validation logic when it should not is completely wrong. Validation logic for an entity is considered to be part of the business logic for that entity, so should therefore be defined within the Model class which you construct for that entity. You should not put any business logic in any place other than the Model - not in a Controller, not in a View, and not in a DAO.

The accusation that each Model class contains formatting logic is completely wrong. In the context of the 3-Tier Architecture the presentation/display logic in the Presentation layer is responsible for transforming the data obtained from the Business layer from its internal format, which is a PHP array, into a different format which is more presentable to the user, such as HTML, CSV or PDF. There is no code in the Business layer which performs this transformation, so it is completely wrong to say that there is presentation logic in the Business layer. The "formatting" logic in the Business layer does not transform the PHP array, it does nothing but format dates and decimal numbers within the array according to the user's language preferences, and that is part of the business logic.

The accusation that each Model class contains workflow logic is completely wrong. There is a small amount of code there only to decide if it is necessary to pass control to a separate workflow object. Having code which passes control to another object is not the same as carrying out the responsibilities of that object.

The actual accusation is:

You have a class with over 120 methods and 9,000 lines, therefore it must be doing too much. It must surely be a "God" class.

For the correct definition of a "God" class please refer to this wikipedia entry which clearly states that this is where all (or most of) a program's functionality is contained in a single component. This is also an example of a monolithic or single tier architecture. In his article 9 Anti-Patterns Every Programmer Should Be Aware Of the author Sahand Saba describes it as:

Classes that control many other classes and have many dependencies and lots of responsibilities.

My code does not fit the description of a "God Object" for several reasons:

If I am using well known design patterns to provide high levels of reusability in my code then how can anyone say that I am wrong based on nothing more than the ability to count?

If my critics would simply take the time to engage their tiny brains before opening their big mouths they would be able to see that my software is based around a combination of the 3-Tier Architecture and Model-View-Controller design pattern, as shown in figure 1:

Figure 1 - Radicore Infrastructure Overview

Note that all the components in the above diagram are clickable links which will take you to the descriptions of those components.

The so-called "God" class, which is item #4 in the above diagram, is not even a concrete class but is an abstract class which is inherited by every Model class (item #3 in the above diagram) in the Business layer. You should also notice that this structure has separate components for the following:

So far from being a "God" class that does everything it should be obvious that:

The methods in this class fall into one of the following categories:

It should also be obvious that each object in the business layer does NOT have many dependencies, it only has one - the data access layer.

There may be over 9,000 lines of code in this abstract class but these are split across 254 methods, so that gives an average of about 35 lines per method. These methods can be categorised as follows:

The true definition of a god class contains the phrase "Most of such a program's overall functionality is coded into a single 'all-knowing' object". While you may think that 9,000 lines is a lot, it is just a small part of the 53,000 LOC that exist in my reusable library. This means that my abstract class contains 9/53rds or 17% of the overall functionality. I don't know who taught you maths, but 17% cannot be described as "most" in anybody's language.

You should also be aware of the following points:

None of the methods in my "monster" class contains logic which belongs in another object, so none of its methods can be moved to another object. Because I have all of my business logic in the Model and none in any Controller or View I have what is known as a "fat model, skinny controller" combination which everyone knows is far better than a "skinny model, fat controller".

If you look closely enough you should see that this arrangement does not match the description "controls many other classes and has many dependencies and lots of responsibilities" for the simple reason that the only dependencies in each Model class are the Data Access Object (item #6 in the above diagram) and the Validation object (item #5 in the above diagram).

Any notion that this class breaks the Single Responsibility Principle (SRP) based on nothing more than the count of methods or lines of code is therefore unscientific and unreliable. It is just as stupid as saying "your class contains only ten methods, therefore it must surely be following SRP". The fact that these counts are higher than those which you have previously encountered just means that you have never worked on an application which is as sophisticated or functionally rich as mine. If you only ever work on puny applications then you can get away with your puny rules, but bigger applications need bigger rules and developers with bigger ideas. Every one of the methods in that class is closely related to other methods, which means that they form a cohesive unit. If you do not understand what "closely related" means then consider these facts:

As you can see establishing "closely related" takes more brain power than simply being able to count.

Robert C. Martin has written several articles on SoC/SRP (see here, here and here) in which he clearly identifies only three responsibilities which should be separated - GUI logic, business logic and database logic. All my Model classes, which inherit from my "monster" abstract class, follow this principle by virtue of the fact that each is responsible for the business rules associated with a single database table. Note the use of the words "single" and "responsible" in that description. In none of these articles does he mention that a class should be limited by the count of methods or lines of code, just by the responsibility that the class carries out. Even if he did he would be wrong as that would violate the rule of encapsulation which specifically states that ALL the methods and ALL the properties should be contained in a SINGLE class.

The problem with most developers today is that they do not understand what the Single Responsibility Principle (SRP) actually means, when to start applying it and when to stop applying it. For a definition of "responsibility" take a look at what Robert C. Martin (Uncle Bob) wrote in his article Test Induced Design Damage?

How do you separate concerns? You separate behaviors that change at different times for different reasons. Things that change together you keep together. Things that change apart you keep apart.

GUIs change at a very different rate, and for very different reasons, than business rules. Database schemas change for very different reasons, and at very different rates than business rules. Keeping these concerns (GUI, business rules, database) separate is good design.

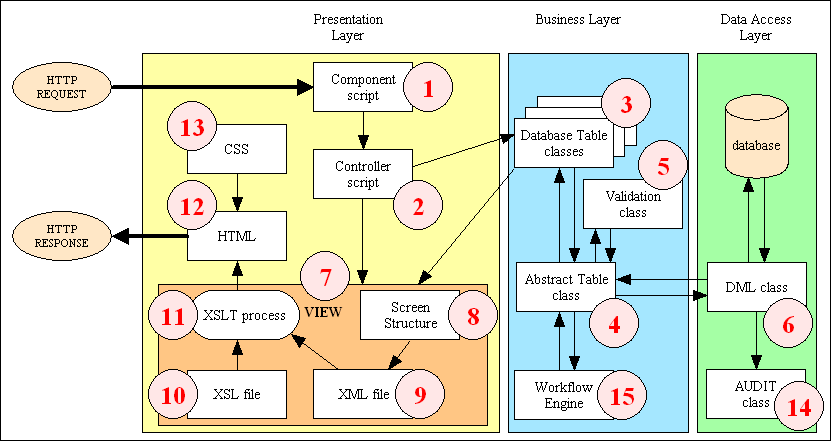

This quote is clearly a description of the 3-Tier Architecture on which my framework is based as this has separate layers for Presentation/GUI logic, Business logic and Data Access logic. This method of modularising an application is also described by Martin Fowler in his article PresentationDomainDataLayering, so it is an accepted and common practice. In addition to this I have also applied the MVC design pattern by splitting the Presentation layer into two separate parts - the Controller and the View, which results in the structure shown in Figure 2.

Figure 2 - The Model-View-Controller structure

Having used SRP to identify the different responsibilities I have created separate classes to deal with each of those responsibilities according to the rules of encapsulation. After that point I stopped applying SRP as to go any further would be to go too far and change a modular system of cohesive units into a fragmented system in which all unity and cohesion would be lost. When splitting a large piece of code into smaller units you have to strike a balance between cohesion and coupling. Too much of one could lead to too little of the other. This is what Tom DeMarco wrote in his book Structured Analysis and System Specification:

Cohesion is a measure of the strength of association of the elements inside a module. A highly cohesive module is a collection of statements and data items that should be treated as a whole because they are so closely related. Any attempt to divide them would only result in increased coupling and decreased readability.

Having methods in the same class which are not related would be wrong, just as having methods which are related but placed in different classes would be wrong. It is a question of balance, so when applying any software principle the intelligent developer has to know if and when to start applying it but also when to stop. This cannot be taught, it can only be learned. Sadly, too many of today's developers can do nothing except echo what they have been taught. They are not prepared to think for themselves, they are not prepared to question what they are taught, and they are not prepared to experiment and try out new ideas. All they can do is perpetuate the myths and legends spewed forth by these snake oil salesmen, and they cannot understand why theory and practice are not the same.

Unless you have your head stuck so far up your a**e you can contemplate your navel from the inside you should clearly see that the architecture shown in Figure 2 is anything but monolithic as it has been broken down into a collection of separate modules each with their own distinct responsibility. If you still don't understand what it means then let me give you the ten cent tour:

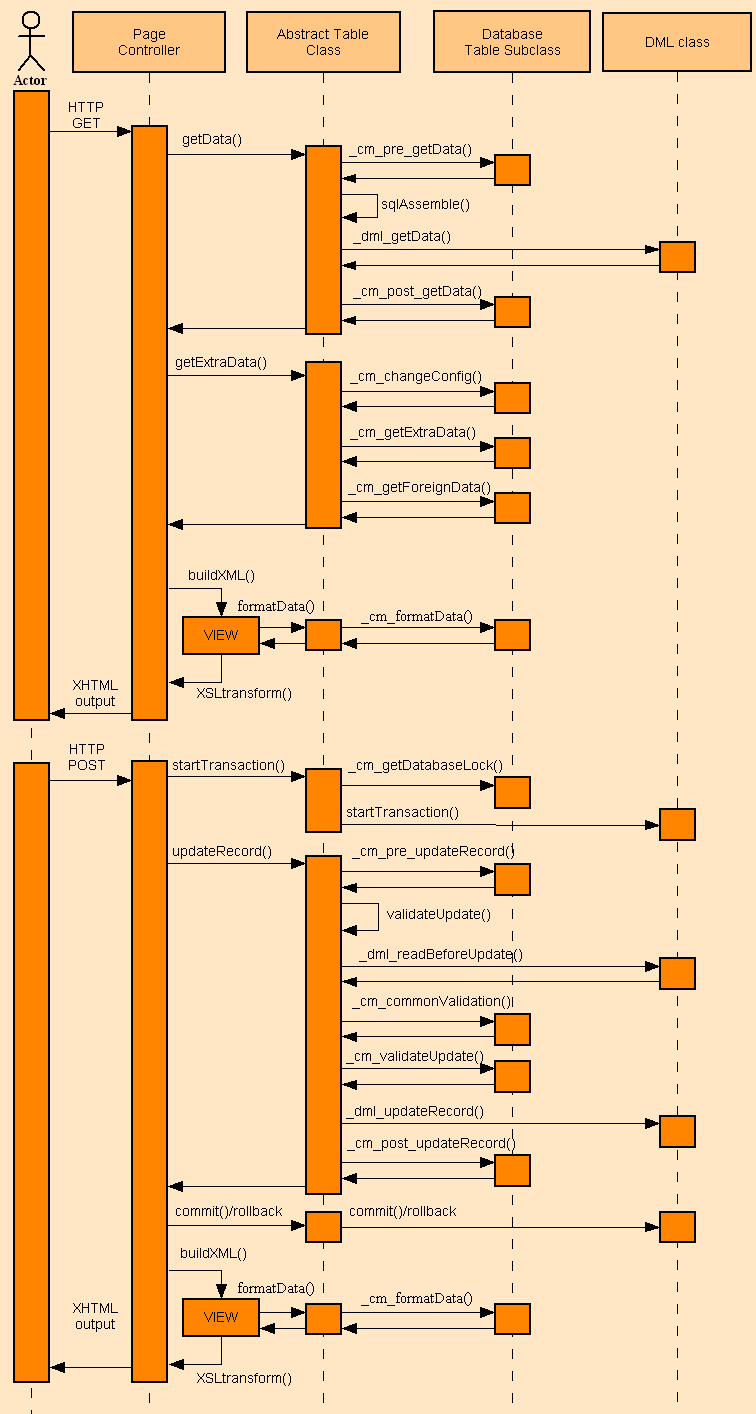

Please note that a method called by a Controller may involve calling a series of sub-methods which break that processing into logical steps, as shown in the UML diagram in figure 3:

Figure 3 - UML diagram for Update 1

Note that the prefix on the method name denotes its usage:

For those of you who cannot digest information which is presented in a picture, there are a thousand words in Table 1:

When a method is called from the Controller it sometimes leads to calls on one or more internal methods, and sometimes touches the database through the Data Access Object.

I have not numbered the duplicate entries for _getDBMSengine(), so you should see that there are 42 methods just to complete a simple update of a single record. Also note that 20 of these methods (the ones with the "_cm_" prefix) are customisable and exist only so that they may be copied to a concrete class and filled with code in order to override the default behaviour. If you think that 42 methods for a simple update task is too much then either you have difficulty counting above 10 without taking your shoes and socks off, in which case you are an idiot and your opinion does not count, or quite obviously you have never worked on a sophisticated and feature-rich enterprise application, so you are not qualified to have an opinion on such matters.

Every one of those methods is there for the same reason - to deal with data which is either going into or coming out of a database table - and belongs in the Model/Business layer and not in the Controller/View/Presentation layer. If you also look closely you should see that, with limited exceptions, all those methods have the table data as an argument. This data is also available as $this->fieldarray, so if all those methods perform different operations on the same data then how can you say that they don't belong together in the same class? To do otherwise would surely be a violation of encapsulation.

If I can justify having 42 methods in my Model class just to cater for one update pattern, then it should not be unreasonable to have additional methods to deal with the other 50 Transaction Patterns in my library, which means that 120 methods in total is not so ridiculous after all.

It should be clear to anybody but the blind that all the code I have within my "monster" abstract table class is there because it is part of the Model and cannot logically be moved to the Controller, View or DAO. If there is no logical reason to move the code elsewhere then you are wasting your time by inventing an arbitrary rule that has no basis in logic. Whether you like it or not each of those 120 or so methods in my "monster" class is there to perform an operation in the business layer, and by splitting it into artificially small units I would be taking a cohesive modular system and turning it into a disjointed fragmented system, and everyone knows that such a system would be more difficult to read, more difficult to understand, more difficult to maintain and more difficult to enhance. So said Tom DeMarco in his book Structured Analysis and System Specification:

Cohesion is a measure of the strength of association of the elements inside a module. A highly cohesive module is a collection of statements and data items that should be treated as a whole because they are so closely related. Any attempt to divide them would only result in increased coupling and decreased readability.

If you look carefully at my abstract table class you will see that it only has the following dependencies:

Since when can this small number of dependencies be classed as "too many"?

One of the aims of OOP is to increase code reuse, and here I have an abstract class which enables me to share 9,000 lines of code among 300+ model classes using the single word "extends", so what can be wrong with that?

This claim, made in this sitepoint post completely contradicts what was said in this sitepoint post and this post and this post and this post and this post and this post and this post and this post and this post and this post and this post. If you look very carefully you will see that all these posts were made by the same person, a certain s_molinari.

How is it possible for the same person to first accuse me of creating a monster "god" class which tries to do everything, then complain that it also fits the description of an anemic domain model which does practically nothing? The wikipedia article describes this as follows:

Anemic domain model is the use of a software domain model where the domain objects contain little or no business logic (validations, calculations, business rules etc).

The name "anemic domain model" was first described by Martin Fowler as follows:

The basic symptom of an Anemic Domain Model is that at first blush it looks like the real thing. There are objects, many named after the nouns in the domain space, and these objects are connected with the rich relationships and structure that true domain models have. The catch comes when you look at the behavior, and you realize that there is hardly any behavior on these objects, making them little more than bags of getters and setters. Indeed often these models come with design rules that say that you are not to put any domain logic in the domain objects. Instead there are a set of service objects which capture all the domain logic. These services live on top of the domain model and use the domain model for data.

The fundamental horror of this anti-pattern is that it's so contrary to the basic idea of object-oriented design; which is to combine data and process together.

If you looked closely at the definition of each concrete table class you would see that it inherits from an abstract table class, and it is this abstract class which provides the standard processing. All data validation is performed by a single validation object which is called by code inherited from the abstract table class. This uses the contents of the $fieldspec array for each class to validate that each field within $fieldarray matches its specifications. The fact that every method called from a Controller on a Model is an instance of the Template Method Pattern means that any business rules can be inserted into the relevant "hook" methods which can be added to any Model class.
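The arrangement described above can be sketched in a heavily reduced form. Everything here is an assumption made for illustration: the class names, the `required` key inside $fieldspec, the specific hook names and the inline validation (which the text says is really performed by a separate validation object). Only the ideas come from the text: an abstract class supplies the fixed sequence of steps, $fieldspec drives the validation of $fieldarray, and a concrete class adds business rules by overriding "_cm_" hook methods.

```php
<?php
// Hypothetical, heavily reduced stand-in for the abstract table class.
abstract class Sketch_Table
{
    protected array $fieldspec = [];   // field name => specification
    public array $errors = [];

    // Template method: the sequence of steps is defined once, here.
    public function insertRecord(array $fieldarray): array
    {
        $this->errors = [];
        $fieldarray = $this->_cm_pre_insertRecord($fieldarray);      // hook
        $this->validate($fieldarray);                                 // standard step
        if (empty($this->errors)) {
            $fieldarray = $this->_cm_post_insertRecord($fieldarray); // hook
        }
        return $fieldarray;
    }

    // Standard validation driven by $fieldspec (required fields only in this sketch).
    private function validate(array $fieldarray): void
    {
        foreach ($this->fieldspec as $field => $spec) {
            $value = $fieldarray[$field] ?? '';
            if (!empty($spec['required']) && $value === '') {
                $this->errors[$field] = 'This field is required';
            }
        }
    }

    // Hooks do nothing by default; a concrete class may override them.
    protected function _cm_pre_insertRecord(array $fieldarray): array { return $fieldarray; }
    protected function _cm_post_insertRecord(array $fieldarray): array { return $fieldarray; }
}

// Hypothetical concrete class for a single database table.
class Sketch_Person extends Sketch_Table
{
    protected array $fieldspec = [
        'last_name' => ['required' => true],
        'initials'  => [],
    ];

    // A business rule inserted through a hook: default the initials from the name.
    protected function _cm_pre_insertRecord(array $fieldarray): array
    {
        if (($fieldarray['initials'] ?? '') === '' && ($fieldarray['last_name'] ?? '') !== '') {
            $fieldarray['initials'] = strtoupper(substr($fieldarray['last_name'], 0, 1));
        }
        return $fieldarray;
    }
}

$person    = new Sketch_Person();
$ok        = $person->insertRecord(['last_name' => 'smith']);
$okErrors  = $person->errors;
$bad       = $person->insertRecord(['last_name' => '']);
$badErrors = $person->errors;
```

The concrete class contains nothing but its field specifications and one business rule, yet the object it produces carries both data and behaviour.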

If you look at what I wrote in reply to You have created a monster "god" class you should see that I have correctly broken down my application into separate units - Controllers, Views, Models and Data Access Objects - where all domain logic, which includes data validation and business rules, exists in, and ONLY in, the domain or Business layer. This layer is comprised of a collection of Model classes, one for each database table. The fact that each Model class, when combined with the abstract class, contains logic for data validation, business rules and task-specific behaviour should completely disprove the accusation that it does not do enough, just as the fact that each Model does not contain any control logic, view logic and data access logic should completely disprove the accusation that it tries to do too much.

Accusations that my Model class does either too much or too little show that my accusers do not really understand what these terms actually mean. Let me enlighten you:

So if the claim that my Model classes do "too much" is false, and the claim that my Model classes do "too little" is also false, where does it place them? Securely in the middle, I would say, and guess what description sits between "too much" and "too little"? You've guessed it - "just right". This, as far as I am concerned, is just about as close to perfection as it is possible to get. It also fits the description of the Goldilocks Principle.

For a definition of the singleton pattern please refer to this wikipedia entry which states the following:

In software engineering, the singleton pattern is a software design pattern that restricts the instantiation of a class to one object. This is useful when exactly one object is needed to coordinate actions across the system.

Note that this description identifies what the singleton is supposed to achieve - having a single instance of an object which can be accessed from multiple places - but it does not identify how this should be achieved. The actual implementation is left entirely to the developer, so I have chosen an implementation which achieves the objectives with the minimum number of adverse side effects. If other developers have problems with singletons then I would suggest that it is their implementation of the pattern which is faulty and not the pattern itself.

When people tell me that singletons are bad they can rarely explain exactly what the problems are supposed to be. After a bit of research I found the following:

They are supposed to, you dummy! The whole idea behind having a singleton is that it is globally accessible from anywhere within your application. It can only be globally accessible if it exists in global scope. Besides, I do not consider that using global namespace is exactly the same as polluting it. It is simply not possible to pass every variable that may be used in the list of arguments which are passed to a function or a method, which is where the careful use of global variables provides a simple solution. Note that the careful use of global variables is NOT the same as indiscriminate use.

This is supposedly because they produce two responsibilities:

I think that this statement is invalid as it relies on what I consider to be a totally perverse interpretation of what the Single Responsibility Principle (SRP) actually means. If a class can only contain methods which are actually used by the object itself then how come a class can contain a constructor and a destructor? A PERSON object cannot construct or destroy itself, so why should it contain methods which perform those operations? Surely those methods are performed ON an object and not WITHIN it, so should be written as construct($object) and destroy($object) instead of $object->construct() and $object->destroy(). You may think that this idea is totally perverse, but it is merely an extension of a different perverse idea.

Besides, my implementation does not use a getInstance() method within each class, so there is no code within the class which dictates how many instances may exist at any one time.

Sometimes a class is coded so that its constructor is private, which means that it can only be instantiated using the getInstance() method, but guess what? I don't! It is totally up to me whether I use new or getInstance(), the class itself does not have any control over how it is instantiated.

A common example of this problem is where an application was originally coded to only use a single database instance, which then disallowed a future requirement to use more than one instance. A feature of my implementation is that I NEVER instantiate a database instance and then inject it into the object which may want to use it, I wait until I actually want to communicate with the database and only instantiate the object at the last possible moment. As I have complete control over the name which I use for each instance I am able to create more than one instance of the same database server or even have instances for different database servers at the same time.

Not if you use new on the subclass. When I use singleton::getInstance() on the subclass it does not start by firing the getInstance() method on the parent class, it simply executes new on the subclass.
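As a rough illustration of the approach described above, here is a minimal sketch of a standalone singleton helper. The class name `singleton` and the `getInstance()` method come from the text; everything else (the internal `$instances` array and the `Person` and `Customer` classes) is hypothetical and is not taken from the actual framework code.

```php
<?php
// Hypothetical sketch: a separate helper class holds the instances, so the
// classes being instantiated need no getInstance() method of their own.
class singleton
{
    // one stored instance per class name (assumed internal structure)
    private static $instances = array();

    public static function getInstance($class)
    {
        if (!isset(self::$instances[$class])) {
            // simply executes "new" on the named class, which may be a subclass
            self::$instances[$class] = new $class();
        }
        return self::$instances[$class];
    }
}

// illustrative classes with ordinary public constructors
class Person
{
    public $name = '';
}

class Customer extends Person
{
}

$a = singleton::getInstance('Customer');   // shared instance
$b = singleton::getInstance('Customer');   // same object as $a
$c = new Customer();                       // "new" still works and gives a separate object
$p = singleton::getInstance('Person');     // the parent class gets its own instance
```

Because the constructors stay public, the choice between `new` and `getInstance()` rests with the calling code and not with the class being instantiated.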

Not in my framework they don't! I do not have Model classes which have to be configured before they can be used, so this is not a problem. Each class has a single configuration except for my database class where each instance is tied to a single database server with its own connection parameters. If I ever need to access more than one database server in the same script then my code is clever enough to use a separate instance for each server.

So what? This is only a problem if a user of a singleton changes its state which can then cause problems to other users of that singleton. I do not have this problem in my framework as whenever I consume the services of a singleton object I extract whatever state I need from that object and store it locally, and if another user of that singleton does something to change its state it has no side effect on any previous users.

I disagree completely. They are just one method of obtaining a dependent object, but although a dependency is a sign of coupling it is not automatically a sign of tight coupling. If a module interacts with a dependent module through a simple and stable interface and does not need to be concerned with the other module's internal implementation then this fits the description of loose coupling.

As you can see, I do not have the "problems" that other programmers have in their inferior code, so as far as I am concerned singletons are NOT evil at all. Perhaps the real problem lies with their particular implementations and not the concept itself.

You might also want to read what Robert C. Martin has to say on this topic in his article called The Little Singleton. There is also an article called What's so bad about the Singleton? by Troels Knak-Nielsen.

So what? It may come as a surprise to you, but the three fundamental principles of OO are Encapsulation, Inheritance and Polymorphism. That is not just my personal opinion, it is also the opinion of the man who invented the term. In addition, Bjarne Stroustrup (who designed and implemented the C++ programming language), provides this broad definition of the term "Object Oriented" in section 3 of his paper called Why C++ is not just an Object Oriented Programming Language:

A language or technique is object-oriented if and only if it directly supports:

- Abstraction - providing some form of classes and objects.

- Inheritance - providing the ability to build new abstractions out of existing ones.

- Runtime polymorphism - providing some form of runtime binding.

If some idiot is now attempting to redefine what OO means then I shall take great pleasure in ignoring him.

There is nothing wrong with inheritance provided that it is used properly. The problem is that there are too many people who do not understand what "properly" means. A common mistake is to create class hierarchies which are often six or more levels deep, which caused Paul John Rajlich to say the following:

Most designers overuse inheritance, resulting in large inheritance hierarchies that can become hard to deal with.

In the same article he said:

One way around this problem is to only inherit from abstract classes.

Guess which method I use? All my concrete Model classes inherit from a single abstract class, so I don't have complex class hierarchies at all. So not only do I not have the problem caused by complex hierarchies, I have already implemented the preferred solution.
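To illustrate the shape of such a hierarchy, here is a minimal sketch in which every concrete class extends one abstract class directly, so the hierarchy is never more than one level deep. The names `AbstractTable`, `PersonTable`, `ProductTable` and `describe()` are hypothetical and are not taken from the framework.

```php
<?php
// Hypothetical sketch: shared behaviour lives in one abstract class
abstract class AbstractTable
{
    protected $tablename = '';

    // common code inherited unchanged by every concrete subclass
    public function describe()
    {
        return 'table: ' . $this->tablename;
    }
}

// each concrete class supplies only what is specific to it
class PersonTable extends AbstractTable
{
    protected $tablename = 'person';
}

class ProductTable extends AbstractTable
{
    protected $tablename = 'product';
}

$person  = new PersonTable();
$product = new ProductTable();
echo $person->describe(), "\n";   // table: person
echo $product->describe(), "\n";  // table: product
```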

The idea that inheritance breaks encapsulation I also find to be completely nonsensical.

As for the equally ridiculous notion that I should favour composition over inheritance I have already answered that.

Here someone is objecting to the fact that I have created a function called is_True(). Why shouldn't I? This is the code that it contains:

    function is_True ($value)
    // test if a value is TRUE or FALSE
    {
        if (is_bool($value)) return $value;

        // a string field may contain several possible values
        if (preg_match('/^(Y|YES|T|TRUE|ON|1)$/i', $value)) {
            return true;
        } // if

        return false;

    } // is_True

As you can see the value "true" can be represented in a variety of ways (boolean, string, numeric). Why? Because different DBMS engines have a different way, or several different ways, of allowing BOOLEAN fields to be defined. In HTML the only boolean control is a checkbox, and when selected the value returned is "on". As I have to cater for all the possible options this makes the code a little complex. When I want to perform this test I can either write out that same block of code again and again, or I can follow the DRY principle and put it into a reusable library. How can this be wrong?
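As a quick usage sketch, the block below repeats the function and feeds it some of the representations mentioned above. The sample values are illustrative only and are not taken from any particular DBMS.

```php
<?php
function is_True($value)
{
    // a genuine boolean is returned as-is
    if (is_bool($value)) return $value;

    // strings (and numbers, which PHP converts to strings here) are pattern-matched
    if (preg_match('/^(Y|YES|T|TRUE|ON|1)$/i', $value)) {
        return true;
    }
    return false;
}

// the first six samples are "true" in one form or another, the rest are not
$samples = array(true, 'Y', 'yes', 'on', '1', 1, false, 'N', 'off', '0', 0, '');
$results = array();
foreach ($samples as $sample) {
    $results[] = is_True($sample);
}
```

Every caller gets the same answer for the same input without repeating the pattern match, which is the point of putting the test in one reusable function.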

Rather than my approach being described as "too simple" have you ever considered that it is your approach which can be described as "too complex"? I have always been a follower of the KISS principle (which is also known as Do The Simplest Thing That Could Possibly Work) which means that I always start with a simple solution and only add complexity when it is absolutely necessary. This approach is supported in the article KISS With Essential Complexity.

Somebody once told me:

If you have one class per database table you are relegating each class to being no more than a simple transport mechanism for moving data between the database and the user interface. It is supposed to be more complicated than that.

But why exactly should it be more complex than that? For my in-depth response please look at Your approach is too simple and also Why is OOP so complex?.

The problem with complexity was highlighted in this quote from C.A.R. Hoare:

There are two ways of constructing a software design. One way is to make it so simple that there are obviously no deficiencies. And the other way is to make it so complicated that there are no obvious deficiencies.

Martin Fowler, the author of Patterns of Enterprise Application Architecture (PoEAA) wrote:

Any fool can write code that a computer can understand. Good programmers write code that humans can understand.

Here is an alternative translation:

Any idiot can write code that only a genius can understand. A true genius can write code that any idiot can understand.

Or to put it another way:

The mark of genius is to achieve complex things in a simple manner, not to achieve simple things in a complex manner.

The problem with a lot of today's programmers is that they think that if a concept is too simple then anybody could do it, in which case they would not be able to demand such a high salary. These are the people who ignore the KISS principle and instead use the LMIMCTIRIJTPHCWA principle. Is this because they are deliberately making things more complex than they need be, or that they genuinely don't know how to reduce a solution down to its simplest elements?

These two different approaches - either simple or complex - could also be characterised in the following ways:

Followers of this approach do enough to get the job done, produce well structured and readable code, and then stop.

Followers of this approach do enough to get the job done, then spend an equal amount of time in making it "purer", "holier than the Pope", making it use as many design patterns as possible, or apply as many principles as possible.

Just for your amusement I have taken my ideas on keeping software simple and essential and put them in an article called A minimalist approach to Object Oriented Programming with PHP. Hopefully my heretical views will either cause you to have an apoplectic fit or choke on your morning coffee.

Although this criticism has been aimed at me personally, it can just as well be aimed at every other developer in the world. What is my justification for saying that? For the simple reason that Nobody Agrees On What OO Is and there are too many different Definitions For OO. In my article What is Object Oriented Programming I reject the following definitions:

If you think that list of items is bad, then look at Abstraction, Encapsulation, and Information Hiding for different descriptions of each. The big problem with these different descriptions is that each description can be interpreted in a different way, and each different interpretation will be followed by its own set of different implementations. It is at that point that you start getting arguments along the lines of "MY interpretation is right, YOURS is wrong!" and "MY implementation is right, YOURS is wrong!"

As well as that collection of possible definitions, there is also a large collection of possible implementation techniques which some people regard as requirements but which I dismiss as optional extras:

This list is discussed in greater detail in A minimalist approach to Object Oriented Programming with PHP. The fact that they are optional means that it is my choice whether I use them or not, and because I consider none of them to be of any value I choose not to use any of them.

If there are that many definitions of OO, and many different ways in which each definition can be implemented, isn't it rather arrogant of someone to say "My definition and implementation of OO are correct, so anything which is different must be incorrect!"

As far as I am concerned the only definition of OO which is worth any consideration is the following:

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Polymorphism, and Inheritance to increase code reuse and decrease code maintenance.

As far as I am concerned OOP requires nothing more than the correct application of Encapsulation, Inheritance and Polymorphism, and if any programmer is incapable of writing effective software using nothing more than these three concepts then he/she is just that - incapable.

I have never said that my implementation is the best implementation, or the only acceptable implementation, just that it is different, and the fact that it is different should be totally irrelevant. You may not like my implementation, but as far as I am concerned it is in accordance with these definitions and the results speak for themselves.

All too often I am told that I am not using the right design patterns, or that I have not implemented them properly. It appears that far too many people judge the quality of a piece of software simply by counting the number of design patterns that it uses. This, to me, is a classic sign of pattern abuse.

In the article How to use Design Patterns there is this quote from Erich Gamma, one of the authors of the GOF book:

Do not start immediately throwing patterns into a design, but use them as you go and understand more of the problem. Because of this I really like to use patterns after the fact, refactoring to patterns. One comment I saw in a news group just after patterns started to become more popular was someone claiming that in a particular program they tried to use all 23 GoF patterns. They said they had failed, because they were only able to use 20. They hoped the client would call them again to come back again so maybe they could squeeze in the other 3.

Trying to use all the patterns is a bad thing, because you will end up with synthetic designs - speculative designs that have flexibility that no one needs. These days software is too complex. We can't afford to speculate what else it should do. We need to really focus on what it needs. That's why I like refactoring to patterns. People should learn that when they have a particular kind of problem or code smell, as people call it these days, they can go to their patterns toolbox to find a solution.

A lot of the patterns are about extensibility and reusability. When you really need extensibility, then patterns provide you with a way to achieve it and this is cool. But when you don't need it, you should keep your design simple and not add unnecessary levels of indirection.

This sentiment is echoed in the article Design Patterns: Mogwai or Gremlins? by Dustin Marx:

The best use of design patterns occurs when a developer applies them naturally based on experience when need is observed rather than forcing their use.

The GOF book actually contains the following caveat:

Design patterns should not be applied indiscriminately. Often they achieve flexibility and variability by introducing additional levels of indirection, and that can complicate a design and/or cost you some performance. A design pattern should only be applied when the flexibility it affords is actually needed.

In the blog post When are design patterns the problem instead of the solution? T. E. D. wrote:

My problem with patterns is that there seems to be a central lie at the core of the concept: The idea that if you can somehow categorize the code experts write, then anyone can write expert code by just recognizing and mechanically applying the categories. That sounds great to managers, as expert software designers are relatively rare. The problem is that it isn't true.

The truth is that you can't write expert-quality code with "design patterns" any more than you can design your own professional fashion designer-quality clothing using only sewing patterns.

In my world I will only implement a design pattern if I genuinely have the problem that the pattern was originally designed to solve. The fact that some programmers like to use them everywhere instead of where necessary is no reason why I should follow such an evil practice.

A typical example of this attitude can be found in the Sitepoint discussion at Dependency Injection Breaks Encapsulation. The original idea behind Dependency Injection, as described in Robert C. Martin's article The Dependency Inversion Principle, clearly demonstrates that it has benefits only when a dependency can be supplied from several sources, as it moves the code which decides which of those sources to use from inside the program to outside. The key phrase here is "when a dependency can be supplied from several sources", so if I have a dependency which can only ever be supplied from a single source, without the possibility of any alternatives, then the raison d'être for that pattern no longer exists, in which case I feel perfectly justified in not using it. Yet this simple application of logic does not work with some people; they seem to think that because a pattern can be used everywhere it therefore should be used everywhere. I dislike this attitude, and I'm not the only one. In Dependency Injection Objection Jacob Proffitt writes the following:

The claim made by these individuals is that The Pattern (it can be any pattern, but this is increasingly frequent when referring to Dependency Injection) is universally applicable and should be used in all cases, preferably by default. I'm sorry, but this line of argument only shows the inexperience or narrow focus of those making the claim.

Claims of universal applicability for any pattern or development principle are always wrong.

In his article Non-DI code == spaghetti code? the author makes the following observation:

Is it possible to write good code without DI? Of course. People have been doing that for a long time and will continue to do so. Might it be worth making a design decision to accept the increased complexity of not using DI in order to maximize a different design consideration? Absolutely. Design is all about tradeoffs.

It is of course possible to write spaghetti code with DI too. My impression is that improperly-applied DI leads to worse spaghetti code than non-DI code. It's essential to understand guidelines for injection in order to avoid creating additional dependencies. Misapplied DI seems to involve more problems than not using DI at all.
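To make the distinction between "several sources" and "a single source" concrete, here is a minimal sketch contrasting the two situations. All class names (`Mailer`, `SmtpMailer`, `LogMailer`, `Notifier`, `DateFormatter`, `Invoice`) are hypothetical and are used purely for illustration; none of them comes from the framework or from the articles quoted above.

```php
<?php
// Case 1: the dependency can be supplied from several sources.
abstract class Mailer
{
    abstract public function send($to, $body);
}

class SmtpMailer extends Mailer
{
    public function send($to, $body) { return 'smtp:' . $to; }
}

class LogMailer extends Mailer
{
    public function send($to, $body) { return 'log:' . $to; }
}

class Notifier
{
    private $mailer;

    // the caller decides which alternative to supply, so injection has a purpose
    public function __construct(Mailer $mailer)
    {
        $this->mailer = $mailer;
    }

    public function notify($to)
    {
        return $this->mailer->send($to, 'hello');
    }
}

// Case 2: the dependency can only ever come from one source.
class DateFormatter
{
    public function format($timestamp) { return gmdate('Y-m-d', $timestamp); }
}

class Invoice
{
    public function header($timestamp)
    {
        // no alternative exists, so the object is created where it is needed
        $formatter = new DateFormatter();
        return 'Invoice dated ' . $formatter->format($timestamp);
    }
}

$viaSmtp = (new Notifier(new SmtpMailer()))->notify('a@example.com');
$viaLog  = (new Notifier(new LogMailer()))->notify('a@example.com');
$header  = (new Invoice())->header(0);
```

In the first case the choice of mailer is moved outside `Notifier`; in the second there is no choice to move, so injecting the formatter would add indirection without adding flexibility.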

The only design pattern I had in mind before writing my first line of code was the 3-Tier Architecture which I encountered in a prior language before switching to PHP. Later I read about the singleton pattern, but I did not like the implementations which I saw, so I devised one of my own. Some people say that singletons are evil, but I disagree. Other patterns just magically appeared in my code after a bit of refactoring, but that was by accident, not design (pun intended!). Among these are the following:

You may find other patterns in my code, if you look hard enough (such as Class Table Inheritance, Concrete Table Inheritance, Table Module, Active Record and the Transform View), but that's entirely up to you. You may find places where you think I should be using one of your favourite patterns, or where you think that my implementation is wrong, but I would keep those thoughts to yourself because I'm not interested.

Oh yes I am! It's just that I'm not following the same set of best practices as you are. If you took your head out of the sand and looked around you would actually be aware that there is no single document which is universally accepted as "best practice" by all developers. Even if someone had the gall to present one, I'm afraid that you would never get everyone to agree on its contents. If you typed "best practice" into your search engine you would be presented with hundreds of millions of hits, each of which gives a different opinion on what is "best". This is a subject on which there are millions of different opinions, so the idea of a consensus is nothing but a joke, a pipe dream. So if there is no single document, just 100,000,000 alternatives, how do you identify which ones to follow? How do you sort the wheat from the chaff? What happens if I choose a different set of "best practices" from you? Does that automatically make me wrong and you right?

In my long career of working in many different teams for many different organisations and with several different languages I have come across this notion of "common practice" being elevated into "best practice" which everyone else is then obliged to follow. Those who deviate from this "best practice" are automatically branded as deviants or heretics. A particular practice only becomes "common" in a particular group simply because everyone is forced to do it that way. The practice can be several years old and could have been devised by someone of average or even below-average ability. Changes in the capabilities of the language or the skill level of the current programmers are never taken into consideration. The common mantra seems to be "we do it this way because we have always done it this way". Before a practice can be elevated from "common" to "best" it should really be evaluated against other practices. "Best" means "better than all the others", but if you do not know of any others then how can you possibly claim that your practice is better than them?

The big problem is that these "best practices" never originated from a single authoritative source, they have been added to and re-interpreted over several decades by any Tom, Dick and Harry with an opinion. This is like trying to combine the recipes from a multitude of different cook books and expecting the result to be a gourmet meal when in fact it is more likely to be a dog's dinner.

There is no such thing as a single unified set of best practices just as there is no single unified programming language, no single unified programming paradigm, no single unified religion or single unified political theory. What is good for one may be god-awful to another. One man's answer is another man's anathema. One man's meat is another man's poison. One man's purity is another man's putrefaction. We are each free to adopt those practices which suit us best, and that is what I will continue to do whether you like it or not.

In his article The Dark Side of Best Practices Petri Kainulainen writes:

When best practices are treated as a final solution, questioning them is not allowed. If we cannot question the reasons behind a specific best practice, we cannot understand why it is better than the other available solutions.

If best practices get in the way, we should not follow them. This might sound a bit radical, but we should understand that the whole idea of best practices is to find the best possible way to develop software. Thus, it makes no sense to follow a best practice which does not help us to reach that goal.

Later in the same article he says the following:

In the end, best practices are just opinions. It is true that some opinions carry more weight than others but that doesn't mean that those opinions cannot be proven wrong. I think that it is our duty to overthrow as many best practices as we can.

Why?

It helps us to find better ways to do our job.

The only "best practices" which I follow are the bare essentials which can be described as being universally applicable such as:

When other people go beyond these bare essentials and attempt to impose additional rules which go down into more and more levels of nit-picking detail then I consider that they have crossed the line and are moving into forbidden territory. They are moving from universally applicable to personal preferences and I find this level of interference more likely to cause friction than to solve real-world problems, so I feel justified in ignoring such petty and ridiculous rules altogether. Examples of such petty rules which I love to ignore are:

Here are some other articles which question the idea of "best practices":

It is often said that arguments about which programming style is best are on the same level as arguments about which religion is best, as they are both based on bigotry, which is defined in Wikipedia as follows:

It refers to a state of mind where a person is obstinately, irrationally, or unfairly intolerant of ideas, opinions, or beliefs that differ from their own, and intolerant of the people who hold them.

If you examine the origins of all the old religions you should see that they all had a common theme, which was to convert chaos into order, to identify what makes a "good citizen". This was done by creating a set of rules or commandments such as "Thou shall not kill", "Thou shall not steal" and "Thou shall not bear false witness". The essence of all these rules can be summed up in a single sentence:

Do unto others as you would have them do unto you.

If you examine the origins of computer methodologies or styles you should see a similar theme - to bring order from chaos, to identify what makes a "good programmer". The earliest set of rules identified such things as "use meaningful names for both functions and variables" and "create a program structure where the flow of logic is easy to follow and understand". The essence of all these rules can be summed up in a single sentence taken from The Structure and Interpretation of Computer Programs, written in 1985 by H. Abelson and G. Sussman:

Programs must be written for people to read, and only incidentally for machines to execute.

Whilst a religion starts off with a sound and reasonable objective - how to be a good citizen - this is not good enough for some people who say "These rules were handed down to us by <enter deity of choice>. You can only worship <enter deity of choice> through us, you can only have an afterlife if you follow our rules, and to make us more important than we really are we will invent more rules". Thus they invent supplemental and totally artificial rules which dictate how to pray, when to pray, where to pray, what direction you should face while praying, what to wear, et cetera, ad infinitum, ad nauseam. Some of these people become extremists who believe that anyone who does not follow their religion is a heretic who should be punished most severely. It then becomes possible for the followers of a religion to obey the petty and artificial rules, which leads them to believe that they will ascend to heaven, yet they totally ignore the original purpose of that religion which was to help turn them into "good citizens" instead of barbarians. The world is full of religious fanatics who regularly pray to their deity, then go out and kill in the name of that deity. Whatever happened to "Thou shall not kill"?

The world of computer programming, in particular Object Oriented Programming, has also seen the rise of a similar "priesthood", a group of people who love to invent their own sets of supplemental and totally artificial rules for no other purpose than to make themselves appear to be superior in the eyes of the layman, the ordinary programmer. They issue diktats on trivial issues such as tabs vs spaces, camel case vs snake case, where to put curly braces, SOLID principles, the over-use of design patterns, et cetera, ad infinitum, ad nauseam. These people see themselves as the "paradigm police" whose sole purpose is to root out those who are not following their rules and to brand them as heretics. They claim that your software will not have a successful afterlife (i.e. have value in the market place) unless you worship at their altar and follow their rules. It then becomes possible for their followers to obey these petty and artificial rules, which leads them to believe that any software which they produce will automatically be "good". Yet they fail to realise that what they have produced is an over-engineered mess which has too many levels of indirection, which has become fragmented instead of modular, and which is far more difficult to read and understand, and therefore more difficult to maintain. This means that they have completely lost sight of the original rules which were supposed to turn them into "good programmers" instead of code monkeys.

Just like the religious fanatics who believe that the only way to get into heaven is to follow their religion, the OO fanatics believe that the only way to produce good software is to follow their version of "best practices". This leads them to believe that any programmer who does not follow their practices cannot produce software which is anything other than crap. This attitude is completely and utterly wrong, and is the product of a deranged mind. Just as it is possible for a person to follow a set of religious practices without being a "good citizen", it is possible for a programmer to follow a set of programming practices and still not produce "good software" which is readable and maintainable. The opposite is also true - it is possible for a person to completely ignore, or be totally unaware of, a particular set of religious practices and still be a "good citizen", and it is possible for a programmer to completely ignore, or be totally unaware of, a particular set of programming practices and still produce "good software".

Unlike religions, where the promise of an afterlife cannot be guaranteed (or even its existence be proved), in software development the "afterlife" is when that software moves from the hands of the developer into the hands of the end-user, the paying customer. What matters to the customer is functionality, speed of development, and cost of development. If two pieces of software provide the same functionality, and one was written "properly" to a set of extremist rules, but at twice the cost and twice the time of the other which was written "improperly" to a set of moderate rules, which do you think will be more appreciated by the customer? In the real world it is Return On Investment (ROI) - more bang for your buck - which is the deciding factor, not programming purity. Customers do not (or should not) care about which development techniques were used, just that the result is cost-effective.

Both religious fanatics and OO fanatics rely on too much dogma and too little common sense, which means that they put the following of rules in front of the results which are supposed to be obtained. A pragmatist, on the other hand, will concentrate on the results and ignore any artificial rules which get in the way. A pragmatist will reserve the right to question any rule whereas a dogmatist will not allow his rules to be questioned.

Another problem with dogmatists is that, as well as arguing with pragmatists, they even argue amongst themselves about whose interpretation or implementation is the most pure. They each think that their opinion is the right one, which leads to a lot of Holier-Than-Thou or More Catholic than the Pope arguments.

I am a pragmatist, not a dogmatist, so I put all my efforts into producing the best result that I can instead of blindly following some artificial and restrictive rules and assuming that the result will automatically be the best.

In his article Are You There, God? It's Me, Microsoft Jeff Atwood says the following:

Religion appears in software development in numerous incarnations-- as dogmatic adherence to a single design method, as unswerving belief in a specific formatting or commenting style, or as a zealous avoidance of global data. Whatever the case, it's always inappropriate.

Blind faith in one method precludes the selectivity you need if you're to find the most effective solutions to programming problems. If software development were a deterministic, algorithmic process, you could follow a rigid methodology to your solution. But software development isn't a deterministic process; it's heuristic, which means that rigid processes are inappropriate and have little hope of success. In design, for example, sometimes top-down decomposition works well. Sometimes an object-oriented approach, a bottom-up composition, or a data-structure approach works better. You have to be willing to try several approaches, knowing that some will fail and some will succeed but not knowing which ones will work until after you try them. You have to be eclectic.

Here are some other articles which explore the idea of software development and religious wars:

My critics fail to understand that sometimes I don't follow a particular practice/principle/rule simply because it is not appropriate for the type of applications which I develop. This could either make that practice completely redundant, and thus a violation of YAGNI, or produce a result which is not as optimum as it could be. If I can find a way that is better than your "best" then surely I should be applauded and not admonished.

Note that the sole measurement for judging what is "best" should be "that which produces the best results". This means that the software should be cost-effective, so that where several pieces of software have the same effect the one with the lowest cost should always be regarded as being better. Note that "cheaper but less effective" does not qualify. With software development it is the cost of the developer's time which is a crucial factor, and the best way to reduce the amount of time taken for a developer to write code is to write less code, which in turn can be achieved by utilising as much pre-written and reusable code as possible. Since the stated aim of OOP is to increase code reuse and decrease code maintenance, any practice which encourages the production of reusable code should be regarded as being better than any practice which does not. I encourage you to read Designing Reusable Classes which was published in 1988 by Ralph E. Johnson & Brian Foote for ideas on how this can be achieved.

Simply following a series of rules or common/standard practices is not enough on its own. Following rules blindly in a dogmatic fashion and assuming that the results you achieve will be the same as those achieved by others is the path to becoming nothing more than a Cargo Cult Programmer. You have to analyse a problem before you can design a solution, then you need to decide which practices to apply and how to apply them in order to achieve the best results. By "which practices" I mean that some rules or practices may be inappropriate for various reasons. Trying to build a modern web-based database application using practices which were created by people who had little or no experience with such applications is unlikely to set you on the path to success. Most of the rules, practices and principles which I have encountered were written in the 1980s by academics using the Smalltalk language, or something similar, but how many of these people used these languages to write enterprise applications with hundreds of tables and thousands of screens? Also, practices designed for programs using bit-mapped displays are not relevant for modern programs which use HTML forms.

Below are some of the rules, principles and practices which I consider to be inappropriate, so I ignore them:

"favour composition over inheritance" was devised by someone who didn't know how to use inheritance properly, which creates problems. The solution is to avoid deep inheritance hierarchies and to only inherit from an abstract class. Refer to Composite Reuse Principle for more of my thoughts on this matter.

"program to the interface, not the implementation" is meaningless as you cannot simply call an interface, you must call a method on an object which actually implements that interface. I have yet to see a code sample which proves that this idea has merit, and until I do I will dismiss it as bogus. If this is supposed to mean calling a method on an unknown object where the identity of that object is not provided until runtime, then as a description of how to use polymorphism it is pretty pathetic.

"software entities should be open for extension, but closed for modification" implies that, once deployed, you should not modify an object but extend it (using "extends" to create a subclass?), which sounds like it creates more problems than it solves. In the life of my framework I have performed numerous refactorings, and if I was forced to put each update in a separate subclass I would also have to change all references of the original class to the updated subclass. Refer to Open/Closed Principle for more of my thoughts on this matter.

"depend upon abstractions and not concretions" is vague because there are so many different interpretations of the word abstraction. It took me years to realise that what this meant was to define methods in an abstract class which could then be inherited by numerous subclasses, thus providing polymorphism, which then gives you the ability to swap from one subclass to another at run time. But what if I don't have multiple subclasses? Refer to Dependency Inversion Principle for more of my thoughts on this matter.

"each software module should have one and only one reason to change", but "reason to change" was found to be so inadequate and confusing that Uncle Bob had to produce a follow-up article to explain that what he meant was the separation of GUI logic, business logic and database logic. This is, in fact, the same thing as the 3-Tier Architecture which is less confusing to implement as it has a more precise and less ambiguous definition.

It should be obvious to every OO programmer that shared method names offer polymorphism while unique method names do not. Polymorphism provides the opportunity for more reusable code as it enables Dependency Injection.

"it should not be possible for an object to exist in an inconsistent state", where the word "state" is mistakenly taken to mean the data within an object when it actually means the condition of an object. The ONLY absolute rule regarding constructors is that after being executed the constructor should leave the object in a condition which will allow any of its public methods to be called. My full response to this schoolboy mistake can be found in Re: Objects should be constructed in one go.

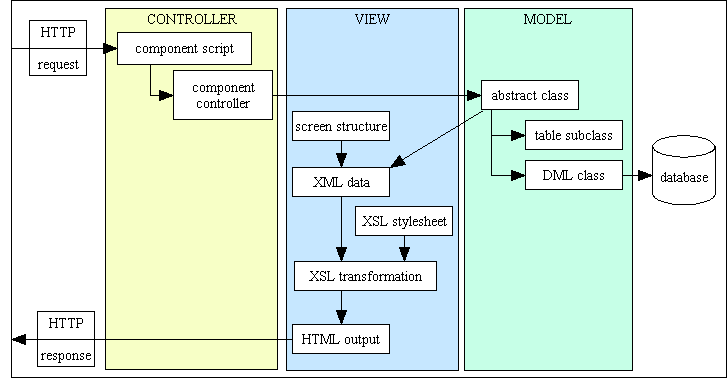

PHP was created to make it easy to create dynamic web applications, those which have HTML at the front end and an SQL database at the back end. I was involved in writing enterprise applications (database applications for commercial organisations) for 20 years before I switched to using PHP, so I knew how they worked. I had even created frameworks in two of those languages. All I had to do was to convert my latest framework to use PHP and the OO features which it offered in order to create as much reusable software as possible. The structure of my RADICORE framework can be pictured in Figure 4 below:

Figure 4 - A combination of the 3-Tier Architecture plus MVC

There is also a more detailed version available in Figure 1. This shows that the RADICORE framework uses a combination of the 3 Tier Architecture, with its separate Presentation layer, Business layer and Data Access layer, and the Model-View-Controller (MVC) design pattern. The following amounts of reusability are achieved:

Note also that any Controller can be used with any Model (and conversely any Model can be used with any Controller) because every method call made by a Controller on a Model is defined as a Template Method in the abstract class which is inherited by every Model. This means that if I have 45 Controllers and 400 Models this produces 45 x 400 = 18,000 (yes, EIGHTEEN THOUSAND) opportunities for polymorphism and therefore Dependency Injection.
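As a rough illustration of how one Controller can work with any Model, here is a minimal sketch. It is not the RADICORE code: the names `AbstractTable`, `Customer`, `Product`, `listController`, `preGetData`, `postGetData` and `fetchRows` are all hypothetical, and the database call is replaced by a stand-in so that the sketch runs without a database.

```php
<?php
// Hypothetical sketch: every name below is illustrative, not taken from RADICORE.

abstract class AbstractTable
{
    protected $tableName = '';

    // Template Method: a fixed sequence of steps shared by every Model.
    final public function getData(array $where): array
    {
        $where = $this->preGetData($where);   // hook, may be overridden
        $rows  = $this->fetchRows($where);    // stand-in for the data access layer
        return $this->postGetData($rows);     // hook, may be overridden
    }

    // Default hooks do nothing; a subclass overrides only what it needs.
    protected function preGetData(array $where): array { return $where; }
    protected function postGetData(array $rows): array { return $rows; }

    // Stand-in for a real database read (assumption made for this sketch).
    protected function fetchRows(array $where): array
    {
        return [['table' => $this->tableName] + $where];
    }
}

class Customer extends AbstractTable
{
    protected $tableName = 'customer';
}

class Product extends AbstractTable
{
    protected $tableName = 'product';

    // Custom processing slotted into the shared sequence.
    protected function postGetData(array $rows): array
    {
        foreach ($rows as &$row) {
            $row['checked'] = true;
        }
        unset($row);
        return $rows;
    }
}

// A "Controller" which knows only the abstract API; the Model is injected.
function listController(AbstractTable $model, array $where): array
{
    return $model->getData($where);
}

$customerRows = listController(new Customer(), ['id' => 1]);
$productRows  = listController(new Product(), ['id' => 2]);
```

The same `listController` serves both Models without change, which is the sense in which each Controller/Model pairing counts as an opportunity for polymorphism.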

I was able to produce a single View module which can produce the HTML output for any transaction as a result of my choice to use XSL Transformations and a collection of reusable XSL Stylesheets. This is coupled with the fact that I can extract all the data from a Model with a single call to $object->getFieldArray() instead of being forced to use a separate getter for each column, as discussed in Getters and Setter are EVIL.
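The benefit of passing data around as a single array can be sketched as follows. This is only an illustration under stated assumptions: `TableModel`, `setFieldArray` and `toXml` are hypothetical names, and the XML is built by simple string concatenation rather than by whatever mechanism the framework's View actually uses.

```php
<?php
// Hypothetical sketch: class and helper names are illustrative only.

class TableModel
{
    private $fieldArray = [];

    public function setFieldArray(array $data)
    {
        $this->fieldArray = $data;
    }

    // One call returns every column, whatever the table looks like.
    public function getFieldArray(): array
    {
        return $this->fieldArray;
    }
}

// A generic "View" helper: it needs no knowledge of individual column names,
// so the same function works for any Model.
function toXml(string $rootName, array $fields): string
{
    $xml = "<$rootName>";
    foreach ($fields as $name => $value) {
        $xml .= "<$name>"
              . htmlspecialchars((string) $value, ENT_XML1 | ENT_QUOTES)
              . "</$name>";
    }
    return $xml . "</$rootName>";
}

$model = new TableModel();
$model->setFieldArray(['id' => 7, 'name' => 'Nuts & Bolts']);
$xml = toXml('product', $model->getFieldArray());
```

With a separate getter per column, `toXml` would have to know the column names of every table in advance, which would make a single shared View impractical.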

There are some people who seem to think that as soon as a new feature becomes available in the language then all developers should immediately rush out and refactor all of their code for no good reason other than to appear current, fashionable, and not behind the times. They fail to understand that most new features are introduced in order to solve a particular problem, but if your code does not have that particular problem then it does not have any need for that particular feature. Also, as a fully paid-up member of the if it ain't broke, don't fix it brigade, I find it more cost-effective to spend my time on things that need my attention (read: earn me more revenue) than things that don't.

As a prime example take the introduction of autoloaders. The problem which this feature addresses is described in the opening paragraph as follows:

Many developers writing object-oriented applications create one PHP source file per class definition. One of the biggest annoyances is having to write a long list of needed includes at the beginning of each script (one for each class).

Not only do I never have to write a long list of includes at the beginning of each script, I never have to write any include statements at all, so I have absolutely nothing to be annoyed about. I don't have this problem, so I don't need this solution. While I do have some include/require statements scattered around my framework, some of them are for files of procedural functions, not classes, so they cannot be covered by autoloaders and are therefore irrelevant. When I generate application components using my framework I never have to write a single include/require statement as they are already built into the framework's components. Should I need to access an additional object then I use my singleton class which makes use of the old fashioned include_path directive.
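A singleton that relies on include_path could look something like the sketch below. The class name `Singleton`, the `<class>.class.inc` file naming convention and the temporary directory are assumptions made for illustration, not the framework's actual implementation.

```php
<?php
// Hypothetical sketch: the class name, file naming convention and directory
// are assumptions made for illustration only.

class Singleton
{
    private static $instances = [];

    public static function getInstance(string $class)
    {
        if (!isset(self::$instances[$class])) {
            if (!class_exists($class, false)) {
                // No directory is given, so PHP searches include_path.
                require_once "$class.class.inc";
            }
            self::$instances[$class] = new $class();
        }
        return self::$instances[$class];
    }
}

// Demo setup: write a throwaway class file into a temporary directory and
// add that directory to include_path so that the sketch runs anywhere.
$dir = sys_get_temp_dir() . DIRECTORY_SEPARATOR . 'demo_classes_' . getmypid();
if (!is_dir($dir)) {
    mkdir($dir);
}
file_put_contents(
    $dir . DIRECTORY_SEPARATOR . 'demo_invoice.class.inc',
    '<?php class demo_invoice { public $loaded = true; }'
);
set_include_path($dir . PATH_SEPARATOR . get_include_path());

$first  = Singleton::getInstance('demo_invoice');
$second = Singleton::getInstance('demo_invoice');
```

The calling code names the class it wants and nothing else; locating and including the file is handled in one place.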

This is not good enough for the paradigm police. They seem to think that autoloaders were introduced with the sole purpose of replacing every include/require statement, so wherever my code uses an include/require statement then I should feel obligated to immediately remove it and use an autoloader instead. This would require refactoring my code for no obvious benefit, so I cannot justify the effort required to undertake such a pointless exercise.

Another example is the use of namespaces which some people are now saying should be added to the PHP core as well as being a necessity within every PHP application. These people cannot read, otherwise they would see that this would be a violation of YAGNI. The reason for the addition of this feature is clearly stated in the manual as follows:

In the PHP world, namespaces are designed to solve two problems that authors of libraries and applications encounter when creating re-usable code elements such as classes or functions:

- Name collisions between code you create, and internal PHP classes/functions/constants or third-party classes/functions/constants.

- Ability to alias (or shorten) Extra_Long_Names designed to alleviate the first problem, improving readability of source code.

Note that the primary audience is stated as authors of libraries whose code may be plugged into an unknown application containing unknown source code with an unknown naming convention. To an intelligent person this should automatically exclude the following:

So why change your code to take advantage of a feature when that feature is inappropriate and unnecessary? That would not appear to be the action of an intelligent person. This topic is also discussed in Is there a case for adding namespaces to PHP core?

How many of you, when the short array syntax was introduced in version 5.4, immediately rushed out and refactored your code to replace all instances of the "old" syntax with the "new" alternative? According to the paradigm police you don't have a choice in the matter - a new feature has become available, so you are obliged to use it whether it has any benefits or not.
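For anyone unfamiliar with the feature, the two syntaxes produce identical results, as this small sketch shows (the variable names are arbitrary):

```php
<?php
// Both spellings build the same array; only the source syntax differs.
$old = array('id' => 1, 'tags' => array('a', 'b'));
$new = ['id' => 1, 'tags' => ['a', 'b']];

// Strict comparison: same keys, same values, same order, same types.
$same = ($old === $new);
```

Since the resulting arrays are indistinguishable at run time, replacing one form with the other changes nothing in the behaviour of the code.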

I personally find this attitude quite childish. I earn my living by selling licences for my enterprise application to large corporations, and by providing customisations, support and training, so once I have written a piece of code and released it to my customers then I don't look at it again until I have a very good reason, such as to fix a bug or include an enhancement. Unnecessary refactoring is not the kind of work where I can pass off the costs to my customers as there would be no visible benefit. If I have the choice between doing unnecessary work that earns me nothing and working on a client's project which is going to pay me tens of thousands of pounds, then which one do you think I would put first?

I am not the only one who thinks that using the latest features in the language simply because they are shiny and new may not actually be a good idea. This is known as the Magpie Syndrome and is discussed by Matt Williams in his article as well as Does Your Team Have STDs?

I write code which is functional, not fashionable. Fashions come and go with alarming regularity, but software which performs useful functions will always have a market place. Customers are more appreciative of software which performs useful functions and do not care one jot about the style in which it was written. When it comes to writing software which ticks all the right boxes I'm afraid that the views of the paying customers have greater priority with me than the views of the "paradigm police".

When my critics hear that, rather than being a relative newcomer to the programming world, I spent several decades working with other languages such as COBOL and UNIFACE before switching to PHP in 2002, they immediately accuse me of being a dinosaur who is stuck in the past, or a Luddite who is resistant to technological change. The facts say otherwise. My first encounter with a web application was a complete disaster as UNIFACE was not designed for web development, so what did I do? A true Luddite would have stuck to the old technology and resisted the new, but I did the exact opposite. I taught myself HTML and CSS to see what it could do, then searched for a language which was built for web development and was easy to learn. I chose PHP, and this is a choice that I have never regretted. I encountered XML while working with UNIFACE, and I learned of XSL transformations, but whereas UNIFACE could not generate web pages using XML and XSL I quickly learned that PHP could, so I rebuilt my UNIFACE framework in PHP and got it to produce all its web pages from a library of reusable XSL stylesheets. My framework started with Role Based Access Control, but then I added in Audit Logging and an activity based Workflow system. I later designed and built a Data Dictionary which included the ability to generate class files and user transactions from my catalog of Transaction Patterns.

So rather than resisting a change in technologies I actually embraced it and redeveloped my old framework so that I could continue to develop enterprise applications, but in the new way. Your complaint is actually something else entirely - it concerns the techniques which I have used to implement the new technologies and not the technologies themselves. You are acting on the erroneous assumption that "there is only one way to do it, and I'm going to tell you what that is". Programming is an art, not a science. It relies on a person's creativity and not the blind following of sets of pre-conceived rules. If you give the same problem to 100 different programmers you will get 100 different solutions. Some of the differences may be small, but others could be huge. Why is this? Each of us is an individual, with an individual way of thinking. We can look at the same problem and perceive it in different ways, which leads us to devise different solutions which we then try to implement in our own individual ways. By telling me that I must not be different you are attempting to stifle my creativity in order to maintain the status quo, in order to protect your ideas from being revealed as not the best after all. Progress cannot be made by doing the same old thing in the same old way. Progress is made through innovation, not imitation, and the first step on the road to progress is to try something different.

So stop telling me that I am not allowed to be different as your words are falling on deaf ears.

The following are from an article which I wrote in December 2003 called What is/is not considered to be good OO programming:

The following are from an article which I wrote in November 2004 called In the world of OOP am I Hero or Heretic?:

The following are from an article which I wrote in December 2004 called Object-Oriented Programming for Heretics: