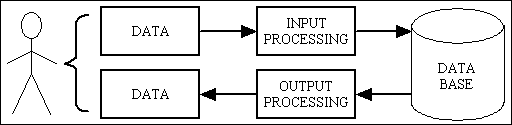

Figure 1 - A Data Processing System

This article is about writing database applications using Object Oriented Programming (OOP). I have been designing and building database applications since the late 1970s/early 1980s. My first programming language was COBOL followed by UNIFACE, but in 2002 I decided to switch from desktop applications to web applications for which I chose PHP. This was also the first language I had used with Object Oriented (OO) capabilities. When I rebuilt my development framework in PHP using its OO features I found the transition very easy and the results were better than I expected.

I have noticed that there is a huge difference between my approach and the approach that is being taught in numerous online articles, tutorials and books. My many critics keep telling me that my approach is so wrong that it borders on the heretical. This criticism can be boiled down into the following statements:

Bad software is difficult to read, difficult to maintain, difficult to enhance, full of bugs, does not satisfy user requirements, is not cost-effective, et cetera, ad infinitum, ad nauseam. People who have actually used my RADICORE framework to build database applications will tell a different story. I myself have used it to build several applications, and I can tell you that it works, it works very well, and has done for over a decade.

This leads me to believe that what is being taught today as OO theory is nothing more than a pile of pooh which is preventing whole generations of newbies from becoming competent programmers. If there are problems with putting a theory into practice then you should be prepared to examine the theory itself and not just the way in which it is being implemented. I judge the efficacy of my work on its ability to produce results which satisfy my paying customers and not how well it follows a bunch of artificial rules and satisfies a bunch of dogmatic box tickers. If I can produce a better result by breaking a rule then I will do so. After all, if a rule is supposed to promote "good" software and I can still produce good software after ignoring that rule, then what value does that rule really have?

Too many of today's OO advocates are operating under the illusion that their precious OO theory is at the center of the universe and that everything else revolves around it, or is a mere "implementation detail". This is pure bunkum of the highest order. I designed and built many database applications in the 20+ years before I switched to an OO-capable language, and I can tell you quite categorically that in such applications it is actually the database design which is the center of the universe and that everything else revolves around it. In such applications the database is king and it is the software which is an implementation detail. Get the database design wrong and you have an uphill battle trying to produce the right results. Get the database design right, and structure your software around that design, and writing the software will be a walk in the park. Been there, done that, bought the t-shirt. This sentiment is echoed in the following quote:

Smart data structures and dumb code works a lot better than the other way around.

Eric S. Raymond, "The Cathedral and the Bazaar"

When writing a software application, regardless of its type, the code will be interacting with something outside of itself. This "something" may be a physical device, either mechanical or electrical, it may be an image file or a sound file, or it may be a database. Whatever this "something" is, it is important to note that the software should be modelled on it so that it can interact with it as logically and simply as possible. For example, you would not take the design for a building's elevator control system and use it as the design for an avionics system inside an aircraft. The design of the solution should be aligned around the problem, otherwise you will always have an uphill struggle.

The problem in the current world of OO is that everyone is taught that, regardless of the type of application being built, the software must ALWAYS be designed using the principles of Object-Oriented Design (OOD). This is supposed to be because it enables you to "model the real world". This is an interesting idea, but like most of the concepts in OOP it can be mis-interpreted and mis-applied in ridiculous ways. The article "Don't try to model the real world, it doesn't exist" contains the following observation:

It is not possible for anyone to model the actual real world, only their perception of the real world.

You should also remember that you never model the whole of the real world, only those bits which are necessary to complete the task in hand.

Anyone who has ever worked with databases will tell you that the database itself must be designed using the principles of database normalisation, which then forces the unwitting application designer to use two different design methodologies for the same application - one for the database, and another for the software which accesses that database. This always leads to a condition known as Object-Relational Impedance Mismatch for which the universal cure is an abomination called an Object-Relational Mapper (ORM). I do not use OOD, I do not have the mismatch problem, so I don't need an ORM.

I was not aware that OOD existed when I started to write programs using the principles of OOP, so I never wrote software which hid the fact that it was communicating with a relational database. I write enterprise applications which do nothing but communicate with databases, and these applications contain thousands of tasks (user transactions) each of which does something to one or more database tables in order to achieve its result. Each task is therefore designed and developed around the fact that it is required to perform actions on one or more database tables, and the best result is achieved by ignoring completely the concepts of OOD and such things as the SOLID principles and writing code which knows that it is talking to a database. I wrote my RADICORE framework specifically to help me build and run database applications, and it does not matter how many tables are in the database or what they represent - they are all database tables which can be accessed in exactly the same way. This is why each of my 450+ concrete table classes inherits so much reusable code from my abstract table class. This is why the Transaction Patterns I use on one table can be reused on other tables - the operations are identical, it is just the tables and their contents which are different.
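The idea of one abstract table class shared by many concrete table classes can be sketched roughly as follows. This is only an illustration, assuming PHP 7.4 or later: the class names, method names and the in-memory array that stands in for the database are all hypothetical and are not taken from RADICORE.

```php
<?php
// Hypothetical sketch: these names are illustrative, not RADICORE's actual API.
// An in-memory array stands in for the real database.
abstract class AbstractTable
{
    protected string $tableName = '';
    protected array $requiredFields = [];
    private array $rows = [];

    // Shared by every table: validate the input, then store it.
    public function insertRecord(array $fieldArray): array
    {
        foreach ($this->requiredFields as $field) {
            if (!isset($fieldArray[$field]) || $fieldArray[$field] === '') {
                throw new InvalidArgumentException("{$this->tableName}.{$field} is required");
            }
        }
        $this->rows[] = $fieldArray;
        return $fieldArray;
    }

    // Shared by every table: return whatever has been stored.
    public function getData(): array
    {
        return $this->rows;
    }
}

// A concrete class only supplies what is different about its table.
class Customer extends AbstractTable
{
    protected string $tableName = 'customer';
    protected array $requiredFields = ['customer_id', 'name'];
}

class Product extends AbstractTable
{
    protected string $tableName = 'product';
    protected array $requiredFields = ['product_id', 'description'];
}

// One piece of calling code works with any table because the operations are identical.
function addRecord(AbstractTable $table, array $input): int
{
    $table->insertRecord($input);
    return count($table->getData());
}
```

Calling `addRecord(new Customer(), [...])` and `addRecord(new Product(), [...])` runs exactly the same inherited code; only the table name and the list of required fields differ.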

In my early days as a programmer I was exposed to various sets of programming standards which varied in quality from "workable" to "sub-standard". Each organisation had its own standards, and sometimes different teams within the same organisation had their own standards. This prompted me to write Development Standards - Limitation or Inspiration?. I started to document my own personal standards which, in the light of actual experience, avoided the mistakes made by others. When I later became a team leader in another company my personal standards were adopted as the official company standards. The whole team was asked to vote on the standards, and mine were chosen by common consent.

When I became team leader I also had the opportunity to begin creating libraries of reusable code. Until that point my only option had been to copy and paste whole sections of code from one program to another as nobody in the team either recognised the need for such libraries or knew how to build and maintain them. These libraries were documented in Library of Standard Utilities and Library of Standard COBOL Macros.

This set of libraries was upgraded to a framework in 1985. This happened because a client asked for a system with dynamic menus instead of static ones, as well as a method of enforcing role based access control. In order to implement this I had to design a MENU database as well as a set of maintenance programs. I completed my design in a few hours one Sunday afternoon, and by Friday I had a working implementation. I later rewrote this framework in UNIFACE and again in PHP for which I also produced a structure document. If you don't know the difference between a "library" and a "framework" then please read What is a Framework?

While working with UNIFACE I was introduced to XML documents and XSL Transformations, but although it was possible to transform XML documents into HTML pages the authors of UNIFACE chose to use their own proprietary mechanism which I felt was too clunky and less flexible.

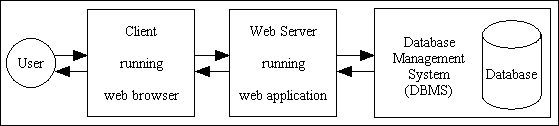

With COBOL all development was done using the 1-Tier Architecture in which the user interface, business logic and data access logic were all contained within a single component. We did not have the entire application in a single program, we had a separate component for different areas of the application. When I switched to UNIFACE v5 I was introduced to the 2-Tier Architecture in which all data access logic was split off into a separate component which was part of the language. This enabled the DBMS to be easily switched from one to another without having to change any business logic. When UNIFACE version 7.2.06 was released this was upgraded to the 3-Tier Architecture as it supported separate components for the Presentation (UI) layer and Business layer. A single component in the Business layer could be shared by any number of components in the Presentation layer. The built-in Data Access component was a blessing because developers did not need to write any code to access the database, but it was also a curse as it could only generate simple queries without JOINs. If anything complicated was needed it meant either using a database view or a stored procedure.

By "database application" I mean an application whose sole purpose is to allow one or more users to interact with the contents of a database. Such applications may also be known as enterprise applications as they are business-facing web applications rather than public-facing web sites, and are usually concerned with the manipulation of business data for organisations, both large and small, for such topics as sales order processing, purchase order processing, accounting, invoicing, payroll, inventory, shipments and such. This excludes software such as that which is embedded in or interfaces with hardware objects, or which deals with games, video/audio manipulation, compilers, operating systems and device drivers.

Having written database applications for 20+ years before using an OO-capable language I was familiar with database design and data normalisation. I also learned about Structured Programming and, courtesy of Michael A Jackson, that the software structure should follow the database structure as closely as possible. Imagine my surprise when I was told that "proper" OO requires the use of OO design and that the database design can be dismissed as merely an "implementation detail".

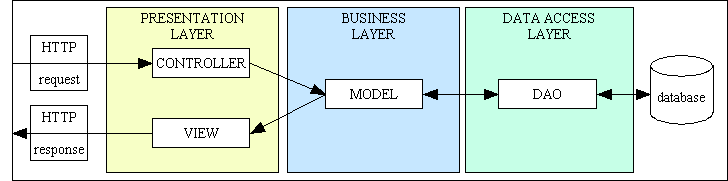

In the early days the business of software was referred to as Data Processing (DP) instead of the more modern Information Technology (IT), and what we wrote were called Data Processing Systems. Bearing in mind that in this context a system is something which takes input and processes it in some way to produce output, a data processing system can be represented by the diagram in Figure 1:

Figure 1 - A Data Processing System

In this example a user (person) enters data into the system using electronic forms on an input device, and that data is processed in some way before it gets stored in a database. Data can also be extracted from the database, then processed in another way before it gets displayed on the user's device. In the early days the software applications were compiled and run on a server, and communicated with the user via green screen or dumb terminals which operated in either character mode or block mode. Modern systems use intelligent PCs as their monitors which allows for the following possibilities:

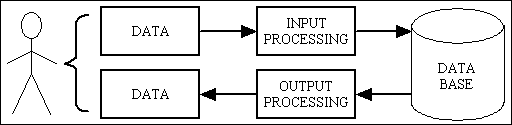

Figure 2 - A Web Application

It is also possible for the human being to be replaced by another computer system which can access the application via web services. This involves the transmission and receipt of documents in either XML or JSON format which have superseded the earlier EDI formats such as EDIFACT.

In my long career I have dealt with such storage systems as flat files, indexed files, Hierarchical databases (such as Data General's INFOS), Network databases (such as Hewlett Packard's IMAGE/TurboIMAGE), and Relational databases such as MySQL, PostgreSQL, Oracle and SQL Server. I am therefore no stranger to the concept of Data Normalisation and Entity-Relationship (ER) models.

If you look at Figure 1 you will see that at one end of the "system" is a user with a device which displays data using some sort of forms-based mechanism (such as compiled GUIs or HTML pages), while at the other end the data is stored (or persisted) in a mechanism known as a database. In between the two sits the software application which deals with the data as it flows between the two ends, and deals with any business or formatting rules on the way. The language and/or paradigm which is used to create this software should be completely irrelevant as the results - what is shown to the user and what appears in the database - should always be the same.

In my early days it was quite common to produce software as a series of components or modules which could be worked on separately, and which could be linked together to form a whole application. Each of these modules contained the code for dealing with the user interface, business rules, and database access logic in a single unit, but while working with UNIFACE I was exposed to the 2-Tier Architecture and then the 3-Tier Architecture which splits the code into separate parts, also known as layers or tiers, where each part deals with a separate area of logic. This structure is shown in Figure 3:

Figure 3 - the 3 Tier Architecture

After using this structure for a while its benefits became obvious to me, which is why I chose to continue using it when I switched to a different language.
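A very small sketch of this separation, assuming PHP 7.4 or later, might look like the following. The class and function names are hypothetical, and an array stands in for the DBMS so that the example is self-contained.

```php
<?php
// Hypothetical sketch of the three layers; an array stands in for the DBMS.

// Data Access layer: the only code that knows how the data is stored.
class DataAccessObject
{
    private array $tables = [];

    public function insert(string $table, array $row): void
    {
        $this->tables[$table][] = $row;
    }

    public function select(string $table): array
    {
        return $this->tables[$table] ?? [];
    }
}

// Business layer: applies the business rules, knows nothing about HTML or SQL.
class Order
{
    private DataAccessObject $dao;

    public function __construct(DataAccessObject $dao)
    {
        $this->dao = $dao;
    }

    // Returns a list of error messages; an empty list means the row was stored.
    public function add(array $row): array
    {
        $errors = [];
        if (($row['quantity'] ?? 0) <= 0) {
            $errors[] = 'quantity must be greater than zero';
        }
        if ($errors === []) {
            $this->dao->insert('order', $row);
        }
        return $errors;
    }

    public function listAll(): array
    {
        return $this->dao->select('order');
    }
}

// Presentation layer: turns business data into HTML, contains no business rules.
function renderOrders(Order $order): string
{
    $html = '<ul>';
    foreach ($order->listAll() as $row) {
        $html .= '<li>' . htmlspecialchars($row['item']) . ' x ' . (int) $row['quantity'] . '</li>';
    }
    return $html . '</ul>';
}
```

Because only `DataAccessObject` knows where the data lives, it could be replaced with a class that talks to a real DBMS without touching `Order` or `renderOrders()`, and the same `Order` object could serve any number of presentation components.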

When I came to learn OOP in late 2001 and early 2002 the resources which were available on the internet were very small in number and far less complicated. All I had to go on was a description of what made a language object oriented in this Wikipedia article from October 2001 which stated the following:

Object Oriented Programming (OOP) is a software design methodology, in which related functions and data are lumped together into "objects", convenient metaphors, often mirroring real world things or concepts.

To do OO programming you need an OO-capable language, and a language can only be said to be object oriented if it supports encapsulation (classes and objects), inheritance and polymorphism. It may support other features, but encapsulation, inheritance and polymorphism are the bare minimum. That is not just my personal opinion, it is also the opinion of the man who invented the term. In addition, Bjarne Stroustrup (who designed and implemented the C++ programming language), provides this broad definition of the term "Object Oriented" in section 3 of his paper called Why C++ is not just an Object Oriented Programming Language:

A language or technique is object-oriented if and only if it directly supports:

- Abstraction - providing some form of classes and objects.

- Inheritance - providing the ability to build new abstractions (classes) out of existing ones.

- Runtime polymorphism - providing some form of runtime binding.

So, according to those experts, a computer language can only be said to be Object Oriented if it provides support for the following:

| Term | Description |
| --- | --- |
| Class | A class is a blueprint, or prototype, that defines the variables (data) and the methods (operations) common to all objects of a certain kind. Can be instantiated into an object and extended to form a new class. |
| Object | An instance of a class. A class must be instantiated into an object before it can be used in the software. More than one instance of the same class can be in existence at any one time. |
| Encapsulation | The act of placing data and the operations that perform on that data in the same class. The class then becomes the 'capsule' or container for the data and operations. This binds together the data and the functions that manipulate the data. More details can be found in Object-Oriented Programming for Heretics |
| Inheritance | The reuse of base classes (superclasses) to form derived classes (subclasses). Methods and properties defined in the superclass are automatically shared by any subclass. A subclass may override any of the methods in the superclass, or may introduce new methods of its own. More details can be found in Object-Oriented Programming for Heretics |
| Polymorphism | Same interface, different implementation. The ability to substitute one class for another. By the word "interface" I do not mean object interface but method signature. This means that different classes may contain the same method signature, but the result which is returned by calling that method on a different object will be different as the code behind that method (the implementation) is different in each object. More details can be found in Object-Oriented Programming for Heretics |
| Abstraction | The process of separating the abstract from the concrete, the general from the specific, by examining a group of objects looking for both similarities and differences. The similarities can be shared by all members of that group while the differences are unique to individual members. The result of this process should then be an abstract superclass containing the shared characteristics and a separate concrete subclass to contain the differences for each unique instance. More details can be found in Object-Oriented Programming for Heretics |
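These definitions can be illustrated with a few lines of PHP. The following is only a sketch, assuming PHP 7.4 or later, and every class and function name in it is hypothetical.

```php
<?php
// Hypothetical sketch of encapsulation, inheritance and polymorphism.

// Abstraction: the shared characteristics live in an abstract superclass.
abstract class BaseDocument
{
    // Encapsulation: the data and the operations on it sit in the same class.
    protected array $lines = [];

    public function addLine(string $text): void
    {
        $this->lines[] = $text;
    }

    // Same method signature in every subclass, different implementation.
    abstract public function output(): string;
}

// Inheritance: each subclass reuses addLine() and supplies only what differs.
class PlainTextDocument extends BaseDocument
{
    public function output(): string
    {
        return implode("\n", $this->lines);
    }
}

class HtmlDocument extends BaseDocument
{
    public function output(): string
    {
        $items = array_map(
            fn(string $line): string => '<p>' . htmlspecialchars($line) . '</p>',
            $this->lines
        );
        return implode('', $items);
    }
}

// Polymorphism: the caller does not care which subclass it has been given.
function publish(BaseDocument $doc): string
{
    $doc->addLine('Hello');
    $doc->addLine('World');
    return $doc->output();
}
```

Passing a `PlainTextDocument` to `publish()` yields two lines of text, while passing an `HtmlDocument` yields two `<p>` elements, even though the calling code is identical.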

But why make the switch from procedural to OO programming? What are the benefits? After reading a few more articles on the internet I came across various descriptions which I summarised into the following:

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

Since those early days a lot of people have added to the definition of OOP, but as far as I am concerned all these additions are nothing but optional extras, and as I can find no practical use for any of them I choose to ignore them. This upsets a lot of purists who seem to believe that by not incorporating all these optional extras into my applications I am not following "best practices", not obeying the "rules", that I do not understand OOP, that I am not a "proper" OO programmer, that my work must therefore automatically be inferior. What a load of rubbish! I write code which produces results, not code which follows an arbitrary set of artificial rules. I write code to please my paying customers, not to impress my fellow developers. I do not write code using any of these "optional extras" simply because I can write effective software without them. I have looked into using some of them, but as they would not add measurable value to my code I have to ask myself "why bother?"

The simple answer is that Too Many Cooks Spoil the Broth. Once you get an ever-expanding group of people deciding on how something should be done, such as deciding on a particular recipe, you will always get multiple and different opinions, with the person with the loudest voice/biggest mouth coming out on top. This is different from Many Hands Make Light Work which refers to implementing a design decision. Getting a hundred men to dig a trench is easy, but getting a hundred men to decide where to dig the trench, and how deep and how wide it should be, is a different matter altogether.

While the original concept of OO was perfectly sound, over the years many different "cooks" have added their personal opinions to the recipe and turned it into a humongous mess. It is interesting to note that the languages which were originally used to demonstrate OO concepts, such as SIMULA and SMALLTALK, never made it as mainstream languages. This would indicate to me that their implementations may have been theoretically pure, but were not accepted for practical reasons. The first object-oriented language to be widely used commercially was C++ which was created by starting with the already widely-used C language, which was procedural, and adding in OO capabilities. The original version was called "C with classes".

While this procedural language was enhanced with the addition of encapsulation, inheritance and polymorphism, other features that already existed in the language, or were added later, were taken by some programmers to be part of the OO paradigm and therefore considered to be essential to OO programming. These include the following:

Other OO languages, such as Java, were developed from scratch, but the ability to write procedural code was removed with the idea that everything is an object. Other features were added on top of encapsulation, inheritance and polymorphism, and it has been assumed by too many developers that these additional features are essential in order to do OOP properly. In my opinion they are not essential, they are nothing more than optional extras.

It is interesting to note that Alan Kay, who invented the term "Object Oriented", had this to say about these implementations of his idea:

Having spent several decades writing database applications for the enterprise with non-OO languages, when I wanted to switch to providing such applications for the web I looked for an easy-to-use yet popular web-capable language. I did not like the look of Java, so I chose PHP. I have never regretted this choice. I started with PHP 4 which was based on a successful procedural language, but which included full support for the essential characteristics of OO which were (and still are) nothing more than Encapsulation, Inheritance and Polymorphism.

There are many different languages now available which provide support for OOP. They are different simply because they were designed by different people with different objectives in mind. There is no such thing as a "one size fits all" language, which also means that there is no such thing as a "one size fits all" implementation of OOP. Some languages are compiled while others are interpreted. Some languages are multi-purpose while others have only a single purpose. Some languages are statically typed while others are dynamically typed. Java, for example, is multi-purpose, compiled and statically typed while PHP is limited to web applications, interpreted and dynamically typed. Because these languages are different they tend to do similar things in different ways with different syntax. Some things which are necessary in some languages are either optional or non-existent in others.

One of the most disturbing things I have noticed with the PHP language from version 5 upwards is that new OO features have been added for no reason other than "other languages have X, so PHP should have X too". These people fail to realise that their previous language may have included feature X either because some bright spark thought that it would be clever, it would save a few keystrokes, or because it solved a problem that existed in that particular time with that particular language. A typical example of this is object variables which were devised to get around a problem with compiled programs and slow processors in the 1980s which no longer exists in the 21st century. PHP 4 did not support interfaces, so they were not important for OOP, yet they were added in PHP 5 to placate the OO purists even though they are totally unnecessary. This is what Rasmus Lerdorf, who invented PHP, had to say about this on the internals list:

Rather than piling on language features with the main justification being that other languages have them, I would love to see more focus on practical solutions to real problems.

Here is a quotation taken from the Revised Report on the Algorithmic Language Scheme.

Programming languages should be designed not by piling feature on top of feature, but by removing the weaknesses and restrictions that make additional features appear necessary.

I firmly believe that programming is an art, not a science, which means that a person must have the right artistic talent or aptitude to begin with otherwise they are wasting their time, they are flogging a dead horse, they are barking up the wrong tree. This means that it is simply not possible for an artist to document what he does in such a way that he can pass on that talent to a non-talented individual. Yet there are too many people out there who seem to think that they can do just that, which is why they try to break down the art of programming into a series of "rules", "principles" and "best practices". They seem to think that they can reduce artistic skill to a series of items on a sheet of paper which can be ticked off one by one, and if they come across something which does not tick all the right boxes they automatically assume that it is faulty or incorrect. The opposite is also true - if they come across something which does tick all the right boxes, which does follow their "rules" or "principles", then they automatically assume that it must be correct. This is pure fallacy. This sentiment was echoed in the blog post When are design patterns the problem instead of the solution? in which T. E. D. wrote:

My problem with patterns is that there seems to be a central lie at the core of the concept: The idea that if you can somehow categorize the code experts write, then anyone can write expert code by just recognizing and mechanically applying the categories. That sounds great to managers, as expert software designers are relatively rare.

The problem is that it isn't true. You can't write expert-quality code with only "design patterns" any more than you can design your own professional fashion designer-quality clothing using only sewing patterns.

As far as I can see the programming world is populated by two kinds of artist - true artists and con artists. A true artist is able to describe what he does in simple terms, whereas a con artist will try to dress it up in flowery language in order to disguise his lack of understanding and/or ability. These are members of what I call the Let's Make It More Complicated Than It Really Is Just To Prove How Clever We Are brigade. The trouble is that a statement or concept which starts off in a simple and unambiguous form can be reprocessed by a series of these con artists and end up looking nothing like the original. This is similar to the game of Chinese Whispers. If you look at Abstraction, Encapsulation, and Information Hiding by Edward V Berard of the Object Agency you will see where different authors have published different descriptions for certain basic OO concepts, but by using different words they have produced different interpretations which, after numerous iterations and re-interpretations, eventually end up as mis-interpretations and mis-representations. Instead of offering clarification they have muddied the waters. Instead of keeping things simple they have added unnecessary layers of complexity.

This leads me to believe that my implementation of OOP has been more successful simply because I started off with the original, basic and valid descriptions of OOP and was not distracted or led astray by the numerous mis-interpretations which followed, mainly because I did not know that so many mis-interpretations actually existed and had never read them. Now that I do know, I can dismiss them as the work of charlatans and con artists because my decades of experience have shown me that I have been able to use the bare-bones principles of OOP - that of encapsulation, inheritance and polymorphism - to meet the objectives of OOP - to increase code reuse and decrease code maintenance.

Now look at the following quotes from various wise men:

1. Some people know only what they have been taught, while others know what they have learned.

2. Experience is not what you've done, but what you've learned from what you've done.

3. To effectively apply practices, you need to understand the principle, but to understand the principles, you need to practice!

4. Experience helps to prevent you from making mistakes. You gain experience by making mistakes.

My path to OOP has been comprised of the following:

Now compare that with the path of today's novice programmer:

Jacob Gabrielson had this to say in his article How poopy is YOUR code?:

The problem is that programmers are taught all about how to write OO code, and how doing so will improve the maintainability of their code. And by "taught", I don't just mean "taken a class or two". I mean: have it pounded into their heads in school, spend years as a professional being mentored by senior OO "architects" and only then finally kind of understand how to use it properly, some of the time. Most engineers wouldn't consider using a non-OO language, even if it had amazing features ... the hype is that major.

If you think of the contents of a programming language - its syntax, expressions, operators, control structures and functions - as being similar to the contents of a children's construction set, you should see that they both consist of tiny building blocks or component parts which can be assembled in a multitude of different ways in order to produce a variety of different results. While each component has a particular function and may have certain rules when it comes to interacting with other components, when it comes to assembling them in order to produce something there are absolutely NO rules when it comes to how that "something" may be assembled. This is entirely up to the imagination of the budding constructor and is NOT limited by the imagination of the person who devised the construction set. By telling their students that there is only one way to assemble the language components into a finished piece of software the teachers are overstepping the mark and actually failing in their duty:

The result of this substandard teaching is a bunch of Monkey See, Monkey Do programmers who know nothing except Cargo Cult Programming. These people will become expert in writing applications that fail, that go over budget, that are monsters to develop and monsters to maintain.

When I write software I only allow myself to be constrained by three things:

I refuse to be constrained by the limitations of someone else's intellect as that would be like going back to a bygone age. Progress only comes from innovation, not imitation, which means that trying different methodologies or techniques should be encouraged and not discouraged. Those who criticise me for being "different" are therefore like Luddites who resist the march of progress.

If you have ever heard the expression learn to walk before you run you should realise that it would be best for students to learn how to write software using the bare essentials before trying and evaluating each of those optional extras. I emphasise "and evaluate" because each of those optional extras should be individually tested in order to see what effort is required to implement them and what benefits, if any, are actually obtained. It is not sufficient to use a feature "because it is there", it should only be used if it is actually proven to add value to the result.

In his article Talkers and Doers Chris Baus identifies two types of people in the world of software:

In this article he points out the following:

Software isn't about methodologies, languages, or even operating systems. It is about working applications.

...

As an application developer, when you evaluate a new tool or technique, you should always ask yourself, "How can this make my application better, or help me develop it more quickly?" If you can't quantify the advantages of a tool, your time is probably better spent actually building software.

I have followed that advice by creating working software using the simplest of techniques, yet I am constantly being berated for being "too simple" and for not following the advice given to me by all these architecture astronauts. They tell me that my software must be wrong because it's breaking all their rules, but they fail to realise that it works (and sells!), therefore it cannot be wrong.

An open-minded person would assess the validity of a different interpretation by comparing its results with what they have produced themselves. But my critics are not open minded. They are dogmatists who believe that what they have been taught is the "only way, the one true way" and that anybody who strays from the path of righteousness is a deviant and a heretic. Such people then struggle to combine the concepts of OOP and database theory because they do not realise, or do not accept, that database theory is superior to OO theory every day of the week. When they see what I have done they don't say "It's different, but it works, therefore it cannot be wrong". Instead they say "It's different, but as it is not allowed to be different it must be wrong".

When I came to build my new framework in PHP I had three particular objectives in mind:

After downloading and installing PHP onto my home computer I quickly ascertained that PHP was perfect for the job. It was purpose-built for web applications, was already widely used, was easy to learn due to the large number of online resources available, and it was open source and therefore free to use. I soon discovered how easy it was to write the code to deal with creating XML documents and performing XSL transformations, so I felt that I was heading in the right direction.

When it came to the 3-Tier Architecture I knew that the PHP language did not contain any functions which mentioned that architecture, so it was all down to how I split up my code.

After reading the PHP manual and how it supported OOP I could see how to deal with encapsulation, inheritance and polymorphism, so I started to write the code to deal with my first database table. The first thing that I noticed was that OOP automatically forces you to use at least two components - a class which contains methods and properties, and another component which instantiates that class into an object, then calls various methods on that object in order to obtain a result. I could immediately see that one of these two components would sit comfortably in the Presentation layer while the other would sit in the Business layer. I was not bothered about creating a separate Data Access Object at this point as I first wanted to create code that worked before I split it off into its own component.

Why did I start by creating a class to deal with a single database table instead of a group of tables? Why not? My decades of experience had taught me that an enterprise application consists of large numbers of database tables each with their own set of columns, then has large numbers of user transactions or tasks each of which does something with one or more of those tables. The idea of creating a class which was not directly associated with a single database table never entered my mind as I could not see any logical reason for it. In a database each table is a separate entity in its own right, and there is no such concept as a group of tables (except for grouping tables in different databases). There is no concept such as being forced to go through TableA to get at TableB. As far as I am concerned when I am writing a database application I am writing software which interacts with objects in a database, not physical objects in the real world, and database objects are called "tables". If a table holds data about a real-world object called "Person" I do not concern myself with the properties and methods of a real-world person as I am only interested in the properties and methods that apply to the "Person" table. Every object in the database is a table regardless of what real-world entity it represents. Every table has properties which are called "columns", and every table shares a standard set of operations named INSERT, SELECT, UPDATE and DELETE (often referred to as CRUD), so in my application I have a software object (class) for each table, and each table shares the same method names. What could be more logical than that?

You should also be aware that in the real world there are two types of object:

Regardless of whether a real-world object is active or inactive, alive or inert, in the database it is just a table, and can be operated on just like every other table. It cannot do anything by itself, it cannot generate requests, it can only respond to requests from external sources.

I also avoided the mistake of reducing the Presentation layer component to nothing but a call to a single $object->execute() method and having the Business layer component do everything itself. Why did I avoid this? Because it would have violated the reasoning behind the 3-Tier Architecture.

When I created the methods for this table class it was also a no-brainer for me. Anyone who knows how databases work knows that there are only four operations that can be performed on a table - Create, Read (Select), Update and Delete - which are commonly referred to as CRUD. I did not make the mistake that I have seen so many others make by having separate methods to load, validate and store the data - I have a single insertRecord(), getData(), updateRecord() and deleteRecord() method which calls a series of sub-methods each of which performs a separate processing step. You might understand this better by looking at some UML diagrams.
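As a rough illustration of one public method per CRUD operation, here is a minimal sketch. It is not the framework's actual code: the class name `SketchTable`, the private `validate()` step, the "name is required" rule and the in-memory `$rows` array (standing in for a real database) are all assumptions made so that the example can run on its own.

```php
<?php
// Hypothetical sketch: one public method per CRUD operation.
// An in-memory array stands in for the database table.
class SketchTable
{
    public array $errors = [];   // validation messages from the last call
    private array $rows = [];    // stand-in for the database table
    private int $nextId = 1;

    // Create: validate, then store, in a single call.
    public function insertRecord(array $fieldarray): array
    {
        $this->errors = $this->validate($fieldarray);
        if (!$this->errors) {
            $fieldarray['id'] = $this->nextId++;
            $this->rows[$fieldarray['id']] = $fieldarray;
        }
        return $fieldarray;
    }

    // Read: return every row whose columns match the given values.
    public function getData(array $where = []): array
    {
        return array_values(array_filter(
            $this->rows,
            fn(array $row) => array_intersect_assoc($where, $row) == $where
        ));
    }

    // Update: locate the row by its id, validate, then merge the changes.
    public function updateRecord(array $fieldarray): array
    {
        $id = $fieldarray['id'] ?? 0;
        if (!isset($this->rows[$id])) {
            $this->errors = ['id' => 'Record not found'];
            return $fieldarray;
        }
        $this->errors = $this->validate($fieldarray);
        if (!$this->errors) {
            $this->rows[$id] = array_merge($this->rows[$id], $fieldarray);
        }
        return $fieldarray;
    }

    // Delete: remove the row identified by its id.
    public function deleteRecord(array $fieldarray): array
    {
        unset($this->rows[$fieldarray['id'] ?? 0]);
        return $fieldarray;
    }

    // Assumed validation rule for this sketch: "name" must not be empty.
    private function validate(array $fieldarray): array
    {
        return ($fieldarray['name'] ?? '') === ''
            ? ['name' => 'Name is required']
            : [];
    }
}
```

The caller never invokes separate load, validate and store methods; it makes one call and then inspects `$errors`.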

When I created the properties for the table class I avoided the practice of having a separate property for each column. I noticed in my early testing that when data is sent in from the client device it is presented in the form of the $_POST variable which is an associative array. I also noticed that when reading data from the database each row is presented as an associative array. I liked the array processing in PHP, so as it was just as easy to access column data in an array I saw no reason to split either of those arrays into separate variables. That is why each table class has a single variable called $fieldarray instead of a separate variable for each column. This means that I don't have any setters (mutators) or getters (accessors) for individual columns, and this helps me achieve loose coupling which is supposed to be an important objective in OOP. Having a single array variable to hold row data also made it possible to extend it to an indexed array of associative arrays in order to handle data for more than one row at a time. Some people think that this is not allowed, but as that rule does not exist in my universe I ignore them.
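The single-array idea can be sketched as follows. This is an illustration only: the class `PersonSketch`, its `load()` method and the sample column names are hypothetical, and a plain array is used in place of a real `$_POST`.

```php
<?php
// Hypothetical sketch: a single array property carries every column value,
// so the class needs no per-column properties, getters or setters.
class PersonSketch
{
    public array $fieldarray = [];   // column name => value

    // Accept a whole row in one call, e.g. $_POST or a row read from the database.
    public function load(array $row): array
    {
        $this->fieldarray = $row;
        return $this->fieldarray;
    }
}

// Simulated form input; in a real web request this would be $_POST.
$post = ['first_name' => 'Ada', 'last_name' => 'Lovelace'];

$person = new PersonSketch();
$person->load($post);

// A new column arrives as one more array key; the class itself is unchanged.
$post['email'] = 'ada@example.com';
$person->load($post);
echo implode(', ', array_keys($person->fieldarray)), PHP_EOL;

// The same kind of variable can hold several rows:
// an indexed array of associative arrays.
$rows = [
    ['first_name' => 'Ada', 'last_name' => 'Lovelace'],
    ['first_name' => 'Alan', 'last_name' => 'Turing'],
];
foreach ($rows as $index => $row) {
    echo $index, ': ', $row['first_name'], ' ', $row['last_name'], PHP_EOL;
}
```

Because the calling code never names individual columns, adding or removing a column changes the contents of the array but not the interface between the two components.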

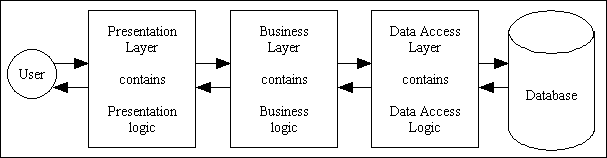

It was also obvious to me that in order to implement the 3-Tier Architecture all code to format the data into HTML belonged in the Presentation layer, not the Business layer. This then meant that the table class did nothing but supply the calling class with raw data, and it was up to the calling class to transform that data into HTML. This I achieved by creating a single reusable component which turned the raw table data into XML, then transformed it into HTML using an XSL stylesheet. Because I then had three components, each of which performed a separate part of the processing, this prompted a colleague to remark that what I had done was provide an implementation of the Model-View-Controller design pattern. After reading a description of this pattern I could see that this was indeed true. This combination of the 3-Tier Architecture and the MVC design pattern resulted in the structure shown in Figure 4:

Figure 4 - The MVC and 3-Tier architectures combined

As soon as I discussed this implementation in a newsgroup I was subjected to the usual criticism and verbal abuse, which, as usual, failed to persuade me to change my heretical ways.

I also created a separate script for each user transaction (list, search, create, read, update and delete) rather than having a single script which could perform all of those transactions. This was because of previous experience which showed the benefits of single-purpose modules instead of multi-purpose modules.

I also avoided using a Front Controller as I had no idea that such a stupid notion existed outside of compiled languages.

After writing the code which dealt with the first database table I then set about creating the scripts which did exactly the same thing for the next database table. The phrase "did exactly the same thing" should instantly signal to a wide-awake programmer that perhaps a reusable pattern could be emerging. To start with I took the first table class, copied it, then manually went through it and changed all the hard-coded references from table1 to table2. Although the code worked I could see that there was a huge amount of duplication, so I set about creating code that could be reused instead of duplicated. The recognised mechanism for reusing code between different classes is inheritance, so I created an abstract table class which was then inherited by each of my concrete table classes. I then moved all duplicated code from the concrete class into the abstract class, then tested each script to make sure that it still performed its function. When I finished this exercise I discovered that each of my concrete table classes consisted of nothing but a class with a constructor which could be built from the following template:

Code sample #1 - Turning the abstract class into a concrete class

```php
<?php
require_once 'std.table.class.inc';
class #tablename# extends Default_Table
{
    // ****************************************************************************
    // class constructor
    // ****************************************************************************
    function __construct ()
    {
        // save directory name of current script
        $this->dirname   = dirname(__file__);

        $this->dbname    = '#dbname#';
        $this->tablename = '#tablename#';

        // call this method to get original field specifications
        // (note that they may be modified at runtime)
        $this->fieldspec = $this->loadFieldSpec();

    } // __construct

// ****************************************************************************
} // end class
// ****************************************************************************
?>
```

Some people look at this code and exclaim that, because it is so small, I've done nothing but create an anemic data model. They fail to spot the amount of code which is inherited from the abstract table class. Those who look at this abstract table class then exclaim that, because it is so large, it surely must be a God object. By reaching such conclusions simply by counting the number of methods or lines of code they are proving that they can count, but not that they can read or understand what they read. The abstract table class is large because it contains all the methods that could possibly be used in a table class. These methods are a mixture of invariant and variable methods as defined in the Template Method Pattern. The concrete class is small because all it need do is identify a particular database table with a particular structure. If you think about it carefully you should see that by combining something which is "too big" with something that is "too small" what I end up with is something which is "just right".

In my original implementation the constructor contained hard-coded definitions of all the fields/columns which existed in that table plus all the specifications (type, size, et cetera) for each of those fields. I later replaced this with a method call which loaded those definitions from an external file. This meant that I could regenerate the contents of that external file without having to modify the contents of any existing class file.

Every time I post a description of my approach to OOP on the web I am immediately subjected to a barrage of criticism and abuse. A selection of such criticisms, with my responses, can be found at:

Some of these criticisms are discussed below.

This statement, which is discussed (and dismissed) in greater detail in OO Design is incompatible with Database Design, shows a failure in the abilities of the author, not OOP. As far as I am concerned any competent programmer who knows how to design and build database applications should have absolutely no difficulty in switching to the OO paradigm, and any programmer who cannot is, in my book, simply not competent. I personally have written database applications using various different languages and paradigms - COBOL (procedural), UNIFACE (Model Driven and Event Driven) and PHP (OO) - and with each new language and paradigm I have been able to develop similar applications at a faster rate and therefore a lower cost. If I can do it then it cannot be that difficult. Those programmers who struggle are simply bad workmen who are blaming their tools.

As documented in What is/is not considered to be good OO programming, after publishing my views in an internet forum I received a torrent of damning criticism amongst which were these remarks:

1. Having a separate class for each database table is not good OO.

2. Abstract concepts are classes, their instances are objects. IMO The table 'cars' is not an abstract concept but an object in the world.

3. Classes are supposed to represent abstract concepts. The concept of a table is abstract. A given SQL table is not, it's an object in the world.

This person obviously did not understand what he wrote, otherwise he would have seen a very close correlation with my code.

| Critic's statement | My implementation |
|---|---|
| The concept of a table is abstract | That is why I have an abstract table class which contains methods and properties which can be shared by any unspecified database table. |
| A given SQL table is not, it's an object in the world. | That is why I create a concrete class for each physical table by combining the abstract table class with the specific details of each particular table. |

My use of an abstract table class which is inherited by every concrete table class has allowed me to implement the Template Method Pattern on a large scale. Every method called by a Controller on a Model is a template method where the superclass contains the unvarying parts of an algorithm, and each subclass can override the varying methods in order to add their own unique behavior at points of variability.
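The shape of that arrangement can be sketched as below. This is a minimal sketch of the Template Method Pattern under stated assumptions, not the framework's source: apart from the method name `insertRecord()`, every name here (`AbstractTableSketch`, the `preInsert()` and `postInsert()` hooks, `ProductSketch`, `OrderSketch`, the `$stored` array standing in for the database) is hypothetical.

```php
<?php
// Hypothetical sketch of the Template Method Pattern for a table class.
abstract class AbstractTableSketch
{
    public array $errors = [];
    public array $stored = [];   // stands in for the database

    // Template method: the sequence of steps is fixed in the superclass.
    final public function insertRecord(array $fieldarray): array
    {
        $this->errors = [];
        $fieldarray = $this->preInsert($fieldarray);       // variable step (hook)
        if (!$this->errors) {
            $this->stored[] = $fieldarray;                 // invariant step
            $fieldarray = $this->postInsert($fieldarray);  // variable step (hook)
        }
        return $fieldarray;
    }

    // Hook methods: they do nothing unless a subclass overrides them.
    protected function preInsert(array $fieldarray): array
    {
        return $fieldarray;
    }

    protected function postInsert(array $fieldarray): array
    {
        return $fieldarray;
    }
}

// A table with no custom rules needs no code beyond the class declaration.
class ProductSketch extends AbstractTableSketch
{
}

// A table with a business rule overrides only the relevant hook.
class OrderSketch extends AbstractTableSketch
{
    protected function preInsert(array $fieldarray): array
    {
        if (($fieldarray['quantity'] ?? 0) <= 0) {
            $this->errors['quantity'] = 'Quantity must be greater than zero';
        }
        return $fieldarray;
    }
}
```

The calling code uses `insertRecord()` in exactly the same way on both subclasses; only the behaviour at the points of variability differs.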

My abstract table class, which is so huge that it has been called a "God" class, is then inherited by every one of the 450+ table classes which exist in my main enterprise application. This means that each of my table classes is then quite small, which means that they have been called anemic classes. These people are applying an artificial rule which states that no class should have more than "N" methods, and no method should have more than "N" lines of code (where "N" is any number that you care to pull out of your hat on that particular day). Such a rule does not exist in my universe, so I ignore it. What matters to me is that I am sharing a huge amount of code through inheritance, and that, to me, is far more important than being able to count to 10. Unlike some of my critics I can actually count higher than 10 without having to take my shoes and socks off.

Jeff Atwood's article Why Objects Suck contains the following statement which indicates that providing polymorphism in a database application is very difficult:

A typical business problem is the converse of a typical object-oriented problem. Business problems are generally interested in a very limited set of operations (CRUD being the most popular). These operations are only as polymorphic as the data on which they operate. The Customer.Create() operation is really no different behaviorally than Product.Create() (if Product and Customer had the same name, you could reuse the same code modulo stored procedure or table name), however the respective data sets on which they both operate are likely to be vastly different. As collective industry experience has shown, handing polymorphic data with language techniques optimized for polymorphic behavior is tricky at best. Yes, it can be done, but it requires fits of extreme cleverness on the part of the developer. Often those fits of cleverness turn into fugues of frustration because the programming techniques designed to reduce complexity have actually compounded it.

If polymorphism relies on different objects sharing the same method signature, why on earth is he linking it to data instead of methods? What on earth is "polymorphic data"? If it requires "fits of extreme cleverness" to achieve polymorphism in a database application then does that make me extremely clever? I suggest that he follow my example and instead of using methods such as Customer.Create() and Product.Create() he use something like the following:

```php
$object = new $table;
$result = $object->insertRecord($_POST);
```

The value for $table is supplied at runtime, and can be the name of any of the 450+ tables in my application. This works because every one of those classes inherits from my abstract table class which contains the generic ->insertRecord() method. This method will take the data it is given (the entire contents of the $_POST array) and validate it using the column specifications for that table, and if that validation passes it will call the ->insertRecord() method on the Data Access Object for that particular DBMS in order to generate an SQL "insert" query to add that data to the database. This simple technique did not require "fits of cleverness", nor did it turn into "fugues of frustration". I have been using this technique for over 10 years, and it has definitely helped, not hindered, my ability to maintain and extend my ever-growing enterprise application which I sell as a package to corporations all over the world.
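To make the mechanism concrete, here is a self-contained sketch under stated assumptions. The classes `GenericTableSketch`, `CustomerSketch` and `ProductSketch`, the simplified `$fieldspec` format and the "required" check are hypothetical; the real abstract class does far more, and the call to the Data Access Object is only indicated by a comment.

```php
<?php
// Hypothetical sketch: the same calling code works for any table class
// because every class inherits the same insertRecord() method.
abstract class GenericTableSketch
{
    protected array $fieldspec = [];   // column name => ['required' => bool]
    public array $errors = [];

    public function insertRecord(array $fieldarray): array
    {
        $this->errors = [];
        // Validate the input against this table's column specifications.
        foreach ($this->fieldspec as $column => $spec) {
            if (!empty($spec['required']) && ($fieldarray[$column] ?? '') === '') {
                $this->errors[$column] = "$column is required";
            }
        }
        // A real implementation would now pass the validated data to a
        // Data Access Object which builds and executes the SQL INSERT.
        return $fieldarray;
    }
}

class CustomerSketch extends GenericTableSketch
{
    protected array $fieldspec = [
        'name' => ['required' => true],
    ];
}

class ProductSketch extends GenericTableSketch
{
    protected array $fieldspec = [
        'name'  => ['required' => true],
        'price' => ['required' => true],
    ];
}

// The class name is an ordinary string supplied at runtime.
$input = ['name' => ''];
foreach (['CustomerSketch', 'ProductSketch'] as $table) {
    $object = new $table;
    $result = $object->insertRecord($input);
    echo $table, ': ', count($object->errors), ' error(s)', PHP_EOL;
}
```

The two lines inside the loop are identical for every table; the differences live entirely in each class's column specifications.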

The most common reason I hear as to why OOP and relational databases don't mix is because SQL is not object oriented, but this is a pure red herring. HTML is not object oriented either, but that does not stop legions of programmers from writing web applications using OOP. All the essential features of OOP do actually exist in relational databases, but they have different names and are implemented differently. Below is a summary of the comparisons which I documented in Object Relational Mappers are Evil:

| Complaint | Response |
|---|---|
| Databases do not have classes. | A class is the blueprint that defines objects of a certain kind. In a database the schema for each table (the CREATE TABLE script) defines the blueprint for each record in that table. |
| Databases do not have objects. | An object is an instance of a class. In a database each table row is an instance of that table's schema. |
| Databases do not have encapsulation. | Encapsulation is the act of placing data and the operations that perform on that data in the same class. Data is defined using columns in a table's schema. Operations do not have to be defined for each table as every table can only be accessed using one of the four generic CRUD operations. |
| Databases do not have inheritance. | Inheritance is the act of combining one class definition with another. In a database this is achieved with a foreign key which allows you to combine the data from one table with another by using a JOIN clause in the SQL query. |
| Databases do not have polymorphism. | Polymorphism is enabled by having the same operations available in different objects. In a database the same CRUD operations are available for every table, so it is possible to construct an SQL query and do nothing but change the table name in order for it to work on a different table. |
| Databases do not have <enter feature here> | Who cares? In OOP this is just an optional feature anyway, and if it is optional then there is no obligation to either include it in your code or emulate it in the database. OO does not include support for such things as transactions, commit and rollback, but why should it? These are implemented within the database, and all the code has to do is execute the relevant command in the database. |

This topic is discussed further in Object-Relational Mappers are Evil.

As you should be able to see, the names are different and the implementation is different, but the effects are more or less the same.
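The point about changing nothing but the table name can be illustrated with a small sketch. The function `buildSelectSketch()` is hypothetical and is not part of any framework or library; it assumes simple equality conditions and returns the SQL text together with the values to bind to its `?` placeholders.

```php
<?php
// Hypothetical helper: build the same parameterised SELECT for any table.
function buildSelectSketch(string $tablename, array $where = []): array
{
    $identifier = '/^[A-Za-z_][A-Za-z0-9_]*$/';

    // Identifiers cannot be bound as parameters, so accept only safe names.
    if (!preg_match($identifier, $tablename)) {
        throw new InvalidArgumentException("Invalid table name: $tablename");
    }

    $conditions = [];
    foreach (array_keys($where) as $column) {
        if (!preg_match($identifier, (string) $column)) {
            throw new InvalidArgumentException("Invalid column name: $column");
        }
        $conditions[] = "$column = ?";
    }

    $sql = "SELECT * FROM $tablename";
    if ($conditions) {
        $sql .= ' WHERE ' . implode(' AND ', $conditions);
    }

    // Return the SQL text and the values to bind to the placeholders.
    return [$sql, array_values($where)];
}

// The same code serves two different tables; only the name changes.
foreach (['customer', 'product'] as $table) {
    [$sql, $params] = buildSelectSketch($table, ['id' => 7]);
    echo $sql, PHP_EOL;
}
```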

One of the problems that is often put forward by traditionalists is that changes to the database structure are always cumbersome to implement as they require so many changes to so many different components. This to me is an obvious sign that their levels of coupling and cohesion are completely wrong, which means that they are not applying the principles of OOP correctly. Either that or they are applying what are actually the wrong principles.

In my methodology I ignore object aggregation and object composition, so I never have a class which is responsible for more than one database table. I also never have a separate property for each table column, so I can pass all the data around in a single array instead of having code which deals with each column individually. Because of these heretical steps any changes to the database structure can be dealt with quite simply by importing the changed structure into my Data Dictionary, then exporting the details to the application. This regenerates the table structure file but does not regenerate the table class file as this may have been customised since it was first created. This simple process keeps the two structures, database and software, always in sync, which means that I don't have this problem.

I have clearly demonstrated that the result of my approach is the production of software which exhibits loose coupling, high cohesion and high levels of reusability, which is supposed to be a sign of a proper implementation of OOP. I am clearly achieving the objectives of OOP while they are not, so why is my work described as "bad" while theirs is "good"?

My approach to OOP received the following complaint in a newsgroup:

If you have one class per database table you are relegating each class to being no more than a simple transport mechanism for moving data between the database and the user interface. It is supposed to be more complicated than that.

You are missing an important point - every user transaction starts life as being simple, with complications only added in afterwards as and when necessary. This is the basic pattern for every user transaction in every database application that has ever been built. Data moves between the User Interface (UI) and the database by passing through the business/domain layer where the business rules are processed. This is achieved with a mixture of boilerplate code which provides the transport mechanism and custom code which provides the business rules. All I have done is build on that pattern by placing the sharable boilerplate code in an abstract table class which is then inherited by every concrete table class. This has then allowed me to employ the Template Method Pattern so that all the non-standard customisable code can be placed in the relevant "hook" methods in each table's subclass. After using the framework to build a basic user transaction it can be run immediately to access the database, after which the developer can add business rules by modifying the relevant subclass.

Some developers still employ a technique which involves starting with the business rules and then plugging in the boilerplate code. My technique is the reverse - the framework provides the boilerplate code in an abstract table class after which the developer plugs in the business rules in the relevant "hook" methods within each concrete table class. Additional boilerplate code for each task (user transaction) is provided by the framework in the form of reusable page controllers.

If you look at Figure 1 you should see that the purpose of the software application is to sit between the user and the database. The user can send data to be stored in the database, or retrieve data from the database and have it displayed to the user in one format or another. This data may be massaged or manipulated in some way in either of the inbound or outbound journeys. The data never remains in the software, it simply passes through.

I have been building database applications for several decades in several different languages, and in that time I have built thousands of programs. Every one of these, regardless of which business domain they are in, follows the same pattern in that they perform one or more CRUD operations on one or more database tables aided by a screen (which nowadays is HTML) on the client device. This part of the program's functionality, the moving of data between the client device and the database, is so similar that it can be provided using boilerplate code which can, in turn, be provided by the framework. Every complicated program starts off by being a simple program which can be expanded by adding business rules which cannot be covered by the framework. The standard code is provided by a series of Template Methods which are defined within an abstract table class. This then allows any business rules to be included in any table subclass simply by adding the necessary code into any of the predefined hook methods. The standard, basic functionality is provided by the framework while the complicated business rules are added by the programmer.

The idea that this approach is too simple immediately tells me that too many of today's programmers are deliberately trying to make OOP more complicated than it need be, thus violating the KISS principle and ignoring the advice of Albert Einstein who said:

Everything should be made as simple as possible, but not simpler.

I'm afraid that I'm with Einstein on this one. Anyone who disagrees must be a member of the let's-make-it-more-complicated-than-it-really-is-just-to-prove-how-clever-we-are brigade and can be dismissed as a know-nothing charlatan. I can write effective software using nothing more than the bare minimum of OO features in the language, so I wonder why legions of others find it so difficult. I don't use any of the optional extras simply because I cannot find a use for them. They would force me to use more code, not less, and they would not add anything of value.

Other quotations in support of simplicity can be found in A minimalist approach to Object Oriented Programming with PHP.

This topic is also discussed in In the world of OOP am I Hero or Heretic?.

What exactly is the difference between Object Oriented code and procedural code? In his article All evidence points to OOP being bullshit John Barker says the following:

Procedural programming languages are designed around the idea of enumerating the steps required to complete a task. OOP languages are the same in that they are imperative - they are still essentially about giving the computer a sequence of commands to execute. What OOP introduces are abstractions that attempt to improve code sharing and security. In many ways it is still essentially procedural code.

I read that as saying the following:

OO languages are identical to procedural languages, but with the addition of encapsulation, inheritance and polymorphism.

Human beings think in a linear fashion, performing steps in a logical sequence one after the other. Computers execute code in a linear fashion, executing one instruction before following it with the next. Procedural code is executed in a linear fashion. OO code is executed in a linear fashion. OO code uses classes and objects while procedural code does not. The same computer processor can execute both procedural code and OO code because it does not know there is a difference, therefore there is no practical difference.

Some people seem to think "proper" OO is not as simple as taking procedural code and putting it in classes so that you can take advantage of inheritance and polymorphism. I could not disagree more. I have taken my years of experience of writing database applications in procedural languages and have successfully applied the principles of OOP to write identical applications with more reusability, less code, and which are quicker to write and easier to maintain and extend. By doing so I have achieved the objectives of OO, therefore how can anyone say that my implementation of OO is wrong? Different, maybe. Wrong, no.

According to Yegor Bugayenko in his article Are You Still Debugging? the differences between procedural and OO programming can be expressed as follows:

Pardon my French, but that is a complete load of ~~bollocks~~ balderdash. It does not matter two hoots what name you give to a method/function provided that it is meaningful and not too long. The name should identify what the method does so that you have a very good idea of what is supposed to happen when you call it. Changing the name so that it is centered around a noun instead of a verb has no magical effect on how the underlying code is executed. The name could even be a string of random characters that are meaningless to a human being, but the computer would not care. It would still allow the method/function to be called, and it would still execute the code within that method/function in the same linear fashion.

Opinions such as those simply prove that the author has lost the plot. OOP involves the creation of objects which have properties (data) and methods (operations). Objects are instantiated from classes which represent entities. Methods identify operations which can be performed on an object. Am I the only one who sees that entities are nouns while operations are verbs? This should be obvious if you look at the following two lines of PHP code:

```php
$result = $object->method();
$result = $noun->verb();
```

Saying that OO code is different from procedural code is plain wrong. You can take code from a procedural function and wrap it in a class and it will still work in exactly the same way. Nothing magical happens to the way in which the code is executed just because it is in a class method. If I write code which is oriented around objects, then by definition it is Object Oriented. The only "trick" in using OO is to identify how many classes you need to complete a task, what each class does, and how you link those classes together. This topic is discussed in What type of objects should I create?
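A tiny sketch shows the wrapping in practice. The function `add_vat()` and the class `VatCalculatorSketch` are invented for this illustration; the body of the method is the body of the function, unchanged.

```php
<?php
// Procedural version: a plain function.
function add_vat(float $net, float $rate): float
{
    return round($net * (1 + $rate), 2);
}

// Object oriented version: the same code wrapped in a class method.
class VatCalculatorSketch
{
    public function addVat(float $net, float $rate): float
    {
        return round($net * (1 + $rate), 2);
    }
}

$procedural = add_vat(100.0, 0.2);

$calculator = new VatCalculatorSketch();
$objectOriented = $calculator->addVat(100.0, 0.2);

// Both calls execute the same statements in the same order.
var_dump($procedural === $objectOriented);
```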

In my methodology I create a separate class for each database table. This class contains the business rules and the data structure of that table, with all its basic operations inherited from my abstract table class. Each resulting object then holds the data for a particular database table and provides all the operations that can be performed on that data. According to Yegor Bugayenko in his article Getters/Setters. Evil. Period. this idea is totally wrong:

Objects are not "simple data holders". Objects are not data structures with attached methods. This "data holder" concept came to object-oriented programming from procedural languages, especially C and COBOL. I'll say it again: an object is not a set of data elements and functions that manipulate them. An object is not a data entity.

Pardon my French, but that is a complete load of ~~bollocks~~ balderdash. Objects most definitely are data structures with attached methods, as that matches the definition of encapsulation. It also matches the definition of the Table Module from Martin Fowler's Patterns of Enterprise Application Architecture (PoEAA) in which he says:

One of the key messages of object orientation is bundling the data with the behavior that uses it.
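As a rough sketch of what "one class per database table, with basic operations inherited from an abstract table class" could look like, consider the following. Every name here (`AbstractTable`, `Dog`, `beforeInsert`, the column names) is hypothetical and is not taken from my framework; it only illustrates data structure and behaviour being bundled together:

```php
<?php
// Hypothetical abstract class: holds what every table class shares.
abstract class AbstractTable
{
    protected $tableName;        // set by each concrete class
    protected $fieldList = [];   // column names for this table

    // Basic operation inherited by every table class.
    public function insertRecord(array $row)
    {
        // Keep only the columns which belong to this table.
        $row = array_intersect_key($row, array_flip($this->fieldList));
        // Let the concrete class apply its own business rules.
        return $this->beforeInsert($row);
    }

    // Hook with a default "do nothing" implementation.
    protected function beforeInsert(array $row)
    {
        return $row;
    }

    public function getTableName()
    {
        return $this->tableName;
    }
}

// Concrete class: the data structure and rules of one table.
class Dog extends AbstractTable
{
    protected $tableName = 'dog';
    protected $fieldList = ['dog_id', 'name', 'weight_kg'];

    // A business rule specific to the DOG table.
    protected function beforeInsert(array $row)
    {
        if (isset($row['name'])) {
            $row['name'] = trim($row['name']);
        }
        return $row;
    }
}

$dog = new Dog();
$row = $dog->insertRecord(['name' => ' Rex ', 'weight_kg' => 23, 'submit' => 'Save']);

// 'submit' is dropped because it is not a column; 'name' is trimmed to 'Rex'.
print_r($row);
```

The concrete class contains nothing but what is unique to its table; everything else is inherited.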

Later in the same article Yegor says:

In true object-oriented programming, objects are living creatures, like you and me. They are living organisms, with their own behavior, properties and a life cycle.

WTF!!! How can objects in the software be living organisms? Surely objects are just representations or models of those things with which the software must interact, and not the physical objects themselves. He offers the following explanation:

We are differentiating the procedural programming mindset from an object-oriented one. In procedural programming, we're working with data, manipulating them, getting, setting, and deleting when necessary. We're in charge, and the data is just a passive component. The dog is nothing to us - it's just a "data holder". It doesn't have its own life. We are free to get whatever is necessary from it and set any data into it. This is how C, COBOL, Pascal and many other procedural languages work(ed).

On the contrary, in a true object-oriented world, we treat objects like living organisms, with their own date of birth and a moment of death - with their own identity and habits, if you wish. We can ask a dog to give us some piece of data (for example, her weight), and she may return us that information. But we always remember that the dog is an active component. She decides what will happen after our request.

This is yet another load of balderdash. He tries to justify it with the following example:

Can a living organism have a setter? Can you "set" a ball to a dog? Not really. But that is exactly what the following piece of software is doing:

`Dog dog = new Dog(); dog.setBall(new Ball());`

How does that sound? Can you get a ball from a dog? Well, you probably can, if she ate it and you're doing surgery. In that case, yes, we can "get" a ball from a dog. This is what I'm talking about:

`Dog dog = new Dog(); Ball ball = dog.getBall();`

Or an even more ridiculous example:

`Dog dog = new Dog(); dog.setWeight("23kg");`

Can you imagine this transaction in the real world? :) Start thinking like an object and you will immediately rename those methods. This is what you will probably get:

`Dog dog = new Dog(); dog.take(new Ball()); Ball ball = dog.give();`

Now, we're treating the dog as a real animal, who can take a ball from us and can give it back, when we ask. Besides that, object thinking will lead to object immutability, like in the "weight of the dog" example. You would re-write that like this instead:

`Dog dog = new Dog("23kg"); int weight = dog.weight();`

The dog is an immutable living organism, which doesn't allow anyone from the outside to change her weight, or size, or name, etc. She can tell, on request, her weight or name. There is nothing wrong with public methods that demonstrate requests for certain "insides" of an object. But these methods are not "getters" and they should never have the "get" prefix. We're not "getting" anything from the dog. We're not getting her name. We're asking her to tell us her name. See the difference?

By arguing about which method names to use, this person is wasting far too much time on petty, nit-picking, inconsequential trivialities. It does not matter what the method name is provided that it describes, as succinctly as possible, what happens when that method is called. What it does must be obvious; how it does it does not matter.

This person is so far wide of the mark he is not even on the same planet. A database application is software which communicates with passive objects in a database, not physical objects in the real world. These database objects are not living organisms, they are simply collections of data which are organised into tables and columns. A table is a passive object in that it can only receive requests and return a response - it can never generate a request or do anything on its own.

If I own a business which deals with dogs, such as buying, selling or breeding dogs, then if I have a computer system at all it will do nothing but record information about the dogs in my possession in order to help me run my business. Such a system would not interact with any living dogs, it would merely sit in the middle between a human being at a monitor at one end and a database at the other. All the software would do is pass information between the user and the database. This also means that although the physical object may be able to do certain things, or have certain things done to it, those "things" will not be made available as operations or methods inside the application as they would be totally irrelevant. For example, a real dog may be able to walk, run, roll over, eat, sleep and defecate, but would a sensible programmer build these operations into a business application?

Actions like giving or taking a ball from a dog would not exist in the computer application as they would not be relevant to the business. There would be no user transaction called "Give ball to dog" or "Take ball from dog". As for asking a dog for its name, it cannot tell you as it cannot speak. If you want to know the name of a dog then you would look at the dog's name tag, or use an RFID scanner to obtain its identity from a microchip which has been embedded under its skin. As for obtaining a dog's weight, you never ask the dog itself - you put the dog on a set of scales, you read off the number, and you update the dog's record in the database. In this process the dog itself is not an active component - it does not tell you its weight, it allows itself to be weighed. A dog does not know that it has weight, it cannot measure its weight, and it cannot tell you its weight. In this context "weight" exists as nothing more than a value on a set of scales and a column in the DOG table. In order to set a dog's weight you perform an UPDATE operation on that dog's record in the database. In order to see a dog's recorded weight you perform a SELECT operation which retrieves that dog's data from the database. Remember that these operations are performed on the DOG table and not a living dog.

Each task in a database application does something with the data in a database table, and this "something" is limited to just four operations - Create, Read, Update and Delete. These operations do not act on one column at a time, they act on sets of columns in one or more records. A single READ operation is capable of retrieving any number of columns from any number of tables, an UPDATE operation can change the values in any number of columns in any number of rows in a single table, but the INSERT and DELETE operations affect whole rows of data, not just individual columns. When one of my Controllers receives a request it passes it on to a Model object using a corresponding method. The Model may perform some of its own processing before it passes that request on to the DAO, which constructs and executes the relevant SQL query on the database. The query produces a result which is returned to the Model, which may perform some of its own processing before the result is returned to the user in the form of a View. Below is a brief overview of the method names and the corresponding database operation:

| Controller action | Database action |
|---|---|
| `$model->insertRecord($_POST)` | `INSERT INTO <tablename> .....` |
| `$model->getData($where)` | `SELECT ... FROM <tablename> ..... WHERE $where` |
| `$model->updateRecord($_POST)` | `UPDATE <tablename> SET ..... WHERE $where` |
| `$model->deleteRecord($_POST)` | `DELETE FROM <tablename> WHERE $where` |

You might understand this better by looking at some UML diagrams which go into greater detail.
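The mapping in the table above can also be illustrated with a small sketch. This is not the code from my framework: the class names `SqlBuilder` and `DogModel`, the `dog` table and its columns are all assumptions made for the example, and the stand-in DAO only builds the SQL text (with named placeholders for the values) instead of executing anything:

```php
<?php
// Hypothetical DAO: builds SQL text only, it does not touch a database.
class SqlBuilder
{
    public function insert($table, array $row)
    {
        $columns = array_keys($row);
        $marks = array_map(function ($column) {
            return ':' . $column;
        }, $columns);
        return "INSERT INTO $table (" . implode(', ', $columns)
             . ') VALUES (' . implode(', ', $marks) . ')';
    }

    public function select($table, $where)
    {
        return "SELECT * FROM $table" . ($where !== '' ? " WHERE $where" : '');
    }

    public function update($table, array $row, $keyColumn)
    {
        $sets = [];
        foreach ($row as $column => $value) {
            if ($column !== $keyColumn) {
                $sets[] = "$column = :$column";
            }
        }
        return "UPDATE $table SET " . implode(', ', $sets)
             . " WHERE $keyColumn = :$keyColumn";
    }

    public function delete($table, $keyColumn)
    {
        return "DELETE FROM $table WHERE $keyColumn = :$keyColumn";
    }
}

// Hypothetical Model for the DOG table: one method per database operation.
class DogModel
{
    private $table = 'dog';
    private $primaryKey = 'dog_id';
    private $dao;

    public function __construct(SqlBuilder $dao)
    {
        $this->dao = $dao;
    }

    public function insertRecord(array $row)
    {
        return $this->dao->insert($this->table, $row);
    }

    public function getData($where)
    {
        return $this->dao->select($this->table, $where);
    }

    // The WHERE clause is derived from the primary key inside the row.
    public function updateRecord(array $row)
    {
        return $this->dao->update($this->table, $row, $this->primaryKey);
    }

    public function deleteRecord(array $row)
    {
        return $this->dao->delete($this->table, $this->primaryKey);
    }
}

// What a Controller would do, with an array standing in for $_POST.
$post  = ['dog_id' => 7, 'name' => 'Rex', 'weight_kg' => 23];
$model = new DogModel(new SqlBuilder());

echo $model->insertRecord($post), "\n";
echo $model->getData('weight_kg > 20'), "\n";
echo $model->updateRecord($post), "\n";
echo $model->deleteRecord($post), "\n";
```

Note that the whole row is handed over in one array each time; at no point is a single column passed on its own.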

There would be no need for the application to have separate user transactions to update or view individual columns from the DOG table as the user interface would show all the dog's data in a single screen. There would therefore be no need to create methods to work on individual columns as each user transaction would use SQL queries that operated on multiple columns at the same time. Each screen would show all the columns from a record in the DOG table, all the columns would be sent to the software in the $_POST array, all the columns would be validated in a single operation, and all these columns would then be passed to the DAO so that it could construct and execute a single query to either INSERT or UPDATE a record in the DOG table. A wise person would therefore see that the database operates on sets of columns, not individual columns, so having methods in the software which operate on individual columns would immediately put the software at odds with the database, which is not a good idea. I was used to the concept of working with datasets before I switched to an OO-capable language, and I have continued using that concept with great success since switching.
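To show what "all the columns would be validated in a single operation" might mean in practice, here is a minimal sketch. The function `validate_row`, the `$fieldSpec` layout and the column names are assumptions invented for this example; the point is only that the whole array is checked in one call, with no setter per column:

```php
<?php
// Hypothetical field specifications for a DOG table.
$fieldSpec = [
    'name'      => ['required' => true,  'type' => 'string'],
    'weight_kg' => ['required' => true,  'type' => 'numeric'],
    'breed'     => ['required' => false, 'type' => 'string'],
];

// Validate every column of the row in one pass and return
// an array of error messages keyed by column name.
function validate_row(array $row, array $fieldSpec)
{
    $errors = [];
    foreach ($fieldSpec as $column => $spec) {
        $value = isset($row[$column]) ? trim((string) $row[$column]) : '';
        if ($value === '') {
            if ($spec['required']) {
                $errors[$column] = 'This field is required';
            }
            continue;
        }
        if ($spec['type'] === 'numeric' && !is_numeric($value)) {
            $errors[$column] = 'This field must be a number';
        }
    }
    return $errors;
}

// Stand-in for the $_POST array sent from a single screen.
$post = ['name' => 'Rex', 'weight_kg' => '23kg', 'breed' => ''];

$errors = validate_row($post, $fieldSpec);

// Only weight_kg fails: '23kg' is not numeric, and breed is optional.
print_r($errors);
```

If the returned array is empty, the same `$post` array can be passed on unchanged to build a single INSERT or UPDATE query.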

If you think that his idea of treating objects as living creatures is absurd, things get worse in his article when he repeats a quote from David West:

Step one in the transformation of a successful procedural developer into a successful object developer is a lobotomy.

I assume by this statement he means that an OO developer is just like a procedural developer, but without a working brain. Now that I can believe.

Although other developers are constantly telling me that I am writing what they call "legacy code", they are missing the point concerning enterprise applications. Large corporations tend to shy away from brand new leading edge or bleeding edge software, something which has been recently developed using the latest buzzword or shiny gizmo. They like their software to be mature, to be tried and tested, to be low risk, to be proven, to have a pedigree. In other words, large corporations prefer legacy software, and as I develop software specifically for use by large corporations my first priority is to please them and not a bunch of know-nothing developers.

Many developers seem to think that legacy code (i.e. code that has not been freshly written to today's fashionable standards) is automatically bad because it is old. I have got some ground-breaking news for you guys - good code does not deteriorate with age. It does not go rusty, it does not slow down, it does not fall apart. The only way for good code to become bad is when it is attacked by a bad programmer. The code I wrote over a decade ago in PHP4 still runs today in PHP7, apart from one minor change. The computer processor does not execute the code differently because of its age because it does not know its age. When it is given a piece of code to execute it does not know whether that code was written 10 years ago or 10 seconds ago.

I do not change my code to use every new gizmo, gadget or gimmick that appears in the language simply because it has become available. I see no reason to change code which works perfectly well just to achieve the same result in a different manner. The only thing that has changed over the years with my framework and my main enterprise application is that I have modified or added some areas of functionality, and being a halfway-decent programmer I have managed to do this without screwing up.

When I first started using PHP in 2001 it was with version 4, and I quickly learned to write effective OO software using nothing more than encapsulation, inheritance and polymorphism, which PHP 4 fully supported. I used this to create my development framework, which was based on similar frameworks that I had created in previous languages, and I developed several applications using that framework. When PHP5 was released in 2004 I still had to support my PHP4 customers, so I did the bare minimum to ensure that my codebase could run in both PHP4 and PHP5. This meant that the additional OO features that were added in PHP5 did not appear in my codebase as they could not be used by my PHP4 customers. I started developing my major enterprise application in January 2007, and this is still being used, maintained and actively supported to this day. Even though I no longer have any PHP4 customers I have never upgraded my codebase to include the additional features of PHP5 as I cannot find any practical use for them. Even though my codebase now runs in PHP7 it still looks like my original PHP4 code. It would take an enormous amount of effort to rewrite my code to incorporate all the new OO features, but as it would not make my software run any better I do not see the point. My attitude is that if it ain't broke, don't fix it.

Those programmers who complain that code which I wrote over ten years ago, and which is still in use today, must be bad because of its age are inadvertently admitting to the fact that they cannot write code which lasts that long. They write such crap that it has to be thrown away and rewritten after just a few short years. How many of them have ten year old applications which are still running?

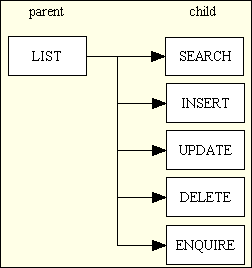

An enterprise application is primarily concerned with putting data into and then getting data out of a database. Such an application will have hundreds, if not thousands, of tasks (user transactions) which perform a unit of work for the user, and each of these will (except in rare circumstances) involve one or more records in one or more database tables. As has been explained earlier, the only operations which can be performed on a database table are INSERT, SELECT, UPDATE and DELETE, commonly known as CRUD, but it would be extremely naive to say that every one of those tasks is simple. In my decades of experience with writing database applications the term "simple" can only be used when describing a common family of forms which can be seen, in one form or another, in every single database application that has ever been written, such as that shown in Figure 5:

Figure 5 - A typical Family of Forms

This family consists of 6 simple forms - List1, Add1, Enquire1, Update1, Delete1 and Search1 - which can be built to operate on any table in any database. Using my Data Dictionary it is possible to create and run this forms family for a database table in just 5 minutes, all without writing a single line of code - no PHP, no HTML and no SQL. Contrast this with the amount of code that has to be written using other "proper" frameworks or ORMs, as shown in A minimalist approach to Object Oriented Programming with PHP. While this exercise would indeed produce 6 simple screens, these represent only 6 of the 40+ Transaction Patterns which are available in my catalog. These additional patterns offer different ways of accessing the database for different purposes, such as maintaining many-to-many relationships, which are far beyond anything I have seen in other CRUD frameworks.