I did not pull the design of my RADICORE framework out of thin air when I started programming in PHP; it was just another iteration of something which I had first designed and developed in COBOL in the 1980s and then redeveloped in UNIFACE in the 1990s. I switched to PHP in 2002 when I realised that the future lay in web applications and that UNIFACE was not man enough for the job. PHP was the first language I used which had Object-Oriented capabilities, and despite my lack of formal training in the "rules" of OOP I managed to teach myself enough to create a framework which increased my productivity to such an extent that I judged my efforts to be a great success. In the following sections I trace my path from junior programmer to author of a framework that has been used to develop a web-based ERP application which is now used by multi-national corporations on several continents.

In 1971, when I was interviewed for my first job in IT (or Data Processing as it was known in those days), my interviewer was not interested in any academic qualifications. He gave me an aptitude test to see if I had a logical mind, as he considered that to be more important than anything else. Although I started off as a computer operator I taught myself COBOL in order to modify a program that was used in the operations department. This was tricky as it was unstructured, undocumented and badly written, but somehow I succeeded. This impressed my boss so much that he arranged for me to move into a programming department, where my first task was to fix bugs in a log file analysis program. This was much larger and more complicated, but easier to follow as it had a proper structure. Once I found out what the program did I discovered that some of the bugs were in the design: the original author did not understand how the logs were written, so the structure of his design was out of step with the structure of the problem. That is when I learned the importance of being able to combine logic and structure - you need to work out the logic and structure of the problem you are trying to solve before you can work out the logic and structure of the solution you are trying to write. A later course in Jackson Structured Programming reinforced the view that you needed to analyze the structure of the input data before you could produce a program design based on those data structures, so that the program's control structure handled those data structures in a natural and intuitive way.

My pre-PHP history is explained in more detail in My Career History.

It is important to note that programming on a UNIVAC mainframe did not involve screens and keyboards; it involved coding pads and punched cards. We wrote our code on sheets of paper from a coding pad and sent those sheets to the punch room, where the operators used keypunches to turn them into punched cards which were then returned to us. After checking these cards we would fill out a job sheet, attach it to the cards, then submit the cards and job sheet to the computer room so that the operators could load the deck of cards and schedule its run time. The results were printed on fan-fold paper which we collected from the computer room after the job had run. Everything in those days was run as batch jobs; there was no online processing. We usually worked on our code during the day, which involved a lot of desk checking, submitted our jobs for processing overnight, then collected the printed output the next morning.

As there was no online processing there was also no online debugging. If our program aborted it would print a dump of the program's memory, which had one part for the data and another part for the code, and we had to work out what had gone wrong from the memory addresses in use at the time of the crash. This was a long and tedious task. As a debugging aid all we could do was print out certain values while the program was running to give an indication of where it was in the code and what it was doing.

COBOL is a language where an executable program is constructed in several stages. The source code, which is maintained in a text file, has to be compiled into intermediate files known as Relocatable Binary. There is one of these intermediate files for each file of source code, thus allowing a large program to be constructed from smaller pieces. A final link process would then assemble these intermediate files into an executable program file. One of the intermediate files would have to be identified as the starting (or MAIN) procedure while all the others would be treated as subprograms.

When I joined my first development team as a junior COBOL programmer we did not use any framework or code libraries, so every program was written completely from scratch. As I wrote more and more programs I noticed that more and more code was being duplicated. There was no central library of reusable code in any of these projects, so the only way I found to deal with this when writing a new program was to copy the source code of a similar existing program, then change all those parts which were different. It was not until I became a senior programmer in a software house that I had the opportunity to start putting this duplicated code into a central library so that I could define it just once and then call it as many times as I liked, thus increasing my productivity. When I became team leader I made my personal library available to the whole team so that they could also become more productive. Once I had started using this library it had a snowball effect in that I found more and more pieces of code which I could convert into library subroutines. This is now documented in Library of Standard Utilities. I also took advantage of an addition to the language, macros, which allowed a single line of code to be expanded into multiple lines during the compilation process; these are documented in Library of Standard COBOL Macros. Later on my personal programming standards were adopted as the company's formal COBOL Programming Standards.

While I did some COBOL programming on a UNIVAC 1100 mainframe followed by a Data General Eclipse, the vast majority of my work was done on a Hewlett Packard mini-computer using VIEW forms processing (later renamed VPLUS) and the IMAGE database (later renamed TurboIMAGE).

VPLUS forms were displayed on VDUs which operated in block mode. Each screen first had to be designed and compiled using a separate program called FORMSPEC, which kept these details in a separate disk file known as a forms file. Each screen/form was given a unique name and comprised text for labels and headings plus fields for data. A field was a piece of text delimited by a pair of '[' and ']' characters into which the user could enter data. The delimiters could be made invisible on the screen if required. After this you went into the field editing menu which allowed you to define the properties of each field. The size was automatically limited to the number of characters between the '[' and ']' delimiters, but you could define a user-friendly field name, its data type and any processing rules.
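The sizing rule can be illustrated with a short Python sketch. It is not FORMSPEC, whose internals are not described here; it simply scans a hypothetical text layout and derives each field's row, starting column and length from its '[' and ']' delimiters.

```python
import re

# A hypothetical screen layout: text labels plus fields delimited by '[' and ']'.
LAYOUT = [
    "Customer No. [      ]   Name [                    ]",
    "Credit Limit [        ]",
]

# One field = '[' followed by any non-']' characters, then ']'.
FIELD_PATTERN = re.compile(r"\[[^\]]*\]")


def find_fields(layout):
    """Return (row, column, size) for every field on the layout.

    The size counts only the characters between the delimiters,
    mirroring the rule that a field's length is fixed by the layout.
    """
    fields = []
    for row, line in enumerate(layout):
        for match in FIELD_PATTERN.finditer(line):
            size = match.end() - match.start() - 2  # exclude '[' and ']'
            fields.append((row, match.start(), size))
    return fields


if __name__ == "__main__":
    for field in find_fields(LAYOUT):
        print(field)
```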

Block mode meant that once a form/screen was displayed on the VDU the user was free to move the cursor around from field to field to enter data, but there would be no communication with the software until the ENTER key was pressed, at which point all the data would be sent as one contiguous block.

The Forms Management System which was available on HP mini-computers was called VIEW (later VPLUS) which could be accessed using the following set of intrinsics (system functions):

As it was often necessary to call a group of intrinsics, with error checking, to perform an operation, I grouped these intrinsics into a series of wrapper functions which could be accessed using a collection of macros (pre-compiler directives) in order to cut down on the amount of typing.

Note that the calls to VGETBUFFER and VPUTBUFFER had to be hard-coded as each form required a separate definition of the data buffer to identify all the fields on that form. It was not possible to have a single call which would accept different buffers. If this buffer ever got out of step with the field definitions in the compiled form then the data would become corrupted.
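The danger of a buffer drifting out of step can be simulated in Python. The field names and widths below are hypothetical, and slicing a string stands in for moving a contiguous block into a COBOL record; nothing here calls VGETBUFFER itself.

```python
# Hypothetical buffer layout compiled into the program: (name, width).
CURRENT_LAYOUT = [("CUST-NO", 6), ("CUST-NAME", 20), ("CREDIT-LIMIT", 8)]


def split_buffer(block, layout):
    """Slice a contiguous block of characters into named fields by position."""
    fields, offset = {}, 0
    for name, width in layout:
        fields[name] = block[offset:offset + width]
        offset += width
    return fields


# Suppose the form was changed to widen CUST-NO to 8 characters and recompiled,
# but the program's buffer definition was not updated.
NEW_LAYOUT = [("CUST-NO", 8), ("CUST-NAME", 20), ("CREDIT-LIMIT", 8)]
block = "".join(
    value.ljust(width)
    for (_name, width), value in zip(NEW_LAYOUT, ["C0000123", "ACME LTD", "00005000"])
)

print(split_buffer(block, NEW_LAYOUT))      # fields line up
print(split_buffer(block, CURRENT_LAYOUT))  # every field after CUST-NO is shifted
```

Nothing fails loudly in the mismatched case: the program simply receives plausible-looking but wrong values, which is why keeping the two definitions in step mattered so much.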

In my early days it was common practice to build large programs which could handle several user transactions using different forms, but this meant that the logic for each form and user transaction was mixed with the logic for the other forms and user transactions in the same source file. There was also a limit to the amount of memory a program could use, so the practice was to split the processing across several separate programs, such as one for customer details, a second for product details, and a third for order details. However, there was no method for sharing data between the different programs, so when building an order you had to look up the customer number in the customer program, look up the product id in the product program, then manually enter them into the order screen.

This situation was solved by separating the code into segments so only one segment was loaded into memory at a time. When code from another segment was required the current segment was swapped out to disk in order to make room for the new segment. Care had to be taken when deciding which piece of code went into what segment to minimise the amount of segment swapping. This then allowed the code for the customer, product and order programs to be assembled into a single executable program, and to pass around the customer id and product id in a global variable. I then decided to make each program less complicated by limiting each one to a single form and a single user transaction.

The standard database management system (DBMS) which was available on HP mini-computers was called IMAGE (later TurboIMAGE). This was a Network Database which had two basic types of table - a master and a detail. Unlike a Relational Database it did not have an SQL interface. Instead it required the use of a set of function calls known as TurboIMAGE intrinsics, with a separate function for each operation, such as:

Calling these intrinsics was simplified by the provision of a set of macros (pre-compiler directives).

Note that the DBGET intrinsic could only read columns from a single dataset (table). The list argument was a comma-separated list of column names and the buffer argument was a composite data type in which the values for each of those columns would be placed. This meant that JOINs to other tables were not possible.

In order to handle the data from a file - any file, be it database or non-database - it was necessary to define a record structure in the DATA DIVISION which defined its component parts and the sizes and types for each of those parts. When data was read in the entire structure was replaced in one go, so if there were any discrepancies between the physical structure of the record and its perceived structure in the software then some data corruption would occur. Because all data coming into the program, either from a screen or the database, was received in a predefined composite data type there was no need to include any code to perform type checking as the compiler would not allow any item to be updated with data of the wrong type.

Using standard code from a central library made each programmer more productive as they had less code to write, and it eliminated the possibility of making some common mistakes. One common mistake that was eliminated was failing to keep the definitions of certain data buffers, such as those for forms files (screens) and database tables, in line with their physical counterparts. This was taken care of by the COPYGEN utility, which read the external definitions and generated text files which could then be added to a copy library so that the buffer definitions could be included into the program at compile time. Incorporating changes into the software therefore became much easier - change the forms file or database, run the COPYGEN utility, rebuild the copy library from the generated text files, then recompile all programs to include the latest copy library entries.
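The COPYGEN idea, generating buffer definitions from one external definition instead of typing them by hand, can be sketched in Python. The field list and the mapping to PIC clauses are assumptions for illustration; the real utility read the forms file and database schema directly, and its exact output format is not shown in this article.

```python
# Hypothetical external definition of one form or dataset: (name, type, length).
# 'X' = alphanumeric, '9' = unsigned numeric display.
CUSTOMER_FIELDS = [
    ("CUST-NO", "9", 6),
    ("CUST-NAME", "X", 20),
    ("CREDIT-LIMIT", "9", 8),
]


def generate_copybook(record_name, fields):
    """Return COBOL source text for a record definition built from field specs."""
    # Level 01 starts in Area A (column 8); level 05 entries sit in Area B.
    lines = [f"       01  {record_name}."]
    for name, ftype, length in fields:
        lines.append(f"           05  {name:<20} PIC {ftype}({length}).")
    return "\n".join(lines)


print(generate_copybook("CUSTOMER-BUFFER", CUSTOMER_FIELDS))
```

Because every program includes the generated text rather than a hand-written copy, a change to the external definition reaches all programs on their next recompile.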

One of the first changes I made to what my predecessors had called "best practice" was to change the way in which program errors were reported in order to make the process "even better". Some junior programmers were too lazy to do anything after an error was detected, so they just executed a STOP RUN or EXIT PROGRAM statement. The problem with this was that it gave absolutely no indication of what the problem was or where it had occurred. The next step was to display an error number before aborting, but this required access to the source code to find out where that error number was coded. The problem with both of these methods was that any open files, including the database, the formsfile, any KSAM files and any other disc files, which were not explicitly closed in the code would remain open. This posed a problem if a program failed during a database update which included a database lock, as the database remained both open AND locked. This required a database administrator to log on and reset the database.

The way that one of my predecessors solved this problem was to insist that whenever an error was detected in a subprogram, instead of aborting there and then, control should cascade back up the stack to the starting program (where the files were initially opened) so that the files could be properly closed. This error procedure was also supposed to include some diagnostic information to make the debugging process easier, but it had one serious flaw. While the MAIN program could open the database before calling any subprograms, each subprogram defined the data buffers for each table that it accessed within its own WORKING-STORAGE section, and when that subprogram exited its WORKING-STORAGE area was lost. If an error occurred in a subprogram while accessing a database table the database system inserted some diagnostic information into that buffer, but when the subprogram returned control to the place from which it had been called this buffer, and the diagnostic information which it contained, was lost, making the error report incomplete and virtually useless. This to me was unsatisfactory, so I came up with a better solution which involved the following steps:

This error report showed what had gone wrong and where it had gone wrong using all the information that was available in the communication areas. As it had access to the details for all open files it could close them before terminating. The database communication area included any current lock descriptors, so any locks could be released before the database was closed. Because of the extra details now included in all error reports this single utility helped reduce the time needed to identify and fix bugs.
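A minimal Python sketch of the principle, assuming a single shared communication area (the class, attribute and function names are hypothetical): because the diagnostics and the list of open resources live outside any one subprogram, the error routine can still report them and tidy up after the failing subprogram has returned.

```python
class CommunicationArea:
    """Hypothetical shared area holding everything the error routine needs."""

    def __init__(self):
        self.open_files = []   # resources opened by the MAIN program, in order
        self.locks = []        # current lock descriptors
        self.db_status = None  # diagnostics left by the last failing database call


def fatal_error(comm, program, message, log):
    """Report what went wrong and where, release locks, then close files.

    A real program would terminate after this; the sketch just returns the log.
    """
    log.append(f"ERROR in {program}: {message}; db status = {comm.db_status}")
    for lock in reversed(comm.locks):
        log.append(f"releasing lock {lock}")
    comm.locks.clear()
    for name in reversed(comm.open_files):  # close in reverse order of opening
        log.append(f"closing {name}")
    comm.open_files.clear()
    return log


comm = CommunicationArea()
comm.open_files += ["database", "formsfile"]
comm.locks.append("CUSTOMER")
comm.db_status = "record not found (example)"
report = fatal_error(comm, "CUSTUPD", "DBGET failed", [])
```

Releasing locks before closing the database mirrors the order described above, so the database is never left open and locked.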

When I was a junior programmer the standard practice for building software to maintain database tables was to create a single program to handle all the Create, Read, Update and Delete (CRUD) operations. This required the program to handle several different screens and a mechanism which allowed the user to switch from one mode of operation to another. This was OK for simple tables without any relationships, but once you started to add child tables the complexity increased, as the extra operations required more code and more screens. This increase in complexity also increased the number of bugs, so after a great deal of thought I realised that the only way to remove this complexity was to take the large and complex programs which performed multiple tasks and split them into small and simple programs which performed a single task each. This idea is documented in Component Design - Large and Complex vs. Small and Simple.

As well as reducing the number of bugs by reducing the complexity, this had an added bonus later on by making it easier to introduce Access Control at the user/transaction level as the necessary checking could be done within the framework BEFORE the application program was called instead of within the application program itself AFTER it had been called.
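The benefit of checking access in the framework can be shown with a small Python sketch. The ACL contents and transaction ids are hypothetical; the point is that the application programs contain no security code because the check happens before they are called.

```python
# Hypothetical access control list: user id -> transactions that user may run.
ACL = {
    "FRED": {"CUST-LIST", "CUST-ENQ"},
    "ADMIN": {"CUST-LIST", "CUST-ENQ", "CUST-UPD"},
}


def run_transaction(user, tran_id, programs):
    """Check permission in the framework, and only then call the program."""
    if tran_id not in ACL.get(user, set()):
        return f"{user} is not allowed to run {tran_id}"
    return programs[tran_id]()


# Each "program" performs a single task and knows nothing about access control.
programs = {
    "CUST-LIST": lambda: "listing customers",
    "CUST-UPD": lambda: "updating customer",
}
```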

Originally all the error messages and function key labels were defined as strings of text, but changing that text became a tedious exercise. Fortunately the HP3000 operating system offered a facility known as a message catalog which allowed text to be defined in a disk file with an identity number, so that the program could supply a number and the system would automatically read the message catalog and convert that number into text. This became incredibly useful when one client asked us to display these messages in a different language, as all we had to do was create a different version of the message catalog for each additional language.
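A message catalog lookup reduces to a keyed search in a per-language table. This Python sketch uses hypothetical message numbers and an in-memory dictionary in place of the HP3000 catalog file, and falls back to English when a language has no catalog.

```python
# Hypothetical message catalogs, one per language, keyed by message number.
CATALOGS = {
    "en": {101: "Record not found", 102: "Record updated"},
    "fr": {101: "Enregistrement introuvable", 102: "Enregistrement mis à jour"},
}


def get_message(number, language="en"):
    """Translate a message number into text using the catalog for a language."""
    catalog = CATALOGS.get(language, CATALOGS["en"])
    return catalog.get(number, f"Message {number} not found in catalog")
```

Programs only ever refer to message 101, so adding a language means adding a catalog, not changing code.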

Up until 1985 the standard method of building a database application which contained a number of different user transactions (tasks) was to have a logon screen followed by a series of hard-coded menu screens each of which contained a list of options from which the user could select one to be activated. Each option could be either another menu or a user transaction. Due to the relatively small number of users and user transactions there was a hard-coded list of users and their current passwords, plus a hard-coded Access Control List (ACL) which identified which subset of user transactions could be accessed by each individual user. This arrangement had several problems:

The above arrangement was thrown into disarray in 1985 when, during the design of a new bespoke application for a major publishing company, the client's project manager specified a more sophisticated approach:

I spent a few hours one Sunday designing a solution. I started building it the following Monday, and by Friday it was up and running. My solution had the following attributes:

The ability to jump to any transaction from any transaction presented a series of little problems which I had to solve one by one.

The first problem was to provide a way for the system administrator to configure the jump-to points for each transaction and to make these options visible to the user. For this I created a new database table called D-OTHER-OPTIONS with a set of maintenance screens. This would cause these options to appear in the function key labels at the bottom of the screen when the parent transaction was being run. To activate another transaction all the user had to do was press the relevant function key. Note that options on this list which the user was not able to access were automatically filtered out to make them invisible and non-selectable.
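Building the function key labels from D-OTHER-OPTIONS while hiding options the user cannot run might look like this in Python. The table contents, the permission list and the limit of eight keys are all assumptions for illustration.

```python
# Hypothetical contents of D-OTHER-OPTIONS: parent transaction -> jump-to options.
OTHER_OPTIONS = {"CUST-ENQ": ["ORDER-LIST", "CUST-UPD", "INVOICE-LIST"]}

# Hypothetical permissions: user -> transactions that user may run.
PERMISSIONS = {"FRED": {"CUST-ENQ", "ORDER-LIST", "INVOICE-LIST"}}

FUNCTION_KEYS = 8  # hypothetical number of labelled function keys


def function_key_labels(user, parent_tran):
    """Return (key number, transaction) pairs for the options the user may select."""
    allowed = [
        tran for tran in OTHER_OPTIONS.get(parent_tran, [])
        if tran in PERMISSIONS.get(user, set())
    ]
    return list(enumerate(allowed[:FUNCTION_KEYS], start=1))
```

Filtering happens before the labels are built, so an option the user cannot run never occupies a key.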

The second problem was memory consumption. Each subprogram had its own areas of memory which were defined in the DATA DIVISION and the PROCEDURE DIVISION, so when a new subprogram was called its memory requirements were added to those which were already in use. Unfortunately there was an upper limit to the size of each area, so if you chained too many CALLs, one after the other, you would exceed the memory limit and the program would abort. The only way to prevent this from happening was to exit the current program, thus releasing its memory, before calling the next one. This was the standard method which the user wished to avoid.

The third problem was to make this exit-then-call process invisible to the user. For this I devised a process which I called Transaction Auto Selection. This would load the identity of the selected transaction into MENU-AUTO-SELECT (part of COMMON-LINKAGE) before exiting the current transaction, thereby releasing all the memory which had been allocated to it. It would return control to the menu program which would spot a value in MENU-AUTO-SELECT which it would then activate. Easy Peasy Lemon Squeezy.
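The exit-then-call cycle can be sketched as a loop in Python. CommonLinkage and menu_auto_select are hypothetical stand-ins for COMMON-LINKAGE and MENU-AUTO-SELECT. Python does not free memory the way returning from a COBOL subprogram did, so the "released memory" here is only an analogy.

```python
class CommonLinkage:
    """Hypothetical stand-in for the shared COMMON-LINKAGE area."""

    def __init__(self):
        self.menu_auto_select = ""


def menu_loop(first_tran, programs, linkage):
    """Run transactions one at a time; each returns before the next starts.

    A transaction that wants to jump elsewhere stores the target in
    menu_auto_select and returns, so it is no longer active when the
    menu calls the next one.
    """
    history = []
    next_tran = first_tran
    while next_tran:
        linkage.menu_auto_select = ""
        history.append(next_tran)
        programs[next_tran](linkage)          # control comes back to the menu here
        next_tran = linkage.menu_auto_select  # empty means stay in the menu


    return history


def cust_enq(linkage):
    linkage.menu_auto_select = "ORDER-LIST"  # user pressed a jump-to key


def order_list(linkage):
    pass  # user pressed EXIT, so nothing is selected


programs = {"CUST-ENQ": cust_enq, "ORDER-LIST": order_list}
```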

The fourth problem was that after running a new transaction the user would normally like to return to the previous transaction and have it resume from where it left off. In order to provide the identity of the previous transaction I had to maintain a pseudo hierarchy, a list of call statements, where the current transaction was always at the end of the list. New transactions could then be appended to this list. If the PREVIOUS TRAN function key was pressed the current transaction would be dropped off the end of the list, which converted the previous transaction into the current transaction which could then be restarted. If the EXIT function key was pressed then control would be returned to the first transaction in the list.
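The pseudo hierarchy behaves like a stack. Here is a minimal Python sketch, with hypothetical method names for the jump-to, PREVIOUS TRAN and EXIT actions:

```python
class TransactionStack:
    """Pseudo hierarchy of transactions; the last entry is the current one."""

    def __init__(self, first):
        self.entries = [first]

    @property
    def current(self):
        return self.entries[-1]

    def jump_to(self, tran_id):
        """A new transaction is appended and becomes current."""
        self.entries.append(tran_id)

    def previous(self):
        """PREVIOUS TRAN: drop the current entry so the previous one resumes."""
        if len(self.entries) > 1:
            self.entries.pop()
        return self.current

    def exit(self):
        """EXIT: return control to the first transaction in the list."""
        del self.entries[1:]
        return self.current
```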

The fifth problem was when a transaction was restarted it did not automatically resume from where it left off, it started from the very beginning of its processing. To solve this I created a mechanism to Save and Restore screen contents so that before leaving the transaction it would save the pointers to the database record(s) on the current screen, and when being restarted it could rebuild the screen after reading the database using those pointers. This was possible because of my decision to have each transaction only be responsible for a single screen.
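Saving pointers rather than screen contents can be sketched in Python as follows. The dictionary standing in for the database, the record keys and the function names are hypothetical.

```python
# Hypothetical "database": record key -> record.
DATABASE = {"C001": {"name": "Acme Ltd"}, "C002": {"name": "Bloggs & Co"}}

saved_screens = {}  # transaction id -> record keys shown on its screen


def leave_transaction(tran_id, keys_on_screen):
    """Save only the pointers (keys) to the records, not the screen data itself."""
    saved_screens[tran_id] = list(keys_on_screen)


def restart_transaction(tran_id):
    """Rebuild the screen by rereading each saved key from the database."""
    keys = saved_screens.pop(tran_id, [])
    return [DATABASE[key] for key in keys if key in DATABASE]
```

Because the screen is rebuilt by rereading the database, changes made by the intervening transaction are picked up automatically. Skipping a record that was deleted in the meantime is a design choice of this sketch, not something the article specifies.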

In the first version it was necessary for each new project to combine the relocatable binaries of this new framework with the relocatable binaries of their application subprograms in order to create a single executable program file. It was also necessary to append the project's VPLUS form definitions to the same disk file used by the framework. When I tried to separate the two forms files I hit a problem as the VPLUS software was not designed to have more than one file open in the same program at the same time. The solution was to design a mechanism using two program files, one for the MENU program and a second called MENUSON for the application, with data being passed between them using an Extra Data Segment (XDS) which is a portion of shared memory. After each application program was compiled it was linked with the MENUSON component in order to create an executable program file.

This framework was built on top of a library of subroutines and utility programs which I had developed earlier. This included a COPYGEN program which produced copylib members for both the IMAGE/TurboIMAGE database and the VIEW/VPLUS forms, which helped eliminate the common coding error of altering the structure of a database table or a VIEW/VPLUS forms file and failing to update the corresponding data buffer. Calling the relevant intrinsics (system functions) for these two pieces of software was made easy by the creation of a set of subroutines for accessing VPLUS forms plus a set of macros (pre-compiler directives) for accessing the IMAGE database. All these documents are available on my COBOL page.

After that particular client project had ended, my manager, who was just as impressed with my efforts as the client, decided to make this new piece of software the company standard for all future projects as it instantly increased everyone's productivity by removing the need to write a significant amount of boilerplate code from scratch. This piece of software is documented in the following:

Another situation I was asked to deal with concerned the practice of some users of walking away from their terminals while still logged on to the application, which meant that another person could use that terminal to do something which they shouldn't. The answer was to introduce a timeout value which would simulate the pressing of the EXIT key if there was no activity on the screen within the time specified.
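The timeout logic amounts to substituting EXIT when no key has arrived within the limit. This Python sketch takes the clock reading as a parameter so it can be tested; the function name and the 300-second default are hypothetical, not values stated in the article.

```python
import time

TIMEOUT_SECONDS = 300  # hypothetical value configured by the administrator


def next_key(pending_key, last_activity, now=None, timeout=TIMEOUT_SECONDS):
    """Return the key to act on, substituting EXIT after too long idle.

    pending_key is None when the user has not pressed anything.
    last_activity and now are readings from the same monotonic clock.
    """
    now = time.monotonic() if now is None else now
    if pending_key is None and now - last_activity >= timeout:
        return "EXIT"
    return pending_key
```

Simulating the EXIT key, rather than killing the session, means the transaction shuts down through its normal path.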

This feature was later extended to include a System Shutdown facility. This allowed the system supervisor to issue a shutdown warning to all active users, and then to forcibly terminate their sessions if they had not logged off voluntarily.

Here I am referring to the movement to Reduced Instruction Set Computing (RISC), which was implemented by Hewlett-Packard in 1986 with its PA-RISC architecture. Interestingly, they allowed a single machine to compile code to run under the Complex Instruction Set Computing (CISC) architecture in what was known as "compatibility mode", or to compile it to run under the RISC architecture in "native mode". This required the use of a different COBOL compiler and a different object linking mechanism as well as changes to some function calls. As a software house we had to service clients who had not yet upgraded their hardware to PA-RISC, but we did not want to keep two versions of our software.

This is where my use of libraries of standard code came in useful - I was able to create two versions of this library, one for CISC and another for RISC, which contained the function calls which were correct for each architecture. I then created two jobstreams to compile the application, one for CISC and another for RISC, which then took the same source code and ran the relevant compiler, library and linker to produce a program file for the desired architecture. This then hid all the differences from the developers who did not have to change their source code, but gave the client the right program for their machine.
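The idea of one source feeding two toolchains can be expressed as a lookup table. All tool and library names in this Python sketch are hypothetical placeholders; the actual HP compilers and linkers are not named in this article.

```python
# Hypothetical toolchains: each architecture has its own compiler, library and linker.
TOOLCHAINS = {
    "CISC": {"compiler": "cobol-cm", "library": "STDLIB.CISC", "linker": "link-cm"},
    "RISC": {"compiler": "cobol-nm", "library": "STDLIB.RISC", "linker": "link-nm"},
}


def build_steps(program, architecture):
    """List the steps a jobstream would run; the source itself never changes."""
    tools = TOOLCHAINS[architecture]
    return [
        f"{tools['compiler']} {program}",
        f"{tools['linker']} {program} with {tools['library']}",
    ]
```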

More details can be found in The 80-20 rule of Simplicity vs Complexity.

While everybody else regarded this issue as a "bug" we developers saw it as an "enhancement" to a method that had worked well for several decades but which needed to be changed because of hardware considerations.

The origin of this issue was the fact that in the early days of computing hardware was incredibly expensive while programmers were relatively cheap. When I started my computing career in the 1970s I worked on UNIVAC mainframe computers which cost in excess of £1 million each, so we had to use as few bytes as possible to store each piece of data. Dates were therefore usually stored in DDMMYY format, taking up 6 bytes, where the century was always assumed to be "19". It was also assumed that the entire system would become obsolete and be rewritten before the century changed to "20".

In the 1980s while working with HP3000 minicomputers we followed the same convention, but as storing values in DDMMYY format made it tricky to perform date comparisons I made the decision, as team leader and database designer, to change the storage format to YYMMDD. The IMAGE database did not have an SQL interface, so instead of being able to sort records by date when they were selected we had to ensure that they were sorted by date when they were inserted. This required defining the date field as a sort field in the database schema.
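Why YYMMDD helps: when dates are stored as digit strings, only a year-first layout sorts chronologically. A short Python check with arbitrary example dates:

```python
# 15 Mar 85, 2 Jan 86, 31 Dec 84 in DDMMYY form.
dates_ddmmyy = ["150385", "020186", "311284"]


def ddmmyy_to_yymmdd(date):
    """Reorder the digits so the year comes first."""
    return date[4:6] + date[2:4] + date[0:2]


print(sorted(dates_ddmmyy))                               # day order, not date order
print(sorted(ddmmyy_to_yymmdd(d) for d in dates_ddmmyy))  # chronological order
```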

Instead of storing YYMMDD dates using 6 bytes I thought it would be a good idea, as dates were always numbers, to store them as 4-byte integers, thus saving 2 bytes per date. That may not sound like much, but saving 2 bytes per record on a very large table where each megabyte of storage cost a month's salary was a significant saving. This is where I hit a problem - the database would not accept a signed integer as a sort field as the location of the sign bit would make negative numbers appear larger than positive numbers. This problem quickly disappeared when a colleague pointed out that instead of using the datatype "I" for a signed integer I could switch to "J" for an unsigned integer. The maximum value of this field (9 digits) also allowed dates to be stored using 8 digits in CCYYMMDD format instead of the 6 digits in YYMMDD format. As I had already supplied my developers with a series of Date Conversion macros it was then easy for me to change the code within each macro to include the following:

IF YY > 50 MOVE 19 TO CC ELSE MOVE 20 TO CC END-IF

This worked on the premise that if the YY portion of the date was > 50 then the CC portion was 19, but as soon as it flipped from 99 to 00 then the CC portion became 20.
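The windowing rule and the 8-digit integer it produces can be sketched in Python. The function name is hypothetical; the logic follows the macro change described above. The window maps 00-50 to 2000-2050 and 51-99 to 1951-1999, so the largest value it can produce, 20501231, has 8 digits and fits within the 9-digit limit.

```python
def yymmdd_to_ccyymmdd(yymmdd):
    """Expand a 6-digit YYMMDD date (held as an integer) to CCYYMMDD.

    Window: YY > 50 means century 19, otherwise century 20.
    """
    yy = yymmdd // 10000
    cc = 19 if yy > 50 else 20
    return cc * 1_000_000 + yymmdd


print(yymmdd_to_ccyymmdd(991231))  # 31 Dec 1999
print(yymmdd_to_ccyymmdd(101))     # 000101, i.e. 1 Jan 2000
```

Once the century is included, plain integer comparison orders dates correctly across the 1999/2000 boundary, which is what a sort field needs.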

This meant that all my software was Y2K compliant after 1986. The only "fix" that users of my software had to install later was when the VPLUS software supplied by Hewlett Packard, which handled the screen definitions, was eventually updated to display 8-digit dates instead of 6-digit dates.

In the 1990s my employer switched to a proprietary language called UNIFACE which is a Component-based and Model-driven language. It provided a richer Graphical User Interface (GUI) which included checkboxes, dropdown lists and radio buttons which were not available in FORMSPEC.

It was based on the Three Schema Architecture with the following parts:

UNIFACE was the first language which allowed us to access a relational database using the Structured Query Language (SQL). The advantage of UNIFACE was that we did not have to write any SQL queries as they were automatically constructed and executed by the Database Driver. The disadvantage was that these queries were as simple as possible and could only access one table at a time. This meant that writing more complex queries, such as those using JOINs, was impossible unless you created an SQL View which could then be defined in the Application Model and treated as an ordinary table.

In UNIFACE you first defined entities in the built-in database known as the Application Model where an "entity" equated directly with a "database table". For each table you then defined the various fields (table columns) with their names, data types and sizes. You also identified which field(s) were in the primary key and which were in any candidate keys. You also defined any relationships between tables. The contents of the Application Model could then be exported to generate the CREATE TABLE scripts which could then be run in the DBMS to create the physical tables in the application database. Note that this did not create any foreign key constraints as all referential integrity was handled in the UNIFACE runtime.
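Exporting an entity definition as DDL can be sketched in Python. The entity description format and the function name are assumptions for illustration, not UNIFACE's export format. As described above, the generated script declares primary and candidate keys but no foreign key constraints.

```python
# Hypothetical entity definition: (field name, SQL type, part of primary key).
ENTITY = {
    "name": "product",
    "fields": [
        ("product_id", "INTEGER", True),
        ("product_code", "VARCHAR(20)", False),
        ("description", "VARCHAR(40)", False),
    ],
    "candidate_keys": [["product_code"]],
}


def create_table_sql(entity):
    """Build CREATE TABLE with primary and candidate keys but no foreign keys."""
    cols = [
        f"    {name} {sqltype} NOT NULL" if is_pk else f"    {name} {sqltype}"
        for name, sqltype, is_pk in entity["fields"]
    ]
    pk_cols = ", ".join(name for name, _, is_pk in entity["fields"] if is_pk)
    cols.append(f"    PRIMARY KEY ({pk_cols})")
    for key in entity["candidate_keys"]:
        cols.append(f"    UNIQUE ({', '.join(key)})")
    return f"CREATE TABLE {entity['name']} (\n" + ",\n".join(cols) + "\n);"


print(create_table_sql(ENTITY))
```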

Note that I reversed this process in my subsequent PHP implementation - I built the physical database first, then imported each table's structure into my own version of the Application Model which I called a Data Dictionary. Within this Data Dictionary I could edit the details to provide more information, after which I could export them to a disk file called a table structure file which was loaded into each table object using standard code in the constructor of each concrete table class.
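A minimal Python sketch of loading exported structure in a shared constructor. The RADICORE framework itself is written in PHP and its actual file format is not shown here, so TABLE_STRUCTURES, fieldspec, primary_key and the validation rule are illustrative assumptions. In practice the structure would be read from the exported file rather than held in a dictionary.

```python
# Hypothetical exported structure, one entry per table.
TABLE_STRUCTURES = {
    "customer": {
        "fieldspec": {
            "cust_id": {"type": "integer", "pkey": True},
            "cust_name": {"type": "string", "size": 40, "required": True},
        },
        "primary_key": ["cust_id"],
    },
}


class GenericTable:
    """Shared table class; concrete subclasses only supply a table name."""

    table_name = None

    def __init__(self):
        # Standard code in the constructor loads the exported structure.
        structure = TABLE_STRUCTURES[self.table_name]
        self.fieldspec = structure["fieldspec"]
        self.primary_key = structure["primary_key"]

    def validate(self, row):
        """Return error messages for missing required fields or oversize strings."""
        errors = []
        for name, spec in self.fieldspec.items():
            value = row.get(name)
            if spec.get("required") and not value:
                errors.append(f"{name} is required")
            elif value is not None and "size" in spec and len(str(value)) > spec["size"]:
                errors.append(f"{name} exceeds {spec['size']} characters")
        return errors


class Customer(GenericTable):
    table_name = "customer"
```

Because the structure is data rather than code, regenerating the file after a database change updates the table object without editing the class.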

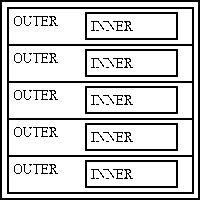

To create an online form you used the Graphical Form Painter (GFP), which was built into the Uniface Development Environment (UDE), to create form/report components which identified which entities and fields you wished to access. When using the GFP the whole screen is your canvas onto which you paint rectangles called frames, either an entity frame or a field frame. You then associate each frame with an object in the Application Model starting with an entity as shown in Figure 1. Inside each entity frame you can either paint a field from that entity or another entity frame for a different table, as shown in Figure 2. If you construct a hierarchy of entities within entities this will cause UNIFACE, when retrieving data, to start with the OUTER entity then, for each occurrence of that entity, use the relationship details as defined in the Application Model to retrieve associated data from the INNER entity. These two entities can be painted with the Parent entity first, as shown in Figure 3, or the Child entity first, as shown in Figure 4. After painting all the necessary entity and field frames the developer can then insert proc code into any of the entity or field triggers in order to add business logic. Default proc code which had been defined in the Application Model could then be either inherited or overridden in any form component.

| Figure | What it shows |
|---|---|
| Figure 1 | This will show the data for a single occurrence. |
| Figure 2 | This will show the data for multiple occurrences. |
| Figure 3 | This shows two entities in a parent-child relationship. There is one OUTER occurrence of the parent and multiple INNER occurrences of the child. This will require two SQL queries, one for the OUTER and one for the INNER. |
| Figure 4 | This shows two entities in a parent-child relationship. There are multiple OUTER occurrences of the child and a single INNER occurrence of each parent. Note that each INNER could be a different row. With UNIFACE each entity would have to be read separately, which leads to the N+1 problem. With PHP the two tables could be read in a single query on the OUTER table which includes a JOIN to the INNER table. |
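The single-query alternative to the N+1 approach for Figure 4 can be sketched as follows. The sketch assumes the relationship details are held in a plain array, which stands in for the Application Model or Data Dictionary. The function `buildJoinQuery()` and the names `order_item`, `product`, `product_id` and `product_name` are invented for illustration.

```php
<?php
// Build one SELECT that reads the OUTER (child) table and JOINs each row's
// INNER (parent) row, instead of one extra query per child row (N+1).
// The $relationship layout is a hypothetical stand-in for dictionary data.
function buildJoinQuery(array $relationship): string
{
    $child  = $relationship['child'];
    $parent = $relationship['parent'];

    // ON clause: match each child foreign key column to the parent primary key column
    $on = [];
    foreach ($relationship['fields'] as $fk => $pk) {
        $on[] = "$parent.$pk = $child.$fk";
    }

    // select every child column plus the chosen parent columns
    $columns = ["$child.*"];
    foreach ($relationship['parent_fields'] as $field) {
        $columns[] = "$parent.$field";
    }

    return 'SELECT ' . implode(', ', $columns)
         . " FROM $child"
         . " LEFT JOIN $parent ON (" . implode(' AND ', $on) . ')';
}

$sql = buildJoinQuery([
    'child'         => 'order_item',
    'parent'        => 'product',
    'fields'        => ['product_id' => 'product_id'],   // child FK => parent PK
    'parent_fields' => ['product_name'],
]);
echo $sql, PHP_EOL;
```

A LEFT JOIN is used so that a child row whose parent is missing is still returned, just as it would be if each entity were read separately.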

Just as COBOL used FORMSPEC, UNIFACE required every form/screen to be designed and then compiled with a special piece of software, the Graphical Form Painter (GFP), which extracted data from the Application Model. This meant that each screen knew the datatype for each field on the screen, so it would not accept any invalid values. In COBOL the compiled forms were stored in a separate file, so you had to access the contents of this file by calling a number of different intrinsics (functions) from within your code. With UNIFACE you actually ran the compiled form component. This then displayed the form as it had been designed, and used icons/buttons in the menu bar to perform operations such as RETRIEVE and STORE. As the datatype of each field had been specified when the form was designed, it was impossible to input a value of the wrong type. If your code tried to overwrite any value with a value of the wrong type, the form would fail when you tried to compile it.

After I had learned the fundamentals of this new language I rebuilt my development framework. I first rebuilt the MENU database, then rebuilt the components which maintained its tables. After this I made adjustments and additions to incorporate the new features that the language offered. This is all documented in my User Guide.

I started with UNIFACE Version 5, which supported a 2-Tier Architecture with its form components (which combined both the GUI and the business rules) and its built-in database drivers. UNIFACE Version 7 provided support for the 3-Tier Architecture by moving the business rules into separate components called entity services, which then allowed a single entity service to be shared by multiple GUI components. Each entity service was built around a single entity (table) in the Application Model, which meant that each entity service dealt with a single table in the database. Code within an entity service could access other database tables by communicating with those tables' entity services. Data was transferred between the GUI component and the entity service by using XML streams. That new version of UNIFACE also introduced non-modal forms (which cannot be replicated using HTML) and component templates. There is a separate article on the component templates which I built into my UNIFACE Framework.

Whilst my early projects with UNIFACE were all client/server, in 1999 I joined a team which was developing a web-based application using recent additions to the language. Unfortunately this was a total disaster as their design was centered around all the latest buzzwords, which seemed to exclude "efficiency" and "practicality". It was so inefficient that after 6 months of prototyping it took 6 developers a total of 2 weeks to produce the first list screen and selection screen. Over time they managed to reduce this to 1 developer for 2 weeks, but as I was used to building components in hours instead of weeks I was not impressed. Neither was the client. Shortly afterwards they cancelled the entire project because they could see that it would overrun both the budget and the timescales by a HUGE margin. I wrote about this failure in UNIFACE and the N-Tier Architecture. After switching to PHP and building a framework which was designed to be practical instead of buzzword-compliant, I reduced the time taken to construct tasks from 2 weeks for 2 tasks to 5 minutes for 6 tasks.

I was very unimpressed with the way that UNIFACE produced web pages, as the HTML forms were still compiled and therefore static. When UNIFACE changed from 2-Tier to 3-Tier it used XML streams to transfer data between the Presentation and Business layers, and the more I investigated this new technology the more impressed I became. I even learned about using XSL stylesheets to transform XML documents. Although UNIFACE could perform XSL transformations, it was limited to transforming one XML document into another XML document with a different format. When I learned that XSL stylesheets could also be used to transform XML into HTML I did some experiments on my home PC and became even more impressed. I could not understand why the authors of UNIFACE chose to build web pages using a clunky mechanism when they had access to XML and XSL, which is why I wrote Using XSL and XML to generate dynamic web pages from UNIFACE.

I wrote about this earlier in My career history - Another new language.

I could see that the future lay in web applications, but I could also see that UNIFACE was nowhere near the best language for the job, so I decided to switch to something more effective. I decided to teach myself a new language in my own time on my home PC, so I searched for software which I could download and install for free. My choices quickly boiled down to either Java or PHP. After looking at sample code, which was freely available on the internet, I decided that Java was too ugly and over-complicated and that PHP was simple and concise as it had been specifically designed for writing database applications using dynamic HTML.

I did not go on a course on how to become a "proper" OO programmer (whatever that means), instead I downloaded everything I needed onto my home PC, read the PHP manual, looked through some online tutorials and bought a few books. I knew nothing about any so-called "best practices" such as the SOLID and GRASP principles, nor about Design Patterns, so I just used my 20 years of previous programming experience, my intellect and my intuition to produce the best results that I possibly could. I gauged my success on the amount of reusable code which I produced which contributed directly to my huge gain in productivity.

In order to make the transition from using a procedural language to an object oriented one I needed to understand the differences. They are similar in that they are both concerned with the writing of imperative statements which are executed in a linear fashion, but one supports encapsulation, inheritance and polymorphism while the other does not, as explained below:

| Procedural | Object Oriented |
|---|---|
| You could define data structures in a central copy library and refer to it in as many programs or subprograms as you liked. I wrote a COPYGEN program which could read the data structures from the database or the formsfile and automatically produce COPYLIB entries. | There is no built-in equivalent of a copy library. PHP does not use rigid data structures, it uses dynamic arrays which are infinitely flexible. |
| You could put reusable code into a subprogram, with its separate DATA DIVISION and PROCEDURE DIVISION, and then call that subprogram from as many places as you liked. The problem was that when the "call" ended all working storage in the DATA DIVISION was lost. | ENCAPSULATION means that you can define a class containing as many properties (data variables) and methods (functions) as you like, and then instantiate that class into as many objects as you like. After you call a method on an object, the object does not die and its internal data is not lost. You can keep calling methods to either read or update its internal data, and that data will remain available until the object dies. |
| There is no inheritance. | INHERITANCE means that after defining a class you can create another class which "extends" (inherits from) the first class. In this structure the first class is known as a "superclass" while the second is known as a "subclass". Both may contain any number of properties and methods. When the subclass is instantiated into an object the result is a combination of both the superclass and the subclass. The subclass may add its own set of extra properties and methods, or it may override (replace) those defined in the superclass. |
| There is no polymorphism. A function name can only be defined once in the entire code base, so it is not possible for the same function name to be used in multiple subprograms. This means that each function has a fixed implementation. | POLYMORPHISM means that the same method name can be defined in many classes, each with its own implementation. It is then possible to write code which calls a method on an object whose class is not known until runtime. Supplying that object from outside the calling code, instead of having the calling code create it, is known as Dependency Injection. |
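The three OO features in the right-hand column can be shown in a few lines of PHP. The `Report`, `SalesReport` and `StockReport` classes and the `printReport()` function are purely illustrative.

```php
<?php
// Illustrative classes only; the names are hypothetical.
abstract class Report
{
    // ENCAPSULATION: this data lives inside the object and survives
    // between method calls, unlike COBOL subprogram working storage.
    private array $lines = [];

    public function addLine(string $text): void
    {
        $this->lines[] = $text;
    }

    public function render(): string
    {
        // heading() is resolved at runtime against the actual subclass
        return $this->heading() . "\n" . implode("\n", $this->lines);
    }

    abstract protected function heading(): string;
}

// INHERITANCE: both subclasses reuse addLine() and render() unchanged.
class SalesReport extends Report
{
    protected function heading(): string { return 'Sales'; }
}

class StockReport extends Report
{
    protected function heading(): string { return 'Stock'; }
}

// POLYMORPHISM: this function works with any Report; the caller decides
// which concrete object it receives.
function printReport(Report $report): string
{
    return $report->render();
}

$sales = new SalesReport();
$sales->addLine('Widgets: 10');
$sales->addLine('Gadgets: 5');
echo printReport($sales), PHP_EOL;
```

`printReport()` receives its object from the caller instead of creating one itself, so the caller is injecting that dependency.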

Another major difference concerned the construction of the screens in the user interface. In my previous languages each screen had to be designed and then compiled using a separate program: FORMSPEC in COBOL and the Graphical Form Painter (GFP) in UNIFACE. PHP is simpler because the user interface is an HTML document which is not compiled. It is nothing more than a large string of text containing a mixture of HTML tags and data values. PHP has a large collection of string handling functions, and the document can be output in fragments (using the echo statement) during the script's execution. However, HTTP headers cannot be sent once any output has been sent, so this approach requires output buffering if you want to use the header() function to redirect to another script. An alternative is to use some sort of templating engine which constructs and outputs a complete HTML document as the final act before the script terminates. No special code is necessary to receive HTTP requests as the data is automatically presented in either the $_GET or the $_POST array.
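Here is a minimal sketch of the output-buffering approach. It assumes a page body that echoes HTML fragments and optionally returns a URL to redirect to. The `runPage()` helper is hypothetical. `ob_start()`, `ob_get_clean()`, `headers_sent()` and `header()` are standard PHP functions.

```php
<?php
// Hypothetical helper: run a page body that echoes HTML in fragments, but
// buffer the output so that a redirect is still possible afterwards.
function runPage(callable $body): array
{
    ob_start();                       // capture everything echoed from now on
    $redirect = $body();              // the body may return a URL to go to
    $html = ob_get_clean();           // stop buffering and collect the text

    if ($redirect !== null) {
        // provided nothing was output before ob_start(), no headers have been
        // sent yet, so header() is still allowed; the buffered text is discarded
        if (!headers_sent()) {
            header('Location: ' . $redirect);
        }
        return ['redirect' => $redirect, 'html' => ''];
    }
    return ['redirect' => null, 'html' => $html];
}

$page = runPage(function (): ?string {
    echo '<h1>Person List</h1>';
    echo '<p>3 rows</p>';
    return null;                      // no redirect: the HTML is kept
});

$moved = runPage(function (): ?string {
    echo '<p>half-built page</p>';
    return 'person_list.php';         // redirect: the HTML is discarded
});

echo $page['html'], PHP_EOL;
```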

Switching from one programming language to another means that you have to recognise and then deal with the differences. My first switch from COBOL to UNIFACE was relatively easy as they were similar, but switching to PHP was totally different. The similarities and differences are highlighted in the following table:

| | COBOL | UNIFACE | PHP |
|---|---|---|---|
| Paradigm | Procedural | Component-based | Object Oriented |
| Editor | Text | IDE | Text or IDE |
| Compiled | Yes - separately | Yes - within IDE | No - interpreted |
| Structs | Yes | Yes | No - dynamic arrays |
| Statically Typed | Yes | Yes | No - dynamic |
| Compiled screen | Yes - using FORMSPEC | Yes - using Graphical Form Painter | No - HTML |
| Database data | record (struct) | struct | dynamic arrays |
| Program data | stateful | stateful | stateless |

COBOL and UNIFACE were both compiled - with COBOL the source editing and compiling were done in separate programs, while with UNIFACE they were both done within the Uniface Development Environment (UDE).

COBOL and UNIFACE both used screens which were designed using special software and then compiled. At run time the compiled screen was referenced, and the data was sent back and forth using a struct (also known as records or composite data types). This caused them to be statically typed as each field's type had to be defined within the struct, and this also meant that no field could be overwritten with a value of the wrong type, nor could its type be changed.

COBOL and UNIFACE both used structs for reading database data.

With COBOL the structs used in the program source had to be defined manually, which sometimes led to errors, while with UNIFACE each field's datatype was extracted automatically from the built-in Application Model.

The biggest differences between PHP and my earlier languages are as follows:

The most significant difference is that PHP cannot use predefined structs to transfer data between itself and the client device, or between itself and the database, because neither the HTTP nor the SQL protocol uses structs. The HTML document which is sent to the client device is nothing but one long string of text, and when a request is received from that HTML document all the values are presented in $_POST, which is an array of strings. When an SQL query is sent to the database that query is one long string of text, and when a response is returned all the values are presented in an array of strings. Arrays can therefore be regarded as dynamic structures as they can be built on the fly using values of any type.

With structs the contents are predefined and fixed, so each screen or database table requires its own structure definition. In PHP, by contrast, both the outgoing and incoming data streams are dynamic. The outgoing HTML documents and SQL queries are both strings of text containing both labels and data, while the incoming data appears as an associative array of field names and their associated values. The contents of an array do not have to be predefined, so an array is dynamic.

The type of an element in a struct cannot be changed, and any attempt to do so can be detected and reported at compile time. Every element within a PHP array, however, can have its type changed at any time, and any type mismatch can only be detected at run time. This does not (or should not) matter to PHP as it was designed so that scalars auto-convert depending on the context, thus making any manual conversion totally redundant. This behaviour is documented in RFC: Strict and weak parameter type checking.

If you look at the example of loose coupling you should see that the statement $dbobject->insertRecord($_POST); will work for any database table with whatever data is contained in the $_POST array. Note that the contents of this array will always be validated against the $fieldspec array within the object, and if any error is detected the insert will not be performed. Instead the user will be shown an error message and given the opportunity to correct the error.

Compiled languages which use predefined and static structures can be statically typed simply because external data will always be loaded into one of these predefined structures, so each data item will automatically have the type as it was defined in that structure. Any attempt to write code which tries to overwrite a data item with a value of the wrong type will fail at the compilation phase. Likewise if you use a data item as an argument on a function call, and that function expects a value of a different type then this can also be detected and rejected at the compilation phase.

PHP, on the other hand, does not use predefined and static structures as neither the HTTP nor SQL protocols support their use. Data is sent out as one long string of text, either as an HTML document or an SQL query string, and data comes in as a dynamic array where all the values are strings. Refer to $_POST and either mysql_fetch_assoc() or mysqli_fetch_row() for details. The only difference is that the database may return NULL values whereas an HTML form will return an empty string instead.

In PHP it is not necessary to convert any value from its original "string" type to another type before it can be used. This is because PHP's type system was designed from the ground up so that scalars auto-convert depending on the context. This means that if you pass a string to a function that expects an integer then PHP will use its type juggling capabilities to convert that value into the correct type. Note that this conversion will only happen within the function, the original value will not change its type. This behaviour is documented in RFC: Strict and weak parameter type checking which says:

Strict type checking is an alien concept to PHP. Type checking puts the burden of validating input on the callers of an API, instead of the API itself. Since typically functions are designed so that they're called numerous times - requiring the user to do necessary conversions on the input before calling the function is counterintuitive and inefficient. It makes much more sense, and it's also much more efficient - to move the conversions to be the responsibility of the called function instead.

The only time that type juggling would fail was if a value contained characters that were inconsistent with the expected type. Thus the string "123.45" can be coerced into a number while "10 green bottles" cannot. It is therefore incumbent on the programmer to check the validity of all user input at the earliest possible opportunity. Anyone who fails to do so has only themselves to blame. Their incompetence is not the fault of the language, it is theirs and theirs alone. All the tools are there, they just have to learn to use them.
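The coercion and the early validation described above can be sketched as follows. `addVat()` and `numericErrors()` are hypothetical helpers. The file deliberately omits `declare(strict_types=1)` so that PHP's default coercive mode applies, and `is_numeric()` is used as the validity check.

```php
<?php
// Coercive (weak) typing mode: there is deliberately no
// declare(strict_types=1) at the top of this file.

// The parameter is declared float, but a numeric string is accepted and
// converted inside the function only.
function addVat(float $net, float $rate = 0.2): float
{
    return round($net * (1 + $rate), 2);
}

$input = '123.45';          // values from $_POST always arrive as strings
$gross = addVat($input);    // coerced to float inside addVat()
// $input itself is still a string here

// Validate user input as early as possible: is_numeric() accepts
// '123.45' but rejects '10 green bottles'.
function numericErrors(array $fieldarray): array
{
    $errors = [];
    foreach ($fieldarray as $field => $value) {
        if (!is_numeric($value)) {
            $errors[$field] = 'Value must be numeric';
        }
    }
    return $errors;
}

$errors = numericErrors(['price' => '123.45', 'quantity' => '10 green bottles']);
```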

This automatic coercion worked well in PHP up until version 8.1 when the core developers introduced a massive BC (Backwards Compatibility) break by deprecating the passing of NULL to parameters of any internal functions. Their argument for this BC break was given in PHP RFC: Deprecate passing null to non-nullable arguments of internal functions as:

Internal functions (defined by PHP or PHP extensions) currently silently accept null values for non-nullable arguments in coercive typing mode. This is contrary to the behavior of user-defined functions, which only accept null for nullable arguments. This RFC aims to resolve this inconsistency.

What these morons had failed to consider was that a change in version 7.1 allowed any argument in a user-defined function to be marked as nullable, thus aligning them with the behaviour of internal functions. The core developers then had the choice of two options:

One of these options creates a massive BC break for all userland developers. The other creates a massive amount of work to update every internal function in the manual. They chose the option which creates the least amount of effort on their part but a massive amount of effort for all userland developers. This is why I wrote The PHP core developers are lazy, incompetent idiots.
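For reference, the following shows a nullable parameter declaration, which has been available since PHP 7.1. It also shows one common way to avoid the PHP 8.1 deprecation when a database NULL reaches an internal string function. `labelLength()` and the sample row are hypothetical.

```php
<?php
// Since PHP 7.1 a user-defined parameter can be marked nullable with "?".
function labelLength(?string $label): int
{
    // From PHP 8.1, strlen(null) raises a deprecation notice, so a NULL
    // read from the database is converted to an empty string first.
    return strlen($label ?? '');
}

$row = ['first_name' => 'Fred', 'middle_name' => null];   // e.g. a fetched row
$lengths = array_map('labelLength', $row);
```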

In my previous decades of experience I had designed and built multiple applications which contained numerous database tables and user transactions, so I had lots of practice at examining those components, looking for similarities as well as differences. If the similarities can be expressed as repeatable patterns, either in behaviour or structure, and those patterns can be turned into code, then it should be possible to build applications faster by not spending so much time duplicating the similarities. While it is true that every subsystem uses different data which is subject to different business rules, the software used to process that data has a large number of similarities:

Smart data structures and dumb code works a lot better than the other way around.

In a large ERP application such as the GM-X Application Suite, which comprises a number of subsystems, each subsystem has a unique set of attributes:

Despite the fact that these two areas are completely different for each subsystem, they each have their own patterns and so can be handled using standard reusable code provided by the framework:

Note that each user transaction can be generated from a function within the Data Dictionary by combining a table class (Model) with a Transaction Pattern, which combines a particular Controller with a particular View.

I started by building a small prototype application as a proof of concept (PoC). This used a small database with just a few tables in various relationships - one-to-many, many-to-many, and a recursive tree structure. I then set about writing the code to maintain the contents of these tables. As a follower of the KISS principle I started with the basic functionality and added code to deal with any complexities as and when they arose.

For the first database table I created a single Model class, without using any inheritance, which contained the following:

insertProduct() and insertOrder(). It was obvious to me that unique method names could not be shared and reused whereas common method names could. Each of these methods was broken down into a series of separate steps, as shown in common table methods. Take this array of data items and build me a query.

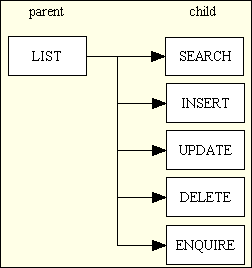

I then created a set of scripts for the Presentation layer, each of which performed a single task, just as I had done in my COBOL days. This resulted in a group of scripts such as those shown in Figure 5.

Figure 5 - A typical Family of Forms

In this arrangement the parent screen can only be selected from the menu bar while the child screens can only be selected from the navigation bar which is specific to that parent.

Each of these scripts is a separate Controller which performs a single task (user transaction). They access the same Model, but each calls a different combination of the common table methods, plus any local "hook" methods, in order to achieve the desired result. Each can only produce a single output (View) which is returned to the user.

As I had already decided to build all HTML documents using XSL transformations I built a View using a group of functions to carry out the following at the very end of the script after all the processing in the Model(s) had been completed:

In my prototype these were available as a collection of separate functions, but later on I turned them into methods within a View object.

I hit a problem when I converted the contents of the $_POST array into an SQL INSERT query. The database did not recognise the entry for the submit button as a valid column name, which caused the query to fail. I needed to edit the contents of this array to remove any names which did not exist in that table. I got around this problem initially by creating a class property called $fieldlist, which I manually populated in the class constructor with a list of valid column names. I then modified the code which built the SQL query to filter out anything in $fieldarray which did not also exist in the $fieldlist array.
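The filtering step can be sketched as follows, assuming `$fieldlist` is a plain list of column names. `buildInsert()` is a hypothetical helper. `addslashes()` is used only to keep the example self-contained and is not a substitute for proper escaping or prepared statements.

```php
<?php
// Hypothetical helper: keep only genuine column names, then build the query.
function buildInsert(string $table, array $fieldarray, array $fieldlist): string
{
    // drop anything (such as the submit button) that is not in $fieldlist
    $data = array_intersect_key($fieldarray, array_flip($fieldlist));

    // addslashes() keeps this sketch self-contained; real code should use
    // mysqli_real_escape_string() or a prepared statement instead
    $values = array_map(
        fn ($value) => "'" . addslashes((string) $value) . "'",
        $data
    );

    return "INSERT INTO $table (" . implode(', ', array_keys($data)) . ')'
         . ' VALUES (' . implode(', ', $values) . ')';
}

$_POST = ['person_id' => 'P001', 'first_name' => 'Fred', 'submit' => 'Save'];
$sql = buildInsert('person', $_POST, ['person_id', 'first_name', 'last_name']);
echo $sql, PHP_EOL;
```

Only keys present in both arrays survive, so a column listed in `$fieldlist` but absent from the form (here `last_name`) is simply left out of the query.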

I then realised that if I did not validate the contents of $fieldarray before passing it to the query builder, the query would fail whenever a column's value did not match that column's specifications. I started to manually insert code to validate each column one at a time, but then I realised that columns with the same data type required the same validation code and the same error message. Instead of repeating this similar code over and over again I decided to replace the $fieldlist array, which was just a list of field names, with the $fieldspec array, which is a list of field names and their specifications. This then allowed me to create a standard validation object which required two input variables - $fieldarray and $fieldspec. The output from this object is an array of error messages, with the field/column name as the key and the message as the value. An empty array means no errors, but there could be numerous error messages for several fields.
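A standard validation object of the kind described might look like the following sketch. The `Validator` class and the `type`, `size` and `required` keys are assumptions made for illustration and are not the framework's actual `$fieldspec` format.

```php
<?php
// Hypothetical validator; the $fieldspec keys used here are assumptions.
class Validator
{
    public function validate(array $fieldarray, array $fieldspec): array
    {
        $errors = [];
        foreach ($fieldspec as $field => $spec) {
            $value = (string) ($fieldarray[$field] ?? '');
            if ($value === '') {
                if (!empty($spec['required'])) {
                    $errors[$field] = 'This field is required';
                }
                continue;                          // nothing else to check
            }
            // the same check (and message) is reused for every column of a type
            switch ($spec['type'] ?? 'string') {
                case 'integer':
                    if (filter_var($value, FILTER_VALIDATE_INT) === false) {
                        $errors[$field] = 'Value is not an integer';
                    }
                    break;
                case 'string':
                    if (isset($spec['size']) && strlen($value) > $spec['size']) {
                        $errors[$field] = "Value exceeds {$spec['size']} characters";
                    }
                    break;
            }
        }
        return $errors;                            // empty array = no errors
    }
}

$fieldspec = [
    'person_id' => ['type' => 'string', 'size' => 8, 'required' => true],
    'age'       => ['type' => 'integer'],
];
$errors = (new Validator())->validate(['person_id' => 'TOOLONG123', 'age' => '4x'], $fieldspec);
```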

After finishing the code for the first table I then created the code for the second table. I did this by copying the code and then changing all the table references, but this still left a large amount of code which was duplicated. In order to deal with this I created an abstract class which I then inherited from each table class. I then moved all the code which was duplicated from each table class into the abstract class, and when I had finished each class contained nothing but a constructor as all processing was inherited from the abstract class.

Note that what I did *NOT* do was to inherit from the first concrete class for table#1 to create another concrete class for table#2. Why not? Because I could see that class#1 contained information that needed to be changed in class#2, that information being the table's structure. I also knew that if I added some unique processing into class#1 which I did not want to be shared with other classes, then it would create problems. This is precisely why I created an abstract class which contained only those methods which I wanted to be shared. It is also why I avoided the problems encountered by other programmers which led them to say "favour composition over inheritance".
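The resulting structure can be sketched as follows: an abstract class holds the shared code, and each concrete class holds only a constructor. `GenericTable`, `Person`, `Product` and the `required` key are hypothetical names. A real `insertRecord()` would go on to pass the data to the database after validation. The same `insertRecord()` call works on an object of either class.

```php
<?php
// Hypothetical names throughout; this is not the framework's actual code.
abstract class GenericTable
{
    protected string $tablename = '';
    protected array $fieldspec = [];
    public array $errors = [];

    // Shared by every table: check required columns, then (in a real
    // implementation) hand the data to the data access object.
    public function insertRecord(array $fieldarray): bool
    {
        $this->errors = [];
        foreach ($this->fieldspec as $field => $spec) {
            if (!empty($spec['required']) && ($fieldarray[$field] ?? '') === '') {
                $this->errors[$field] = "{$this->tablename}.{$field} is required";
            }
        }
        return empty($this->errors);    // false means the insert is skipped
    }
}

// Each concrete class holds only what differs: the table's structure.
class Person extends GenericTable
{
    public function __construct()
    {
        $this->tablename = 'person';
        $this->fieldspec = [
            'person_id'  => ['required' => true],
            'first_name' => [],
        ];
    }
}

class Product extends GenericTable
{
    public function __construct()
    {
        $this->tablename = 'product';
        $this->fieldspec = [
            'product_id' => ['required' => true],
            'price'      => [],
        ];
    }
}

$product = new Product();
$ok = $product->insertRecord(['price' => '9.99']);   // product_id is missing
```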

Years later I discovered that this approach had already been recommended in a paper called Designing Reusable Classes, published as long ago as 1988 by Ralph E. Johnson & Brian Foote, which can be summarised as follows:

Abstraction is the act of separating the abstract from the concrete, the similar from the different. An abstraction is usually discovered by generalizing from a number of concrete examples. This then permits a style of programming called programming-by-difference in which the similar protocols can be moved to an abstract class and the differences can be isolated in concrete subclasses.

I discuss this paper in more detail in The meaning of "abstraction".

I still had duplicate Controller scripts, but I noticed that the only difference between them was the hard-coded table (Model) name. I quickly discovered that I could replace the following code:

```php
require "classes/foobar.class.inc";
$dbobject = new foobar;
```

with the following alternative:

```php
require "classes/$table_id.class.inc";
$dbobject = new $table_id;
```

This then enabled me to supply the missing information from a separate component script which resembles the following:

```php
<?php
$table_id = "person";                     // identify the Model
$screen   = 'person.detail.screen.inc';   // identify the View
require 'std.enquire1.inc';               // activate the Controller
?>
```

Each task in the application has its own version of this script. The View component is a screen structure script.
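Putting the two pieces together, a generic controller that instantiates whichever Model it is told to could look like the following sketch. To keep it self-contained, the `person` class is declared inline instead of being loaded with `require`. `enquire1()` and `getData()` are hypothetical stand-ins for the real controller and Model method.

```php
<?php
// Stand-in Model class; in the framework it would be loaded with
// require "classes/$table_id.class.inc".
class person
{
    // hypothetical read method; the selection criteria are ignored here
    public function getData(string $where): array
    {
        return [['person_id' => 'P001', 'first_name' => 'Fred']];
    }
}

// Hypothetical generic controller: it works for any Model class name.
function enquire1(string $table_id, string $screen): string
{
    if (!class_exists($table_id)) {
        throw new InvalidArgumentException("Unknown table: $table_id");
    }
    $dbobject = new $table_id;                 // class chosen at runtime
    $where = "person_id='P001'";               // normally passed in from the previous screen
    $rows = $dbobject->getData($where);
    // a real controller would now pass $dbobject and $screen to the View
    return sprintf('%s: %d row(s) from %s', $screen, count($rows), get_class($dbobject));
}

// What a component script supplies: just the two names.
$table_id = 'person';
$screen   = 'person.detail.screen.inc';
echo enquire1($table_id, $screen), PHP_EOL;
```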

While the framework can take care of all standard processing, there will always be times when you want to perform some additional processing or data validation that cannot be performed automatically. The standard processing flow is handled by the methods in the abstract table class, so what is needed is a mechanism where you can say "when you get to this point in the processing flow I want you to execute this code". This is where my use of an abstract table class provided a simple and elegant solution. My experiments with inheritance had already proved to me that when you inherit from one class (the superclass) into another (the subclass) the resulting object will contain the methods from both classes. The method in the superclass will be executed unless you override it in the subclass. This means that at certain points in the processing flow I can call a method which is defined in the superclass but which does nothing. If I want to, I can copy that method into my subclass and insert whatever code is necessary. At runtime the method in the subclass, which does something, then replaces the method in the superclass, which does nothing. To make such methods easy to identify I gave them a "_cm_" prefix, which stands for customisable method. Some of them also include "pre_" or "post_" in the prefix to identify that they are executed either before or after the standard method of that name. Some examples can be found at How do you define 'secondary' validation?
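The following minimal sketch shows this arrangement, which is the Template Method pattern with empty hook methods. The method names follow the `_cm_` convention described above, but the class names and the exact signatures are assumptions.

```php
<?php
abstract class DefaultTable
{
    public array $errors = [];

    // Template method: the fixed sequence of steps for every table.
    public function insertRecord(array $fieldarray): array
    {
        $fieldarray   = $this->_cm_pre_insertRecord($fieldarray);
        $this->errors = $this->_cm_commonValidation($fieldarray);
        if (!empty($this->errors)) {
            return $fieldarray;                 // insert is not performed
        }
        // ... the database insert would go here ...
        return $this->_cm_post_insertRecord($fieldarray);
    }

    // Hook methods: they do nothing unless a subclass overrides them.
    protected function _cm_pre_insertRecord(array $fieldarray): array
    {
        return $fieldarray;
    }

    protected function _cm_commonValidation(array $fieldarray): array
    {
        return [];
    }

    protected function _cm_post_insertRecord(array $fieldarray): array
    {
        return $fieldarray;
    }
}

// A concrete table class overrides only the hook it needs.
class OrderItem extends DefaultTable
{
    protected function _cm_commonValidation(array $fieldarray): array
    {
        if (($fieldarray['quantity'] ?? 0) <= 0) {
            return ['quantity' => 'Quantity must be greater than zero'];
        }
        return [];
    }
}

$item = new OrderItem();
$item->insertRecord(['quantity' => '0']);
```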

It wasn't until many years later that I discovered that what I had done was known as the Template Method Pattern and that my customisable methods were actually called "hook" methods.

In a relational database it is highly likely that some tables will be related to other tables in what is known as a one-to-many or parent-child relationship. This relationship is identified by having a foreign key on the child table which points to the primary key of an entry on the parent table. An entry on the parent table can be related to many entries on the child table, but a child can only have a single parent. Note that a one-to-one relationship can be defined by making the foreign key the same as the child's primary key.

Where a parent-child relationship exists it is often necessary to go through the parent before being able to access its children. To deal with this situation I emulated the practice I had encountered in UNIFACE which was to create a form component which accessed both tables as shown in Figure 3. In this structure the parent/outer entity is read first, and the relationship details which have been defined in the Application Model are used to convert the primary key of the parent into the foreign key of the child. To do this in PHP all I had to do was construct a new Controller which accessed two Model classes in the same sequence. Some programmers seem to think that a Controller can only access a single Model, or that relationships should be dealt with using Object Associations, but as I had never heard of these "rules" I did what I thought was the easiest and most practical.

The same 2-Model Controller, as described in the LIST2 pattern, can also be used when you have a hierarchy of parent-child relationships. You never create a single task or a single object to manage the entire hierarchy. Instead you have a separate task to deal with each individual parent-child relationship. It is the Controller for that task that manages the communication between the parent and child entities, not any code within those entities. To navigate up and down the hierarchy you can start with the task which manages the pair of related tables at the top. After selecting a child row on that screen, you press a navigation button which activates a different task, which shows that child row as the parent along with a set of child rows from a different table.
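A two-Model controller of this kind can be sketched as follows. The `InMemoryTable` class stands in for real table objects and the database. `list2()` and the relationship array (parent primary key column => child foreign key column) are hypothetical. They only illustrate how the Controller converts the parent's primary key into the child's selection criteria.

```php
<?php
// Hypothetical stand-in for a table object backed by a database.
class InMemoryTable
{
    private array $rows;

    public function __construct(array $rows)
    {
        $this->rows = $rows;
    }

    // return every row whose columns match all of the $where values
    public function getData(array $where): array
    {
        return array_values(array_filter(
            $this->rows,
            fn (array $row): bool => array_intersect_assoc($where, $row) === $where
        ));
    }
}

// Hypothetical 2-Model controller: read the parent, turn its primary key
// into the child's foreign key values, then read the children.
function list2(InMemoryTable $parent, InMemoryTable $child, array $parentKey, array $relationship): array
{
    $parentRows = $parent->getData($parentKey);
    if (empty($parentRows)) {
        return ['parent' => null, 'children' => []];
    }
    $childWhere = [];
    foreach ($relationship as $pk => $fk) {
        $childWhere[$fk] = $parentRows[0][$pk];
    }
    return ['parent' => $parentRows[0], 'children' => $child->getData($childWhere)];
}

$customers = new InMemoryTable([
    ['customer_id' => 'C1', 'name' => 'Acme'],
    ['customer_id' => 'C2', 'name' => 'Bolt'],
]);
$orders = new InMemoryTable([
    ['order_id' => 1, 'customer_id' => 'C1'],
    ['order_id' => 2, 'customer_id' => 'C2'],
    ['order_id' => 3, 'customer_id' => 'C1'],
]);
$result = list2($customers, $orders, ['customer_id' => 'C1'], ['customer_id' => 'customer_id']);
```

Neither table object knows about the other. The relationship is applied entirely in the Controller.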

This prototype application, which I published in November 2003, had only a small number of database tables with a selection of different relationships, but the code that I produced showed how easy it was to maintain the contents of these tables using HTML forms. Although it had no logon screen, no dynamic menus and no access control, it did include code to test the pagination and scrolling mechanism, and the mechanism of passing control from one script to another and then back again.

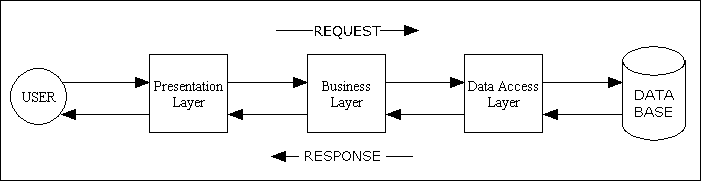

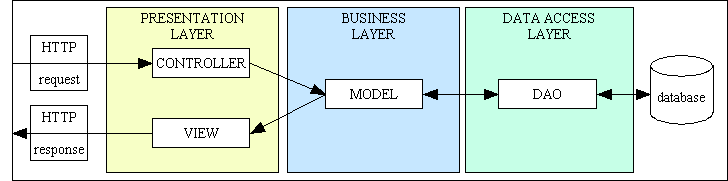

As I had seen the benefits of the 3 Tier Architecture during my work with UNIFACE I decided to adopt the same architecture for PHP. This was made easy as programming with objects automatically forces the use of two separate objects - one containing methods and another to call those methods. This architecture has all database access carried out in a separate object which contains APIs for a specific DBMS, as shown in Figure 6 below.

Figure 6 - Requests and Responses in the 3 Tier Architecture

This allows, in theory, an object in any one of the layers to be swapped with a similar object with a different implementation without having an effect on any of the objects in the other layers. For example, the object in the Data Access layer can be swapped with another in order to access a different DBMS. This came in useful when MySQL version 4.1 was released as it had the option of using a different set of "improved" APIs. Some programmers claim that this functionality is rarely used because once an organisation has chosen a DBMS it is unlikely to switch to an alternative. These people are short sighted as they are not considering the phrase "once chosen". My framework is used by others to build their own applications, so I allow them to choose which DBMS they would prefer to use before they start building their application.
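Swapping the Data Access object can be sketched with a small factory, assuming each DBMS has its own class implementing a common interface. The interface, the class names and the `$dbms` values are hypothetical. A real implementation would wrap the relevant database extension, such as mysqli, rather than just build SQL strings.

```php
<?php
// Hypothetical interface shared by every Data Access class.
interface DataAccess
{
    public function selectSql(string $table, int $limit): string;
}

// One class per DBMS; only the SQL dialect differs in this sketch.
class MySqlAccess implements DataAccess
{
    public function selectSql(string $table, int $limit): string
    {
        return "SELECT * FROM `$table` LIMIT $limit";
    }
}

class SqlServerAccess implements DataAccess
{
    public function selectSql(string $table, int $limit): string
    {
        return "SELECT TOP $limit * FROM [$table]";
    }
}

// The Business layer asks for a data access object by DBMS name, typically
// read from a configuration file, and never names a concrete class itself.
function getDataAccess(string $dbms): DataAccess
{
    $classes = ['mysql' => MySqlAccess::class, 'sqlsrv' => SqlServerAccess::class];
    if (!isset($classes[$dbms])) {
        throw new InvalidArgumentException("Unsupported DBMS: $dbms");
    }
    $class = $classes[$dbms];
    return new $class();
}

$dao = getDataAccess('mysql');   // change this one setting to switch DBMS
echo $dao->selectSql('person', 10), PHP_EOL;
```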

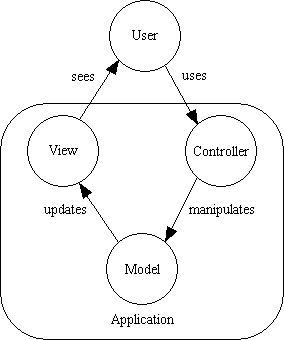

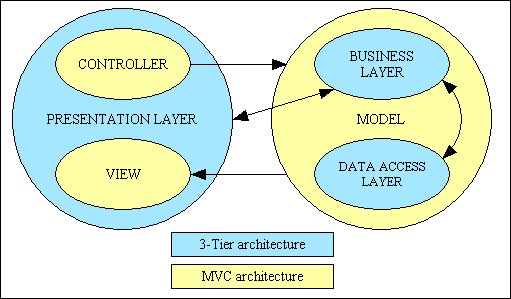

I later discovered that as a consequence of splitting my Presentation layer into two separate objects - a Controller and a View - I had in fact implemented a version of the Model-View-Controller (MVC) design pattern. This uses the structure shown in Figure 7 below:

Figure 7 - The basic MVC relationship

Note that in my implementation the Model does not send its changes to the View. When the Model(s) have finished their processing the Controller injects them into the View which then sucks out the data using a single call to the getFieldArray() method. The View will then rebuild its output entirely from scratch.
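The pull-style arrangement can be sketched as follows. The Controller hands the finished Model to the View, which makes one `getFieldArray()` call and builds a fresh XML document, ready for an XSL transformation into HTML. `PersonModel` and `XmlView` are hypothetical. `DOMDocument` comes from PHP's standard dom extension.

```php
<?php
// Hypothetical Model: only getFieldArray() matters to the View.
class PersonModel
{
    private array $rows = [['person_id' => 'P001', 'first_name' => 'Fred']];

    public function getFieldArray(): array
    {
        return $this->rows;
    }
}

// Hypothetical View: rebuilds its whole output from scratch on each call.
class XmlView
{
    public function render(string $name, object $model): string
    {
        $doc  = new DOMDocument('1.0', 'UTF-8');
        $root = $doc->appendChild($doc->createElement('root'));
        foreach ($model->getFieldArray() as $row) {        // single data pull
            $rowNode = $root->appendChild($doc->createElement($name));
            foreach ($row as $field => $value) {
                $node = $doc->createElement($field);
                $node->appendChild($doc->createTextNode((string) $value));  // escapes text
                $rowNode->appendChild($node);
            }
        }
        return $doc->saveXML($root);
    }
}

// Controller side: inject the finished Model into the View.
$xml = (new XmlView())->render('person', new PersonModel());
echo $xml, PHP_EOL;
```

The XSL step is omitted here because it needs PHP's optional xsl extension.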

This combination of the two architectural patterns causes one to overlap with the other, as shown in Figure 8 below:

Figure 8 - The MVC and 3-Tier architectures combined

An alternative diagram which shows the same information in a different way is shown in Figure 9 below:

Figure 9 - MVC plus 3 Tier Architecture combined


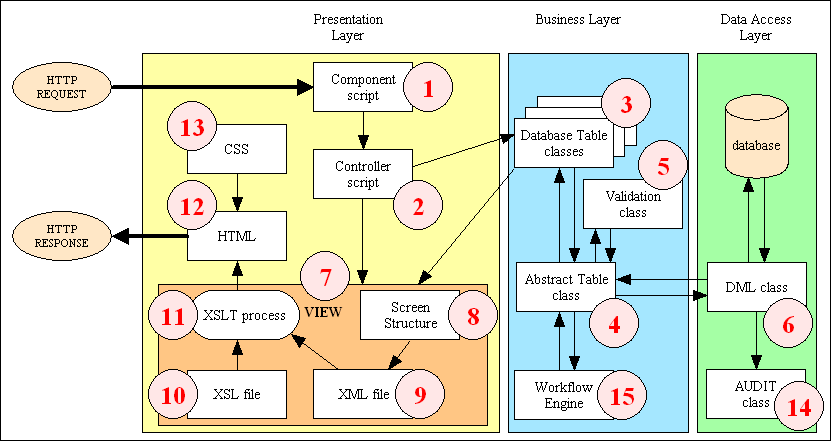

A more detailed diagram which includes all the software components is shown in Figure 10.

Figure 10 - Environment/Infrastructure Overview


My prototype application was very basic: it allowed the user unrestricted access to all of its components, and it was missing the following:

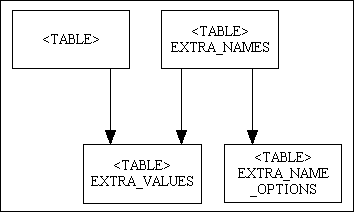

My next step was to take my old MENU database and construct a new version using MySQL. This started off with just the following tables:

I then built or modified the following components:

Following on from my previous experience I knew that a system is actually made up of a number of subsystems which should be integrated as much as possible so that they can share data. For example, you don't want a CUSTOMERS table in the ORDER subsystem and separate CUSTOMERS tables in the INVOICE and SHIPMENT subsystems; it would be much more efficient to store the data once so that it can be shared instead of being duplicated. Rather than have the components for each subsystem all mixed together in the same place, I decided to keep them as separate as possible so that I could add (or even remove) subsystems without having too much effect on other subsystems. To accomplish this I decided on the following:

In this way it is possible to create a new subsystem on one machine, zip up the contents of its subsystem directory, copy that zip file to another machine with a RADICORE installation, then import the details into that installation so that it can be run on that second machine. This facility is demonstrated on the Prototype Applications page which allows a collection of sample applications to be loaded into any RADICORE installation.

While working on the prototype, and later the framework, I encountered several problems which I eliminated with a little refactoring.

To mirror the message catalog which I created in my COBOL days I created files to hold text in different languages which are accessed using the getLanguageText() and getLanguageArray() functions. These are now part of my Internationalisation feature.
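A message catalog of this kind can be sketched as below. The hypothetical `lookupText()` only illustrates the idea behind `getLanguageText()`. Its signature, the catalog layout and the text ids are assumptions, not the framework's actual format.

```php
<?php
// Minimal sketch of a message-catalog lookup. The catalog layout, the text
// ids and this function's signature are assumptions for illustration; in
// practice each language would be held in its own file.
function languageCatalog(): array
{
    return [
        'en' => [
            'sys0001' => 'Record not found',
            'sys0002' => 'Value for %s must not exceed %d characters',
        ],
        'fr' => [
            'sys0001' => 'Enregistrement introuvable',
            'sys0002' => 'La valeur de %s ne doit pas dépasser %d caractères',
        ],
    ];
}

// Look up a text by id in the requested language, fall back to English,
// then substitute any arguments into the placeholders.
function lookupText(string $id, string $lang, mixed ...$args): string
{
    $catalog = languageCatalog();
    $text = $catalog[$lang][$id]
        ?? $catalog['en'][$id]
        ?? "<<$id>>";  // make a missing entry visible rather than failing silently
    return vsprintf($text, $args);
}
```

Falling back to English means a missing translation degrades to readable text instead of an error. Note that in PHP 8 `vsprintf()` throws a `ValueError` if a text has more placeholders than the arguments supplied.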

To mirror the error handler which I created in my COBOL days I created a version for PHP.
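PHP lets a script route errors to its own function with `set_error_handler()`. The sketch below assumes that the goal is to record every recoverable error in one place. The `ErrorHandler` class and its message format are hypothetical and not taken from the framework.

```php
<?php
// Minimal sketch of a central error handler, assuming the aim is to record
// every recoverable PHP error in one place with enough context to diagnose it.
// The class name, the message format and the in-memory log are illustrative.
final class ErrorHandler
{
    /** @var string[] */
    public static array $log = [];

    public static function handle(int $errno, string $errstr, string $errfile, int $errline): bool
    {
        self::$log[] = sprintf('[%d] %s in %s on line %d', $errno, $errstr, basename($errfile), $errline);
        // A real handler would also write to a log file and, for serious
        // errors, show a friendly error screen instead of continuing.
        return true; // true = do not fall through to PHP's standard handler
    }
}

set_error_handler([ErrorHandler::class, 'handle']);
```

`set_error_handler()` cannot intercept fatal errors such as `E_ERROR` or `E_PARSE`, so a fuller handler would typically also register a shutdown function to catch those.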

Instead of being forced to use a separate screen for entering search criteria I added a QuickSearch area into the title area of all LIST screens.
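The text does not say how a QuickSearch entry becomes a database query, so the following is only a hypothetical sketch. It assumes that the user picks a field from a list of searchable columns and may type `*` as a wildcard. It returns a parameterised `WHERE` fragment plus its values rather than splicing user input into SQL.

```php
<?php
// Hypothetical sketch: turn a QuickSearch field and value into a parameterised
// WHERE fragment. The '*' wildcard convention and the whitelist are assumptions.
function buildQuickSearch(string $field, string $value, array $searchable): array
{
    // Only columns offered in the QuickSearch list may be searched, which also
    // makes it safe to place $field directly into the SQL text.
    if (!in_array($field, $searchable, true)) {
        throw new InvalidArgumentException("Field '$field' is not searchable");
    }
    $value = trim($value);
    if (str_contains($value, '*')) {
        // Escape LIKE metacharacters typed by the user, then map '*' to '%'.
        $pattern = str_replace('*', '%', addcslashes($value, '%_\\'));
        return ["$field LIKE ?", [$pattern]];
    }
    return ["$field = ?", [$value]];
}
```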

As in all my software endeavours, I start by writing things out longhand, then look for repeating patterns which I can move to some sort of reusable library. Anybody who is familiar with HTML knows that there is a fixed set of input controls, each of which uses a fixed set of HTML tags, so it would be useful to output each set of tags using a reusable routine. With XSL you can define a named template which you can call using `<xsl:call-template>`. You can either define a template within the stylesheet, or you can put it in a separate file which you can `<xsl:include>`.

I started off by creating a separate XSL stylesheet for each different screen so that I could identify which element from the associated XML document went where, and with what control. After a while I found this rather tedious, so I did a little experimenting to see if I could define the structure I wanted within the XML document itself, then get the XSL stylesheet to build the output using this structure. With a little trial and error I got this to work, so my next task was to define this structure in a customisable PHP script which could then be copied into a corresponding `<structure>` element within the XML document. Originally I created each of the PHP scripts by hand, but after I built my Data Dictionary I built a utility to generate them for me.

This meant that I no longer had to manually construct a separate XSL stylesheet for each screen with its unique list of data elements as I could now supply that list in a separate file which could then be fed into a small number of reusable stylesheets. When I say "small" I mean a library of just 12 stylesheets and 8 sets of templates. I have used this small set of files to create a large ERP application with over 4,000 different screens, which represents a HUGE saving in time and effort.

This is discussed in more detail in Reusable XSL Stylesheets and Templates.
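The idea of driving a generic stylesheet from a `<structure>` element can be sketched with PHP's DOM extension. The layout of `$structure`, the element and attribute names and the `std.detail1.xsl` file name are assumptions for illustration. A reusable stylesheet would then loop over the fields with something like `<xsl:for-each select="structure/field">` instead of naming each field.

```php
<?php
// Minimal sketch, assuming the DOM extension is available (it is enabled in
// most PHP builds). The $structure layout and element names are hypothetical.
$structure = [
    'xsl_file' => 'std.detail1.xsl',
    'fields'   => [
        ['field' => 'person_id',  'label' => 'ID'],
        ['field' => 'first_name', 'label' => 'First Name'],
        ['field' => 'last_name',  'label' => 'Last Name'],
    ],
];

// Copy the screen structure into a <structure> element of the XML document.
function addStructure(DOMDocument $doc, DOMElement $root, array $structure): void
{
    $node = $doc->createElement('structure');
    $node->setAttribute('xsl_file', $structure['xsl_file']);
    foreach ($structure['fields'] as $field) {
        $item = $doc->createElement('field');
        $item->setAttribute('id', $field['field']);
        $item->setAttribute('label', $field['label']);
        $node->appendChild($item);
    }
    $root->appendChild($node);
}

$doc = new DOMDocument('1.0', 'UTF-8');
$doc->formatOutput = true;
$root = $doc->createElement('root');
$doc->appendChild($root);
// The Model's data would also be appended to $root before the transformation.
addStructure($doc, $root, $structure);
$xml = $doc->saveXML();
```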

What is a transaction? In this context the term is short for "user transaction". It identifies a task that a user may perform within an application. Some complex tasks may require the execution of several smaller tasks in a particular sequence. Some of these user transactions may include a database transaction. In the RADICORE framework a task is implemented using a combination of different components: a Controller, one or more Models, and an optional View. Each task has its own component script which identifies which combination of those components will be required to process that task.

What is a pattern? It is a theme of recurring elements, events or objects, where these elements repeat in a predictable manner. It can be a template or model which can be used to generate things or parts of a thing. In software a pattern must be capable of being defined in a piece of code which can be maintained in a central library so that the pattern can be referenced instead of being duplicated. The avoidance of duplication is expressed in the Don't Repeat Yourself (DRY) principle.

What is a Transaction Pattern? It is a method of defining a matched pair of pre-written and reusable Controllers and Views which can be linked with one or more different Models in order to provide a working transaction. This provides all the common boilerplate code to move data between the User Interface (UI) and the database, and allows custom business logic to be added to individual Model classes using "hook" methods.

Why is it different from a Design Pattern? A design pattern is nothing more than an outline description of a solution without any code to implement it. Each developer has to provide his own implementation, and it is possible to implement the same pattern many times, each with different code. A design pattern has limited scope in that it can only provide a small fragment of a complete program, so several different patterns have to be joined together in order to produce a working program. Conversely, each Transaction Pattern uses pre-written components, which makes it possible to say "combine Pattern X with Model Y to produce Transaction Z" and the end result will be a working transaction. Another difference is that Design Patterns are invisible from the outside as you cannot tell what patterns are embedded in the code when you run the software. This is not the case with Transaction Patterns: simply by observing the structure of the screen and understanding the operations that the user can perform with it you can determine which Pattern was used.

Note that there is currently only one set of components which implement these Transaction Patterns, and these are built into the RADICORE framework.

After having personally developed hundreds, if not thousands, of user transactions which performed various CRUD operations on numerous database tables using numerous different screens I began to see some patterns emerging. This caused me to examine all these components looking for similarities and differences with a view to making reusable patterns for those similarities as well as a way to isolate the differences. My investigations, which are documented in What are Transaction Patterns, led me to identify the following categories:

This can be boiled down into the following:

I noticed that different combinations of structure and behaviour were quite common, so I created a different pattern for each which is now documented in Transaction Patterns for Web Applications. All you have to do to create a working transaction is to combine a pattern with a Model.
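Here is a minimal sketch of "combine a pattern with a Model". One pre-written function plays the part of a List pattern (Controller plus View) and works unchanged with any class that extends a common abstract table class. All class and function names are hypothetical, and `getData()` returns fixed rows instead of reading a database.

```php
<?php
// Minimal sketch: one pre-written "List" pattern combined with any Model.
// All names are hypothetical; getData() returns fixed rows, not database data.
abstract class AbstractTable
{
    abstract public function getTableName(): string;

    // Would normally go through the Data Access object; here each subclass supplies rows.
    abstract public function getData(): array;
}

class PersonTable extends AbstractTable
{
    public function getTableName(): string { return 'person'; }
    public function getData(): array { return [['id' => 'P1'], ['id' => 'P2']]; }
}

class ProductTable extends AbstractTable
{
    public function getTableName(): string { return 'product'; }
    public function getData(): array { return [['id' => 'X9']]; }
}

// The pattern knows nothing about any particular table: it relies only on
// the methods that every table class provides.
function listPattern(AbstractTable $model): string
{
    $rows = $model->getData();
    $lines = [sprintf('%s: %d row(s)', $model->getTableName(), count($rows))];
    foreach ($rows as $row) {
        $lines[] = '  ' . implode(' | ', $row);
    }
    return implode("\n", $lines);
}

// Pattern X + Model Y = Transaction Z
echo listPattern(new PersonTable()), "\n";
echo listPattern(new ProductTable()), "\n";
```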

Each user transaction is made up of a combination of the components shown in Figure 9. The following reusable components are pre-built and supplied by the framework:

The following components are built by the application developer, originally by hand, but now using functions within the Data Dictionary:

```php
<?php
$table_id = "person";                  // identify the Model
$screen = 'person.detail.screen.inc';  // identify the View
require 'std.enquire1.inc';            // activate the Controller
?>
```

As you can see it does nothing but identify which Model is to be combined with which View and which Controller. Each URL points directly to one of these scripts on the file system, thus avoiding the need for a front controller.

Each transaction also requires the following database updates:

Initially each of the above disk files had to be created manually, but after I built my Data Dictionary I built components into the framework to generate them for me. I can now create working transactions in a short period of time simply by pressing a few buttons, and this has contributed greatly to my increase in productivity.
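Generating a component script like the one shown earlier is mostly string assembly. The sketch below assumes that the Data Dictionary supplies the table id, the screen structure file name and the pattern name. `generateComponentScript()` is hypothetical, and `var_export()` is used so that the values are emitted as valid PHP string literals.

```php
<?php
// Minimal sketch: build the text of a component script from three values
// that a Data Dictionary could supply. The function name is hypothetical.
function generateComponentScript(string $tableId, string $screenFile, string $pattern): string
{
    $lines = [
        '<?php',
        '$table_id = ' . var_export($tableId, true) . ';  // identify the Model',
        '$screen = ' . var_export($screenFile, true) . ';  // identify the View',
        'require ' . var_export("std.$pattern.inc", true) . ';  // activate the Controller',
        '?>',
    ];
    return implode("\n", $lines) . "\n";
}

echo generateComponentScript('person', 'person.detail.screen.inc', 'enquire1');
```

The returned text would then be written to disk with `file_put_contents()`.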

Originally I created the table class files, table structure files, component scripts and screen structure scripts by hand as they were so small and simple, but after doing this for a while on a small number of tables, and with the prospect of many more tables to follow, I realised that the entire procedure could be sped up by automating it. Where UNIFACE had an internal database known as an Application Model to record the structure of all the application databases, I created my own version which I called a Data Dictionary. However, I changed the way that it worked:

In this way I do not have to access the Data Dictionary from within a table object in order to extract the information which I need as it has already been extracted and placed in a disk file. The contents of this file are loaded into the object's common table properties when the loadFieldSpec() method is called in the class constructor.
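The constructor-time loading described above can be sketched as follows. The `<table>.dict.inc` file name, the `$fieldspec` and `$primary_key` variables and the `GenericTable` class are assumptions for illustration rather than the framework's actual file format. The point is that the exported file only assigns values, so no Data Dictionary query is needed at runtime.

```php
<?php
// Minimal sketch, assuming PHP 8. The "<table>.dict.inc" naming and the
// $fieldspec / $primary_key variables are hypothetical, not the real format.
class GenericTable
{
    public array $fieldspec = [];
    public array $primary_key = [];

    public function __construct(protected string $tablename, protected string $dir)
    {
        $this->loadFieldSpec();
    }

    // Load the exported structure file; it only assigns variables, so the
    // Data Dictionary itself is never queried at runtime.
    protected function loadFieldSpec(): void
    {
        $fieldspec = [];
        $primary_key = [];
        require "{$this->dir}/{$this->tablename}.dict.inc";
        $this->fieldspec = $fieldspec;
        $this->primary_key = $primary_key;
    }
}

// Simulate an export from the Data Dictionary into a temporary directory.
$dir = sys_get_temp_dir() . '/dict_demo_' . getmypid();
if (!is_dir($dir)) {
    mkdir($dir);
}
file_put_contents("$dir/person.dict.inc", <<<'DICT'
<?php
$fieldspec['person_id'] = ['type' => 'string', 'size' => 8, 'required' => true];
$fieldspec['first_name'] = ['type' => 'string', 'size' => 20, 'required' => true];
$primary_key = ['person_id'];
DICT);

$person = new GenericTable('person', $dir);
```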

If the structure of any table is changed, the process of making these changes available to the table objects is very simple and straightforward:

Note that UNIFACE allowed default trigger code to be defined in the Application Model which would be automatically "inherited" when an entity was copied into a form/service component, but no further inheritance was possible.

Note that when a table's information is exported from the Data Dictionary it is only the table structure file which will be overwritten so as not to lose any custom code which has been inserted into any of the "hook" methods.
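The export rule in the note above can be expressed in a few lines. The file names (`<table>.dict.inc`, `<table>.class.inc`), the `Default_Table` parent class name and `exportTable()` itself are hypothetical. What matters is that the structure file is rewritten on every export while the class file is created only once.

```php
<?php
// Minimal sketch of the export rule. File names, the Default_Table parent
// class name and exportTable() itself are hypothetical.
function exportTable(string $dir, string $table, string $structurePhp): array
{
    $written = [];

    // Structure file: always regenerated, as it holds no custom code.
    file_put_contents("$dir/$table.dict.inc", $structurePhp);
    $written[] = "$table.dict.inc";

    // Class file: created on the first export only, so hook methods added
    // later by the developer are never overwritten.
    $classFile = "$dir/$table.class.inc";
    if (!file_exists($classFile)) {
        file_put_contents($classFile, "<?php\nclass $table extends Default_Table\n{\n    // custom hook methods go here\n}\n");
        $written[] = "$table.class.inc";
    }

    return $written;
}
```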

While the objectives may have been the same, the way in which those objectives were implemented was totally different, with my PHP implementation being much faster. While it took some effort and ingenuity to build the PHP implementation, I considered this effort to be an investment as it reduced the time taken to generate table classes and the tasks needed to maintain the contents of those tables. This is why I was able to create my first ERP package containing six databases in just six months - that's one month per database.

In May 2004 I published A Role-Based Access Control (RBAC) system for PHP which described the access control mechanism which I had built into my framework. This provoked a response in 2005 when I received a query from the owner of Agreeable Notion who was interested in the functionality which I had described. He had built a website for a client which included a number of administrative screens which were for use only by members of staff, but he had not included a mechanism whereby access to particular tasks could be limited to particular users. He had also looked at my Sample Application and was suitably impressed. Rather than trying to duplicate my ideas he asked if he could use my software as a starting point, which is why in January 2006 I released my framework as open source under the brand name of RADICORE.

Unfortunately he spent so much time asking me questions on how he could get the framework to do what he wanted that he decided in the end to employ me as a subcontractor to write his software for him. He would build the front-end website while I would build the back-end administrative application. I started by writing a bespoke application for a distillery company, and I delivered it quickly enough to impress both him and the client. Afterwards we had a discussion in which he said that he could see the possibility of more of his clients wanting such administrative software, but instead of developing a separate bespoke application for each, which would be both time-consuming and costly, he wondered if I could design a general-purpose package which would be flexible enough so that it could be used by many organisations without requiring a massive amount of customisation. This was the start of a collaboration between my company RADICORE and his.

This package was given the name TRANSIX, which was derived from the name of another company he owned called Transition Engineering.

I knew from past experience that the foundation of any good database application is the database itself, and that you must start with a properly normalised database and then build your software around this structure. This knowledge came courtesy of a course in Jackson Structured Programming which I took in 1980. I had recently read a copy of Len Silverston's Data Model Resource Book, and I could instantly see the power and flexibility of his designs, so I decided to incorporate them into this ERP software. I started by building the databases for the following subsystems:

After building each of these databases I then imported their table structures into my Data Dictionary from which I created the class file for each table. Then I created the starting transactions for dealing with those tables. The framework allowed me to quickly develop the basic functionality of moving data between the user interface and the database so that I could spend more time writing the complex business rules and less time on the standard boilerplate code. Adding the business rules involved inserting the relevant code into the relevant "hook" methods as discussed in Adding custom processing.
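Hook methods follow the Template Method idea. An abstract class supplies the standard processing and calls empty methods that a concrete table class may override. In this hypothetical sketch the method names loosely follow a `_cm_` prefix for customisable methods, but they should be read as illustrative rather than as the framework's API.

```php
<?php
// Minimal sketch of "hook" methods in the Template Method style. The class
// and method names are illustrative, not the framework's actual API.
abstract class DefaultTable
{
    public array $errors = [];

    // Standard boilerplate, identical for every table.
    public function insertRecord(array $fieldarray): array
    {
        $fieldarray = $this->_cm_pre_insertRecord($fieldarray);
        $this->errors = $this->_cm_validateInsert($fieldarray);
        if (empty($this->errors)) {
            // A real implementation would call the Data Access object here.
            $fieldarray['_inserted'] = true;
        }
        return $fieldarray;
    }

    // Hooks: empty by default, so a class with no custom logic still works.
    protected function _cm_pre_insertRecord(array $fieldarray): array { return $fieldarray; }
    protected function _cm_validateInsert(array $fieldarray): array { return []; }
}

// Hypothetical table class containing only its business rules.
class OrderHeader extends DefaultTable
{
    protected function _cm_pre_insertRecord(array $fieldarray): array
    {
        $fieldarray['order_date'] ??= date('Y-m-d');  // default a missing date
        return $fieldarray;
    }

    protected function _cm_validateInsert(array $fieldarray): array
    {
        $errors = [];
        if (($fieldarray['quantity'] ?? 0) <= 0) {
            $errors['quantity'] = 'Quantity must be greater than zero';
        }
        return $errors;
    }
}
```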